电脑里没有pandas库包。或者没导入成功

NA前面不能有空格,你可以看一下老师的源代码

![]() 太牛啦兄弟!!!!we like it

太牛啦兄弟!!!!we like it

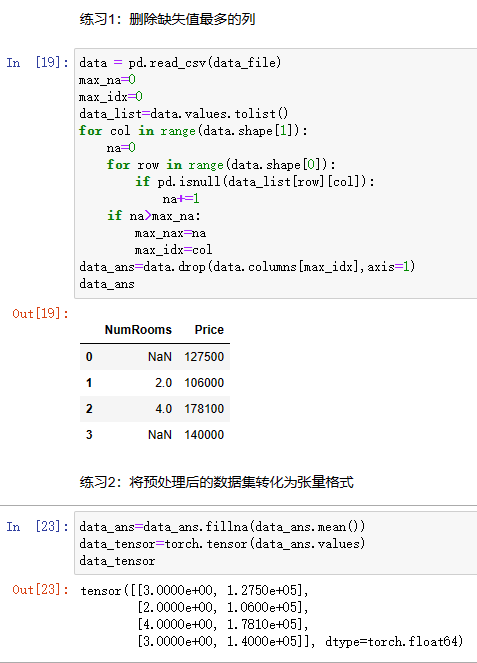

统计某一列的NaN 数据的数量

nan_col_statistics = data.isna().sum()

获取统计结果中最大值的列名

max_col_name = nan_col_statistics.idxmax()

data.drop([max_col_name], axis = 1)

想问一下大家这个问题该如何处理

import os

os.makedirs(os.path.join(‘…’, ‘data’), exist_ok=True)

Traceback (most recent call last):

File “”, line 1, in

File “C:\conda\envs\d2l\lib\os.py”, line 223, in makedirs

mkdir(name, mode)

PermissionError: [WinError 5] 拒绝访问。: ‘…\data’

data_file = os.path.join(‘…’, ‘data’, ‘house_tiny.csv’)

with open(data_file, ‘w’) as f:

… f.write(‘NumRooms,Alley,Price\n’) # 列名

… f.write(‘NA,Pave,127500\n’) # 每行表示一个数据样本

… f.write(‘2,NA,106000\n’)

… f.write(‘4,NA,178100\n’)

… f.write(‘NA,NA,140000\n’)

…

Traceback (most recent call last):

File “”, line 1, in

FileNotFoundError: [Errno 2] No such file or directory: ‘…\data\house_tiny.csv’

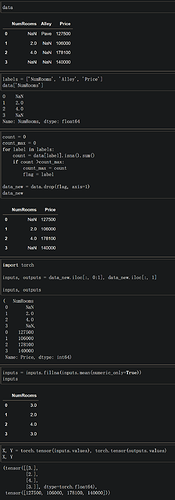

各位大佬,请问下面的代码为何没能像书中的例子那样将Name列自动转换成两列(Name_Tom, Name_nan)?

os.makedirs(os.path.join('.','data'),exist_ok=True)

datafile = os.path.join('.','data','house_tiny.csv')

with open(datafile,'w') as f:

f.write('NumRoom,Name,Price\n')

f.write('12,Tom,223\n')

f.write('NA,NA,276\n')

f.write('81,NA,654\n')

f.write('92,NA,NA\n')

f.write('76,NA,128\n')

input, output = data.iloc[:,0:2], data.iloc[:,2]

input = input.fillna(input.mean())

output = output.fillna(output.mean())

inputs = pd.get_dummies(input,dummy_na=True)

print(input)

-------运行结果--------

NumRoom Name

0 12.00 Tom

1 65.25 NaN

2 81.00 NaN

3 92.00 NaN

4 76.00 NaN

\data\house_tiny.csv前面应该是两个点

你可以这样运行一下试试,我调整了你的变量命名,我觉得可能的原因是本身input是已被命名的函数名

os.makedirs(os.path.join(’.’,‘data’),exist_ok=True)

datafile = os.path.join(’.’,‘data’,‘house_tiny.csv’)

with open(datafile,‘w’) as f:

f.write(‘NumRoom,Name,Price\n’)

f.write(‘12,Tom,223\n’)

f.write(‘NA,NA,276\n’)

f.write(‘81,NA,654\n’)

f.write(‘92,NA,NA\n’)

f.write(‘76,NA,128\n’)

inputs, outputs = data.iloc[:,0:2], data.iloc[:,2]

inputs = inputs.fillna(input.mean())

outputs = output.fillna(output.mean())

inputs = pd.get_dummies(input,dummy_na=True)

print(inputs)

谢谢.

发现我打印的是原始的input,应该是inputs.

inputs = pd.get_dummies(input,dummy_na=True)的计算结果代入的是inputs

为什么在pandas这章,最后一段代码中X, y = torch.tensor(inputs.values), torch.tensor(outputs.values)这里X会提示数据类型为float64,而如果直接创建torch.tensor([1.0,2.0]),则是float32类型?

交作业

def delete_max_na(data):

null_count_ser = data.isna().sum()

data_pd = data.dropna(axis=1,thresh=data.shape[0]-null_count_ser.max()+1)

data_np = np.array(data_pd)

return torch.tensor(data_np)

交作业,干劲满满

题一:

# 我创建的文件

import os

import torch

os.makedirs(os.path.join('..', 'data'), exist_ok=True)

data_file2 = os.path.join('..', 'data', 'house_tiny.csv')

with open(data_file2, 'w') as f:

f.write('name,age,grade,number\n') # 列名

f.write('li,12,NaN,123\n') # 每行表示一个数据样本

f.write('wang,11,NaN,234\n')

f.write('liao,NaN,NaN,NaN\n')

f.write('zhou,15,9,NaN\n')

#读取文件

import pandas as pd

from pandas import DataFrame as df

data2 = pd.read_csv(data_file2)

print(data2)

#查看每一列的缺失值的数量

data2.shape[1]

nan_num = data2.isnull().sum()

nan_num

#找出缺失值最大的列

nan_max_id = nan_num.idxmax()

nan_max_id #运行结果grade

#删除该列

data3 =data2.drop([nan_max_id], axis=1)

题二:

import torch

data3 = pd.get_dummies(data3, dummy_na=True)

print(data3)

z= torch.tensor(data3.values)

z

Z = data.iloc[:, 0:2]

print(Z)

max_nan_name = Z.keys()[np.argmax(np.array(Z.isna().sum().values))]

Z.drop(max_nan_name, axis=1, inplace=True)

print(Z)

NumRooms Alley

0 NaN Pave

1 2.0 NaN

2 4.0 NaN

3 NaN NaN

NumRooms

0 NaN

1 2.0

2 4.0

3 NaN

作业:选出NA最多的列并删除

data = pd.read_csv(data_file)

print('pre-',data)

NA_num = sum(data.isna().values)#统计各列NA的个数,生成一维array

data = data.drop(data.columns[np.argmax(NA_num)],axis = 1)#舍去最多NA的列

print('post-',data)

import re

import csv

# 将复制得到的txt数据装换为csv文件

input_PATH = os.path.join('..','data','ONIs.txt')

output_PATH = os.path.join('..','data','ONIs.csv')

# Open the input text file for reading

with open(input_PATH, 'r') as infile:

# Open the output CSV file for writing

with open(output_PATH, 'w', newline='') as outfile:

# Create a CSV writer object

writer = csv.writer(outfile)

# Loop over the input file, Spliting the line by any amount of whitespace writing it to the output CSV file

for line in infile:

writer.writerow(re.split(r'\s+', line.strip()))# line.strip()表示去掉这一行前后的空白,re.split(r'\s+')将一行

# 按照不确定数量的空格分隔开

import random

# 将得到的CSV文件随机100个取Na值(除了表头)

df = pd.read_csv(output_PATH)

for i in range(1,101):

row = random.randint(1,df.shape[0]-1)

col = random.randint(1,df.shape[1]-1)

df.iloc[row,col] = None

df

# 删除缺失值最多的列

col_with_most_nulls = df.isna().sum().idxmax()

print(col_with_most_nulls)

df.drop(col_with_most_nulls, axis=1, inplace=True)

df

# 转换为张量格式

tensors = torch.tensor(df.values)

tensors

按照你的思路查了一下,可以这样

data = data.drop(pd.isna(data).sum(axis=0).idxmax(), axis=1)

new_series = data.count()

max = new_series.argmin()

print(max)

for item in new_series.index:

if new_series[item] == max:

data=data.drop(columns=item)

print(data)