My attempts:

Exercises

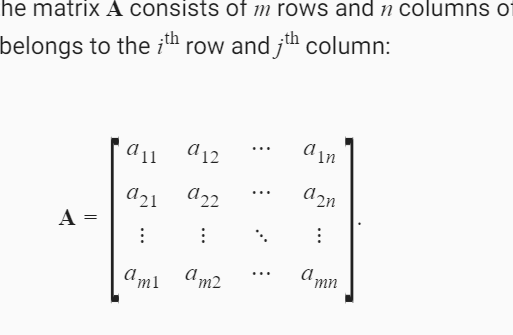

1. Prove that the transpose of the transpose of a matrix is the matrix itself: $ (\mathbf{A}^\top)^\top = \mathbf{A} $

The $ (i, j)^{th} $ element of $ \mathbf{A} $ is the $ (j, i)^{th} $ element of $ \mathbf{A}^{\top} $. Applying the same rule to $ \mathbf{A}^{\top} $, the $ (i, j)^{th} $ element of $ (\mathbf{A}^{\top})^{\top} $ is the $ (j, i)^{th} $ element of $ \mathbf{A}^{\top} $, which is the $ (i, j)^{th} $ element of $ \mathbf{A} $. Every entry agrees, so the two matrices are equal.

2. Given two matrices $ \mathbf{A} $ and $ \mathbf{B} $, show that sum and transposition commute: $ \mathbf{A}^\top + \mathbf{B}^\top = (\mathbf{A} + \mathbf{B})^\top $.

Write $ a_{ij} $ and $ b_{ij} $ for the $ (i, j)^{th} $ elements of $ \mathbf{A} $ and $ \mathbf{B} $. The $ (i, j)^{th} $ element of $ \mathbf{A}^\top + \mathbf{B}^\top $ is $ a_{ji} + b_{ji} $. The $ (i, j)^{th} $ element of $ (\mathbf{A} + \mathbf{B})^\top $ is the $ (j, i)^{th} $ element of $ \mathbf{A} + \mathbf{B} $, which is also $ a_{ji} + b_{ji} $. Therefore the two sides are equal.

3. Given any square matrix $ \mathbf{A} $ , is $ \mathbf{A} $ + $ \mathbf{A}^{\top} $ always symmetric? Can you prove the result by using only the result of the previous two exercises?

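Yes. Let $ \mathbf{S} = \mathbf{A} + \mathbf{A}^{\top} $. By exercise 2, $ \mathbf{S}^{\top} = \mathbf{A}^{\top} + (\mathbf{A}^{\top})^{\top} $, and by exercise 1 this is $ \mathbf{A}^{\top} + \mathbf{A} = \mathbf{S} $. Entry-wise, the $ (i, j)^{th} $ and $ (j, i)^{th} $ values of $ \mathbf{A} $ are added to each other in both positions, which is what makes the sum symmetric.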
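A quick numerical sanity check of exercises 1 to 3 might look like the sketch below. The 4×4 size and the variable names are arbitrary choices for illustration; this checks the identities on one random example and is not a proof.

```python
import torch

# Small random square matrices; the size is an arbitrary choice.
A = torch.randn(4, 4)
B = torch.randn(4, 4)

# Exercise 1: transposing twice gives back the original matrix.
double_transpose_ok = torch.equal(A.T.T, A)

# Exercise 2: sum and transposition commute.
sum_commutes_ok = torch.equal(A.T + B.T, (A + B).T)

# Exercise 3: A + A^T equals its own transpose, i.e. it is symmetric.
S = A + A.T
symmetric_ok = torch.equal(S, S.T)

print(double_transpose_ok, sum_commutes_ok, symmetric_ok)
```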

4. We defined the tensor $ \mathsf{X} $ of shape (2, 3, 4) in this section. What is the output of len(X)? Write your answer without implementing any code, then check your answer using code.

2, since we’re taking the length of a “list” that contains elements of shape (3, 4).

X = torch.arange(2 * 3 * 4, dtype = torch.float32).reshape(2, 3, 4)

len(X)

2

5. For a tensor $ \mathsf{X} $ of arbitrary shape, does len(X) always correspond to the length of a certain axis of $ \mathsf{X} $? What is that axis?

Yes, axis 0
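To check this for more than one shape, a small sketch like the following compares len with the size of axis 0. The list of shapes is made up for illustration. As far as I know, a 0-dimensional tensor has no axis 0, so len raises an error there instead.

```python
import torch

# Hypothetical shapes chosen only to illustrate the pattern.
shapes = [(5,), (2, 3), (2, 3, 4), (7, 1, 1, 2)]

# len() of each tensor, to compare against the first entry of its shape.
lengths = [len(torch.zeros(shape)) for shape in shapes]

print(lengths)  # [5, 2, 2, 7]
```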

6. Run A / A.sum(axis=1) and see what happens. Can you analyze the reason?

A / A.sum(axis=1)

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Input In [68], in <cell line: 1>()

----> 1 A / A.sum(axis=1)

RuntimeError: The size of tensor a (3) must match the size of tensor b (2) at non-singleton dimension 1

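The reason is that the shapes don’t line up for broadcasting. Judging by the error message, A has shape (2, 3), so A.sum(axis=1) has shape (2,). Broadcasting aligns shapes starting from the last axis, which compares 3 against 2; the sizes differ and neither is 1, so broadcasting won’t save us.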
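One way to make the division work is to keep the reduced axis with keepdims=True, so the sums have shape (2, 1) and broadcast across the columns. The sketch below assumes A has shape (2, 3), which is what the error message suggests; the actual A used above may have different values.

```python
import torch

# Assumed shape (2, 3), inferred from the error message.
A = torch.arange(6, dtype=torch.float32).reshape(2, 3)

row_sums = A.sum(axis=1)                       # shape (2,): cannot broadcast against (2, 3)
row_sums_kept = A.sum(axis=1, keepdims=True)   # shape (2, 1): broadcasts across columns

# Each row is divided by its own sum, so every row now sums to 1.
normalized = A / row_sums_kept

print(row_sums.shape, row_sums_kept.shape)
print(normalized.sum(axis=1))
```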

7. When traveling between two points in downtown Manhattan, what is the distance that you need to cover in terms of the coordinates, i.e., in terms of avenues and streets? Can you travel diagonally?

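I don’t know what avenues are. But I believe we travel along streets/roads that lead us to our destination, so in terms of coordinates the distance is the sum of the absolute differences along each direction, $ |x_1 - x_2| + |y_1 - y_2| $. We can’t travel diagonally since there will be things like houses and other buildings/parks/private properties getting in the way.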
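As a rough illustration, the grid distance can be compared with the straight-line distance as below. The (avenue, street) coordinates are hypothetical numbers picked for the example.

```python
import torch

# Hypothetical (avenue, street) grid coordinates of two points.
start = torch.tensor([3.0, 14.0])
end = torch.tensor([7.0, 42.0])

# Grid (Manhattan) distance: sum of absolute coordinate differences.
manhattan = torch.abs(end - start).sum()

# Straight-line (Euclidean) distance, which would require going diagonally.
euclidean = torch.linalg.norm(end - start)

print(manhattan)  # tensor(32.)
print(euclidean)
```

The grid distance is never shorter than the straight-line one, which matches the intuition that the buildings force a detour.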


8. Consider a tensor with shape (2, 3, 4). What are the shapes of the summation outputs along axis 0, 1, and 2?

X.sum(axis = 0).shape, X.sum(axis = 1).shape, X.sum(axis = 2).shape

(torch.Size([3, 4]), torch.Size([2, 4]), torch.Size([2, 3]))

The axis along which we reduce is removed from the output shape
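For comparison, the sketch below lists the shapes with and without keepdims=True. With it, the reduced axis stays in the shape with size 1 instead of disappearing. The variable names are just for illustration.

```python
import torch

X = torch.arange(2 * 3 * 4, dtype=torch.float32).reshape(2, 3, 4)

# Default behaviour: the reduced axis is dropped from the shape.
reduced_shapes = [tuple(X.sum(axis=i).shape) for i in range(3)]

# With keepdims=True the reduced axis is kept with size 1.
kept_shapes = [tuple(X.sum(axis=i, keepdims=True).shape) for i in range(3)]

print(reduced_shapes)  # [(3, 4), (2, 4), (2, 3)]
print(kept_shapes)     # [(1, 3, 4), (2, 1, 4), (2, 3, 1)]
```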

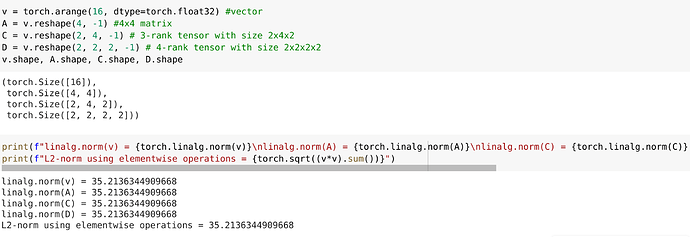

9. Feed a tensor with 3 or more axes to the linalg.norm function and observe its output. What does this function compute for tensors of arbitrary shape?

X, torch.linalg.norm(X)

(tensor([[[ 0., 1., 2., 3.],

[ 4., 5., 6., 7.],

[ 8., 9., 10., 11.]],

[[12., 13., 14., 15.],

[16., 17., 18., 19.],

[20., 21., 22., 23.]]]),

tensor(65.7571))

Sum = 0

for item in X.flatten():

    Sum += item ** 2

torch.sqrt(Sum)

tensor(65.7571)

Looks like it simply sums the squares of all the elements in the tensor, whatever its shape, and then takes the square root. In other words, it is the $ \ell_2 $ norm of the flattened tensor
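The same comparison can be written without the Python loop. This is a minimal sketch that assumes the default arguments of torch.linalg.norm (no ord, no dim), which is how it was called above.

```python
import torch

X = torch.arange(2 * 3 * 4, dtype=torch.float32).reshape(2, 3, 4)

norm_full = torch.linalg.norm(X)              # norm of the 3-axis tensor
norm_flat = torch.linalg.norm(X.flatten())    # norm of the same values as a vector
norm_manual = torch.sqrt((X ** 2).sum())      # square root of the sum of squares

print(norm_full, norm_flat, norm_manual)
```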

10. Define three large matrices, say $ \mathbf{A} \in \mathbb{R}^{2^{10} \times 2^{16}} $, $ \mathbf{B} \in \mathbb{R}^{2^{16} \times 2^{5}} $ and $ \mathbf{C} \in \mathbb{R}^{2^{5} \times 2^{16}} $, for instance initialized with Gaussian random variables. You want to compute the product $ \mathbf{A} \mathbf{B} \mathbf{C} $. Is there any difference in memory footprint and speed, depending on whether you compute $ (\mathbf{A} \mathbf{B}) \mathbf{C} $ or $ \mathbf{A} (\mathbf{B} \mathbf{C}) $? Why?

$ (\mathbf{A} \mathbf{B}) \mathbf{C} $ will result in time being $ \mathcal{O}(2^{10} \times 2^{16} \times 2^5 + 2^{10} \times 2^{5} \times 2^{16} ) $

$ \mathbf{A} (\mathbf{B} \mathbf{C}) $ will result in time being $ \mathcal{O}(2^{10} \times 2^{16} \times 2^{16} + 2^{16} \times 2^{5} \times 2^{16} ) $

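The latter is much more expensive, because multiplying an $ n \times m $ matrix by an $ m \times k $ matrix with the standard algorithm costs $ \mathcal{O}(n \times m \times k) $. That gives roughly $ 2^{32} $ multiplications for $ (\mathbf{A} \mathbf{B}) \mathbf{C} $ versus $ 2^{37} + 2^{42} $ for $ \mathbf{A} (\mathbf{B} \mathbf{C}) $. The memory footprint differs too: the intermediate $ \mathbf{A} \mathbf{B} $ has only $ 2^{10} \times 2^{5} = 2^{15} $ entries, while $ \mathbf{B} \mathbf{C} $ has $ 2^{16} \times 2^{16} = 2^{32} $ entries, which is 16 GiB in float32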
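The arithmetic behind this comparison can be spelled out in plain Python. This is only a count under the standard $ \mathcal{O}(n \times m \times k) $ cost model, with 4 bytes per float32 entry assumed; it does not allocate the matrices.

```python
# Dimensions from the exercise: A is n x m, B is m x k, C is k x m.
n, m, k = 2 ** 10, 2 ** 16, 2 ** 5
bytes_per_float32 = 4

# (AB)C: first AB (n x m times m x k), then (AB)C (n x k times k x m).
cost_ab_c = n * m * k + n * k * m
intermediate_ab_bytes = n * k * bytes_per_float32

# A(BC): first BC (m x k times k x m), then A(BC) (n x m times m x m).
cost_a_bc = m * k * m + n * m * m
intermediate_bc_bytes = m * m * bytes_per_float32

print(cost_a_bc / cost_ab_c)             # 1056.0
print(intermediate_ab_bytes / 2 ** 10)   # 128.0 (KiB)
print(intermediate_bc_bytes / 2 ** 30)   # 16.0 (GiB)
```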

11. Define three large matrices, say $ \mathbf{A} \in \mathbb{R}^{2^{10} \times 2^{16}} $ , $ \mathbf{B} \in \mathbb{R}^{2^{16} \times 2^{5}} $ and $ \mathbf{C} \in \mathbb{R}^{2^{5} \times 2^{16}} $. Is there any difference in speed depending on whether you compute $ \mathbf{A} \mathbf{B} $ or $ \mathbf{A} \mathbf{C}^\top $? Why? What changes if you initialize $ \mathbf{C} = \mathbf{B}^\top $ without cloning memory? Why?

A = torch.randn( (2 ** 10, 2 ** 16) )

B = torch.randn( (2 ** 16, 2 ** 5) )

C = torch.randn( (2 ** 5, 2 ** 16) )

%time

temp = A @ B

CPU times: user 2 µs, sys: 0 ns, total: 2 µs

Wall time: 5.96 µs

%time

temp = A @ C.T

CPU times: user 2 µs, sys: 0 ns, total: 2 µs

Wall time: 5.48 µs

%time

temp = A @ B.T

CPU times: user 2 µs, sys: 0 ns, total: 2 µs

Wall time: 5.25 µs

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Input In [101], in <cell line: 3>()

1 get_ipython().run_line_magic('time', '')

----> 3 temp = A @ B.T

RuntimeError: mat1 and mat2 shapes cannot be multiplied (1024x65536 and 32x65536)

One caveat about the timings above: %time on a line by itself times an empty statement (the traceback shows run_line_magic('time', '')), so the readings of about 5 µs don’t measure the matrix products at all. The statement has to sit on the same line as %time, or the cell has to start with %%time.

In principle, $ \mathbf{A} \mathbf{C}^{\top} $ can be faster because of how the data is laid out in memory. torch stores tensors in row-major order by default, so reading along a row is contiguous and reading down a column is strided. A matrix product reads the second operand column by column, which for $ \mathbf{B} $ means strided accesses. Taking $ \mathbf{C}^{\top} $ doesn’t move any data: it is a logical transpose, not a physical one, so indexing the transposed matrix at $ (i, j) $ is internally converted to $ (j, i) $ of $ \mathbf{C} $. A column of $ \mathbf{C}^{\top} $ is therefore a row of $ \mathbf{C} $ in memory, and the column-wise reads become contiguous. How big the difference is in practice depends on the backend, since optimized matmul libraries can handle both layouts

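I’m not sure what the last part is asking. My attempt above computed A @ B.T, which fails because $ \mathbf{B}^{\top} $ has shape $ 2^{5} \times 2^{16} $ and can’t be multiplied with $ \mathbf{A} $. If the intent is to set $ \mathbf{C} = \mathbf{B}^{\top} $ and then compute $ \mathbf{A} \mathbf{C}^{\top} $, then $ \mathbf{C} $ is only a view of the memory of $ \mathbf{B} $, so $ \mathbf{C}^{\top} $ has the same layout as $ \mathbf{B} $ and I’d expect the same speed as $ \mathbf{A} \mathbf{B} $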
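Here is a sketch of what “without cloning memory” could mean, together with a timing helper that runs outside IPython. The matrix sizes are deliberately smaller than in the exercise to keep memory modest, and timed, C_view and C_copy are made-up names. No timings are quoted, since they depend on the machine and backend.

```python
import time
import torch

# Smaller than the exercise's sizes (an assumption, to keep this cheap to run).
A = torch.randn(2 ** 8, 2 ** 12)
B = torch.randn(2 ** 12, 2 ** 5)

C_view = B.T               # no copy: same storage as B, strides swapped
C_copy = B.T.contiguous()  # physical copy stored in row-major order

shares_memory = C_view.data_ptr() == B.data_ptr()
copy_is_separate = C_copy.data_ptr() != B.data_ptr()

def timed(fn, repeats=10):
    """Average wall-clock seconds per call of fn over a few repeats."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats

t_ab = timed(lambda: A @ B)          # second operand contiguous
t_view = timed(lambda: A @ C_view.T) # C_view.T has the same layout as B
t_copy = timed(lambda: A @ C_copy.T) # second operand is a strided view

print(shares_memory, copy_is_separate)
print(C_view.is_contiguous(), C_view.T.is_contiguous(), C_copy.T.is_contiguous())
print(t_ab, t_view, t_copy)
```

All three products compute the same matrix; only the memory layout of the second operand differs.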

12. Define three matrices, say $ \mathbf{A}, \mathbf{B}, \mathbf{C} \in \mathbb{R}^{100 \times 200} $. Constitute a tensor with 3 axes by stacking $ [\mathbf{A}, \mathbf{B}, \mathbf{C}] $ . What is the dimensionality? Slice out the second coordinate of the third axis to recover $\mathbf{B} $ . Check that your answer is correct.

A = torch.randn( (100, 200) )

B = torch.randn( (100, 200) )

C = torch.randn( (100, 200) )

stacked = torch.stack([ A, B, C ])

print(f"{stacked.shape = }")

stacked[1] == B

stacked.shape = torch.Size([3, 100, 200])

tensor([[True, True, True, ..., True, True, True],

[True, True, True, ..., True, True, True],

[True, True, True, ..., True, True, True],

...,

[True, True, True, ..., True, True, True],

[True, True, True, ..., True, True, True],

[True, True, True, ..., True, True, True]])
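The exercise mentions slicing the third axis, while torch.stack puts the new axis first by default. The sketch below shows both variants and uses torch.equal to get a single boolean rather than a full tensor of True values. Passing dim=2 is my reading of what the exercise intends, not something it states.

```python
import torch

A = torch.randn(100, 200)
B = torch.randn(100, 200)
C = torch.randn(100, 200)

# Default: the new axis comes first, giving shape (3, 100, 200).
stacked_first = torch.stack([A, B, C])
recovered_first = stacked_first[1]

# Stacking along the last axis instead, giving shape (100, 200, 3).
stacked_last = torch.stack([A, B, C], dim=2)
recovered_last = stacked_last[:, :, 1]

print(stacked_first.shape, stacked_last.shape)
print(torch.equal(recovered_first, B), torch.equal(recovered_last, B))
```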

I have no idea why the latex rendering isn’t working

@goldpiggy @mli sorry for pinging like this but can anything be done about this? It’s very disheartening to see all my efforts to write equations in an aesthetically pleasing way turn out like this

Thanks in advance

Edit: I found a post linking to what seems to be an official plugin for enabling LaTeX support. Maybe that can help? Discourse Math - plugin - Discourse Meta