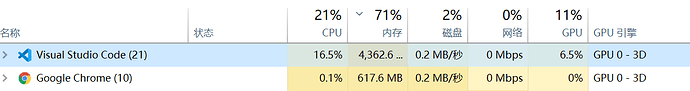

It takes this long for a full run on my machine, and the GPU utilization doesn't look very high either.

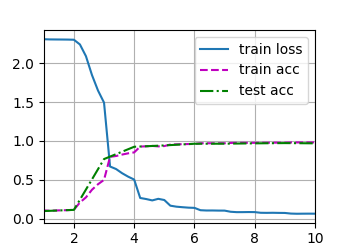

loss 0.464, train acc 0.825, test acc 0.808

76627.1 examples/sec on cuda:0

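As soree_yo said, you need to add

plt.draw()

plt.pause(0.001)

just above the second-to-last line of the add() method of the Animator class, and then add plt.show() at the end of train_ch6 (as its last line). That solves it.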
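A minimal sketch of what the patched class can look like when run as a plain script (for example in PyCharm). This `Animator` is a simplified, hypothetical stand-in written only with matplotlib, not the exact d2l class: its add() redraws the curves, then calls plt.draw() and plt.pause(0.001) as described above, and a show() method wraps the final plt.show().

```python
import matplotlib.pyplot as plt


class Animator:
    """Hypothetical, simplified stand-in for d2l's Animator that updates a
    regular matplotlib window instead of a Jupyter output cell."""

    def __init__(self, xlabel=None, legend=None, xlim=None, ylim=None,
                 fmts=('-', 'm--', 'g-.', 'r:')):
        self.fig, self.ax = plt.subplots(figsize=(3.5, 2.5))
        self.xlabel, self.legend = xlabel, legend or []
        self.xlim, self.ylim, self.fmts = xlim, ylim, fmts
        self.X, self.Y = None, None

    def _config_axes(self):
        if self.xlabel:
            self.ax.set_xlabel(self.xlabel)
        if self.xlim:
            self.ax.set_xlim(self.xlim)
        if self.ylim:
            self.ax.set_ylim(self.ylim)
        if self.legend:
            self.ax.legend(self.legend)
        self.ax.grid()

    def add(self, x, y):
        # Accept a scalar or a sequence of y values (one per curve).
        if not hasattr(y, "__len__"):
            y = [y]
        n = len(y)
        if not hasattr(x, "__len__"):
            x = [x] * n
        if self.X is None:
            self.X = [[] for _ in range(n)]
            self.Y = [[] for _ in range(n)]
        for i, (a, b) in enumerate(zip(x, y)):
            if a is not None and b is not None:
                self.X[i].append(a)
                self.Y[i].append(b)
        self.ax.cla()
        for xs, ys, fmt in zip(self.X, self.Y, self.fmts):
            self.ax.plot(xs, ys, fmt)
        self._config_axes()
        # The fix from the reply: render the updated figure and give the
        # GUI event loop a moment to actually show it.
        plt.draw()
        plt.pause(0.001)

    def show(self):
        # Call once after training so the window stays open.
        plt.show()
```

Inside train_ch6 this would be constructed as `animator = Animator(xlabel='epoch', xlim=[1, num_epochs], legend=['train loss', 'train acc', 'test acc'])`, with `animator.show()` called after the epoch loop.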

In my experience, two linear layers with 20 epochs give the best performance. I can't explain why, but I guess it fits the data very well.

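Mu Li's Animator class needs some modification before it runs well in PyCharm.

I posted the code on CSDN; help yourself:

The main change is to the Animator class: replace Mu Li's Animator class with my modified one, and construct it with animator = Animator(legend=['train loss', 'train acc', 'test acc'])

Then, when you want to display the final figure, just call animator.show().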

    Empty                                     Traceback (most recent call last)
    File D:\miniconda\envs\d2l\lib\site-packages\torch\utils\data\dataloader.py:1163, in _MultiProcessingDataLoaderIter._try_get_data(self, timeout)
       1162 try:
    -> 1163 data = self._data_queue.get(timeout=timeout)
       1164 return (True, data)

    File D:\miniconda\envs\d2l\lib\multiprocessing\queues.py:108, in Queue.get(self, block, timeout)
        107 if not self._poll(timeout):
    --> 108 raise Empty
        109 elif not self._poll():

    Empty:

    The above exception was the direct cause of the following exception:

    RuntimeError                              Traceback (most recent call last)
    Input In [6], in <cell line: 2>()
          1 lr, num_epochs = 0.9, 10
    ----> 2 train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())

    Input In [5], in train_ch6(net, train_iter, test_iter, num_epochs, lr, device)
         17 metric = d2l.Accumulator(3)
         18 net.train()
    ---> 19 for i, (X, y) in enumerate(train_iter):
         20 timer.start()
         21 optimizer.zero_grad()

    File D:\miniconda\envs\d2l\lib\site-packages\torch\utils\data\dataloader.py:681, in _BaseDataLoaderIter.__next__(self)
        678 if self._sampler_iter is None:
        679 # TODO(Bug in dataloader iterator found by mypy · Issue #76750 · pytorch/pytorch · GitHub)
        680 self._reset() # type: ignore[call-arg]
    --> 681 data = self._next_data()
        682 self._num_yielded += 1
        683 if self._dataset_kind == _DatasetKind.Iterable and
        684 self._IterableDataset_len_called is not None and
        685 self._num_yielded > self._IterableDataset_len_called:

    File D:\miniconda\envs\d2l\lib\site-packages\torch\utils\data\dataloader.py:1359, in _MultiProcessingDataLoaderIter._next_data(self)
       1356 return self._process_data(data)
       1358 assert not self._shutdown and self._tasks_outstanding > 0
    -> 1359 idx, data = self._get_data()
       1360 self._tasks_outstanding -= 1
       1361 if self._dataset_kind == _DatasetKind.Iterable:
       1362 # Check for _IterableDatasetStopIteration

    File D:\miniconda\envs\d2l\lib\site-packages\torch\utils\data\dataloader.py:1325, in _MultiProcessingDataLoaderIter._get_data(self)
       1321 # In this case, self._data_queue is a queue.Queue,. But we don't
       1322 # need to call .task_done() because we don't use .join().
       1323 else:
       1324 while True:
    -> 1325 success, data = self._try_get_data()
       1326 if success:
       1327 return data

    File D:\miniconda\envs\d2l\lib\site-packages\torch\utils\data\dataloader.py:1176, in _MultiProcessingDataLoaderIter._try_get_data(self, timeout)
       1174 if len(failed_workers) > 0:
       1175 pids_str = ', '.join(str(w.pid) for w in failed_workers)
    -> 1176 raise RuntimeError('DataLoader worker (pid(s) {}) exited unexpectedly'.format(pids_str)) from e
       1177 if isinstance(e, queue.Empty):
       1178 return (False, None)

    RuntimeError: DataLoader worker (pid(s) 15336, 3572) exited unexpectedly

Why am I getting this error?
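The traceback ends in the DataLoader's worker processes, which exist because num_workers > 0 (d2l.get_dataloader_workers() returns 4). A common first thing to try on Windows, offered here as a general workaround rather than a confirmed diagnosis of this crash, is to load data in the main process with num_workers=0 and, when running a script, to keep the training code under an `if __name__ == "__main__":` guard. The sketch uses a random TensorDataset as a stand-in for Fashion-MNIST; `make_loaders` is a hypothetical helper.

```python
import torch
from torch.utils import data


def make_loaders(batch_size=256, num_workers=0):
    # Random stand-in data shaped like Fashion-MNIST: (N, 1, 28, 28) images.
    X = torch.randn(1000, 1, 28, 28)
    y = torch.randint(0, 10, (1000,))
    dataset = data.TensorDataset(X, y)
    # num_workers=0 loads batches in the main process: slower, but there
    # are no worker subprocesses that can exit unexpectedly.
    train_iter = data.DataLoader(dataset, batch_size, shuffle=True,
                                 num_workers=num_workers)
    test_iter = data.DataLoader(dataset, batch_size, shuffle=False,
                                num_workers=num_workers)
    return train_iter, test_iter


if __name__ == "__main__":
    # On Windows, workers are started with "spawn" and re-import the main
    # module; this guard stops them from re-running the training code.
    train_iter, test_iter = make_loaders()
    for X, y in train_iter:
        print(X.shape, y.shape)
        break
```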

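Because the learning rate is too large. Switching lr to 0.1, or using Adam (covered later), gives a noticeable improvement: with a learning rate that is too large, the parameters keep oscillating back and forth around the optimum instead of settling into it.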
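The oscillation mechanism can be seen on a toy 1-D loss f(w) = w², where each gradient step multiplies w by (1 − 2·lr). This only illustrates the idea; it is not a model of LeNet's loss surface, and `gd_trajectory` is a hypothetical helper. With lr = 0.1 the factor is 0.8 and w shrinks monotonically; with lr = 0.95 the factor is −0.9, so w flips sign every step and converges more slowly; with lr = 1.05 the factor is −1.1 and it diverges.

```python
def gd_trajectory(lr, w0=1.0, steps=6):
    """Plain gradient descent on the toy loss f(w) = w**2 (gradient 2w)."""
    w, path = w0, [w0]
    for _ in range(steps):
        w = w - lr * 2 * w  # each step multiplies w by (1 - 2 * lr)
        path.append(w)
    return path


for lr in (0.1, 0.95, 1.05):
    print(lr, [round(w, 3) for w in gd_trajectory(lr)])
```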

excellent replies! I understand now

Q1: What happens if average pooling is replaced with max pooling?

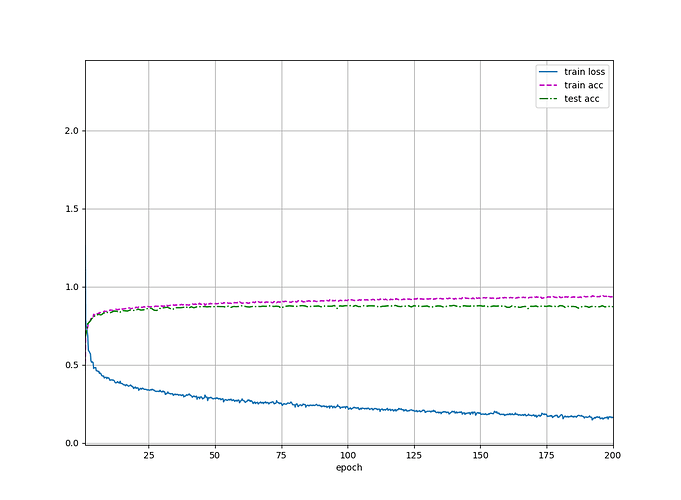

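A1: A quick comparison shows that, compared with max pooling, average pooling overfits less (lower train accuracy but higher test accuracy) and reaches somewhat higher test classification accuracy.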

Avg Pooling, epoch 10, lr 0.9: loss 0.465, train acc 0.825, test acc 0.793

Max Pooling, epoch 10, lr 0.9: loss 0.432, train acc 0.838, test acc 0.776

Avg Pooling, epoch 20, lr 0.9: loss 0.356, train acc 0.867, test acc 0.857

Max Pooling, epoch 20, lr 0.9: loss 0.316, train acc 0.883, test acc 0.849
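A minimal sketch of how the two variants can be built side by side, assuming the chapter's Sigmoid LeNet; `lenet` is a hypothetical helper that only swaps the pooling class. Pooling layers have no weights, so both variants have identical shapes and parameter counts; only the downsampling rule changes.

```python
import torch
from torch import nn


def lenet(pool="avg"):
    """Chapter-style LeNet (Sigmoid activations); pool="max" swaps both
    AvgPool2d layers for MaxPool2d."""
    Pool = nn.AvgPool2d if pool == "avg" else nn.MaxPool2d
    return nn.Sequential(
        nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
        Pool(kernel_size=2, stride=2),
        nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
        Pool(kernel_size=2, stride=2),
        nn.Flatten(),
        nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
        nn.Linear(120, 84), nn.Sigmoid(),
        nn.Linear(84, 10))


X = torch.rand(2, 1, 28, 28)  # two dummy 28x28 grayscale images
for kind in ("avg", "max"):
    net = lenet(kind)
    print(kind, tuple(net(X).shape), sum(p.numel() for p in net.parameters()))
```

To reproduce the comparison, pass lenet("max") to train_ch6 with the same lr and num_epochs as the average-pooling run.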

Q2: Try to build a more complex network based on LeNet to improve its accuracy.

    from torch import nn

    # Model definition
    changed_net = nn.Sequential(
        nn.Conv2d(1, 8, kernel_size=5, padding=2), nn.ReLU(),
        nn.AvgPool2d(kernel_size=2, stride=2),
        # nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Conv2d(8, 16, kernel_size=3, padding=1), nn.ReLU(),
        nn.AvgPool2d(kernel_size=2, stride=2),
        # nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Conv2d(16, 32, kernel_size=3, padding=1), nn.ReLU(),
        nn.AvgPool2d(kernel_size=2, stride=2),
        # nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Flatten(),
        nn.Linear(32 * 3 * 3, 128), nn.Sigmoid(),
        nn.Linear(128, 64), nn.Sigmoid(),
        nn.Linear(64, 32), nn.Sigmoid(),
        nn.Linear(32, 10)
    )

The network structure is as follows:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1            [-1, 8, 28, 28]             208
                  ReLU-2            [-1, 8, 28, 28]               0
             AvgPool2d-3            [-1, 8, 14, 14]               0
                Conv2d-4           [-1, 16, 14, 14]           1,168
                  ReLU-5           [-1, 16, 14, 14]               0
             AvgPool2d-6             [-1, 16, 7, 7]               0
                Conv2d-7             [-1, 32, 7, 7]           4,640
                  ReLU-8             [-1, 32, 7, 7]               0
             AvgPool2d-9             [-1, 32, 3, 3]               0
              Flatten-10                  [-1, 288]               0
               Linear-11                  [-1, 128]          36,992
              Sigmoid-12                  [-1, 128]               0
               Linear-13                   [-1, 64]           8,256
              Sigmoid-14                   [-1, 64]               0
               Linear-15                   [-1, 32]           2,080
              Sigmoid-16                   [-1, 32]               0
               Linear-17                   [-1, 10]             330
    ================================================================
    Total params: 53,674
    Trainable params: 53,674
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.00
    Forward/backward pass size (MB): 0.19
    Params size (MB): 0.20
    Estimated Total Size (MB): 0.40
    ----------------------------------------------------------------
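A dependency-free way to cross-check the output shapes and parameter count in a summary table like the one above: push one dummy 28×28 image through the Sequential model layer by layer. `layer_summary` is a hypothetical helper written for this sketch, not part of d2l or PyTorch.

```python
import torch
from torch import nn


def layer_summary(net: nn.Sequential, input_shape=(1, 1, 28, 28)):
    """Return [(layer name, output shape), ...] and the total parameter
    count of a Sequential model, using one random input."""
    X = torch.rand(*input_shape)
    rows = []
    with torch.no_grad():
        for i, layer in enumerate(net, start=1):
            X = layer(X)
            rows.append((f"{layer.__class__.__name__}-{i}", tuple(X.shape)))
    total = sum(p.numel() for p in net.parameters())
    return rows, total
```

Usage: `rows, total = layer_summary(changed_net)`, then print each row; for changed_net the last row should be ("Linear-17", (1, 10)) and total should match the 53,674 above.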

Avg Pooling, epoch 20, lr 0.6: loss 0.308, train acc 0.885, test acc 0.863

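Q3: Try the improved network above on the MNIST dataset.

    # Use the MNIST dataset instead of Fashion-MNIST
    import torchvision
    from torchvision import transforms
    from torch.utils import data
    from d2l import torch as d2l

    batch_size = 256  # assumed: the batch size used in the chapter
    trans = transforms.ToTensor()
    mnist_train = torchvision.datasets.MNIST(
        root="../data", train=True, transform=trans, download=True)
    mnist_test = torchvision.datasets.MNIST(
        root="../data", train=False, transform=trans, download=True)
    train_iter = data.DataLoader(mnist_train, batch_size, shuffle=True,
                                 num_workers=d2l.get_dataloader_workers())
    # No need to shuffle the evaluation set
    test_iter = data.DataLoader(mnist_test, batch_size, shuffle=False,
                                num_workers=d2l.get_dataloader_workers())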

Final result: Avg Pooling, epoch 10, lr 0.6: loss 0.064, train acc 0.981, test acc 0.970

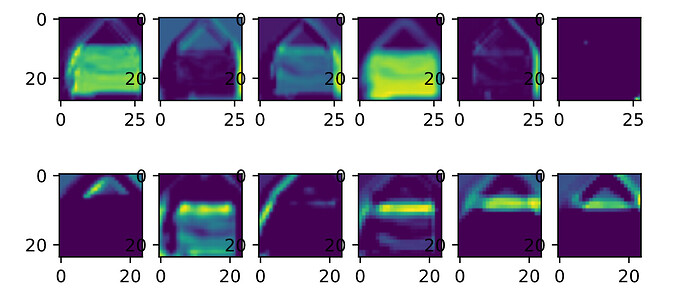

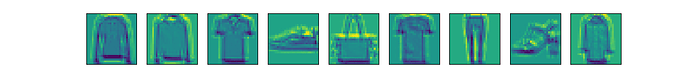

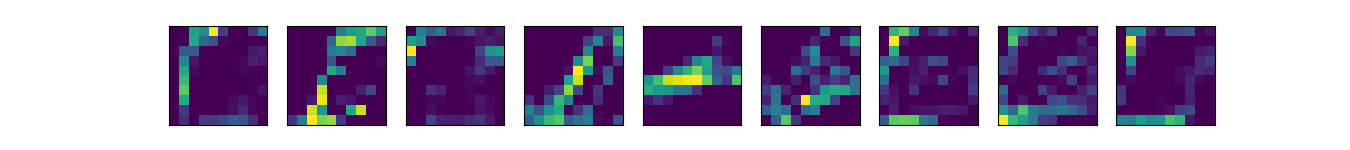

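Q4: Display the activations of LeNet's first and second layers for different inputs (e.g., sweaters and coats).

In train_ch6, add the following code between X, y = X.to(device), y.to(device) and y_hat = net(X):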

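    # Output of the first Sigmoid: (batch, 6, 28, 28); keep channel 1 of the first 9 images
    x_first_Sigmoid_layer = net[0:2](X)[0:9, 1, :, :]
    d2l.show_images(x_first_Sigmoid_layer.reshape(9, 28, 28).cpu().detach(), 1, 9)
    # Output of the second Sigmoid: (batch, 16, 10, 10)
    x_second_Sigmoid_layer = net[0:5](X)[0:9, 1, :, :]
    d2l.show_images(x_second_Sigmoid_layer.reshape(9, 10, 10).cpu().detach(), 1, 9)
    # d2l.plt.show()  # uncomment when running outside Jupyter, e.g. in PyCharm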

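The images after the first Sigmoid (top) and the second Sigmoid (bottom) are shown below:

Shouldn't this go after the epoch loop? Otherwise both activation layers get plotted on every batch.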
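Following the suggestion to do this once after training, here is a minimal sketch, assuming the chapter's LeNet layout (so net[0:2] ends at the first Sigmoid and net[0:5] at the second). `first_two_activations` and `plot_activations` are hypothetical helpers; they compute the activations without gradients and plot one column per input image.

```python
import torch
from torch import nn
import matplotlib.pyplot as plt


def first_two_activations(net, X, channel=1):
    """Outputs of the first (net[0:2]) and second (net[0:5]) Sigmoid layers
    for a batch X, keeping a single channel per image."""
    with torch.no_grad():
        a1 = net[0:2](X)[:, channel]  # (B, 28, 28) for the chapter's LeNet
        a2 = net[0:5](X)[:, channel]  # (B, 10, 10)
    return a1.cpu(), a2.cpu()


def plot_activations(a1, a2, titles=None):
    # Top row: first Sigmoid; bottom row: second Sigmoid.
    n = a1.shape[0]
    fig, axes = plt.subplots(2, n, figsize=(1.5 * n, 3), squeeze=False)
    for j in range(n):
        axes[0][j].imshow(a1[j].numpy())
        axes[1][j].imshow(a2[j].numpy())
        axes[0][j].axis("off")
        axes[1][j].axis("off")
        if titles:
            axes[0][j].set_title(titles[j])
    return fig
```

After training, pick a few test images with Fashion-MNIST label 2 (pullover) and label 4 (coat), move them to the same device as net, call first_two_activations, and pass the result to plot_activations followed by plt.show().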

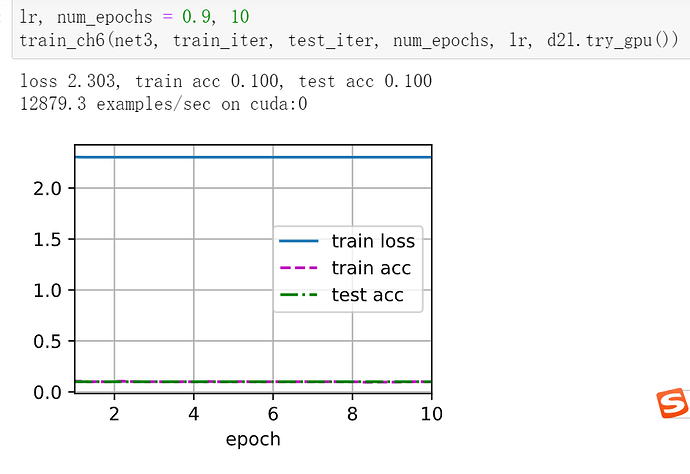

    from torch import nn

    net3 = nn.Sequential(
        nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.ReLU(),
        nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Conv2d(6, 16, kernel_size=5), nn.ReLU(),
        nn.MaxPool2d(kernel_size=2, stride=2),
        nn.Flatten(),
        nn.Linear(16 * 5 * 5, 120), nn.ReLU(),
        nn.Linear(120, 84), nn.ReLU(),
        nn.Linear(84, 10)
    )

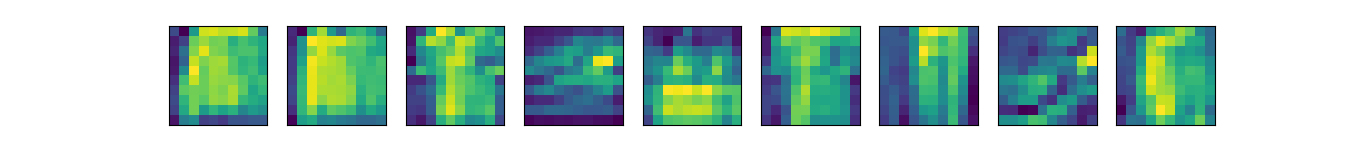

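Why does it look like this after replacing the sigmoid functions with ReLU?

But if I only replace the sigmoids after the convolutional layers with ReLU, the results are a bit better than the original.

After importing matplotlib.pyplot (e.g., import matplotlib.pyplot as plt), call plt.show() to display the figure.

Same here, bro. Did you solve it?

I solved it with this method.

If you haven't solved this problem yet, you could try searching for a solution online.

Without xavier_uniform, the test accuracy dropped from 0.82 to 0.64. I didn't expect the initialization alone to make such a big difference...

I'm not quite sure what you mean. Which question are you referring to, Q4?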
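For reference, the chapter's train_ch6 applies Xavier uniform initialization to every Linear and Conv2d weight via net.apply(init_weights); without it, those layers keep PyTorch's default initialization (kaiming_uniform_ with a=sqrt(5)). The sketch below reproduces that init function and compares the weight ranges on one layer, purely to show that the two schemes draw from different intervals; it does not explain the accuracy drop above.

```python
import torch
from torch import nn


def init_weights(m):
    # Xavier (Glorot) uniform: U(-b, b) with b = sqrt(6 / (fan_in + fan_out)).
    if isinstance(m, (nn.Linear, nn.Conv2d)):
        nn.init.xavier_uniform_(m.weight)


torch.manual_seed(0)
layer = nn.Linear(120, 84)
default_max = layer.weight.abs().max().item()  # PyTorch default init
layer.apply(init_weights)
xavier_max = layer.weight.abs().max().item()
bound = (6 / (120 + 84)) ** 0.5                 # Xavier bound for this layer
print(f"default max |w| = {default_max:.4f}, "
      f"xavier max |w| = {xavier_max:.4f}, xavier bound = {bound:.4f}")
```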