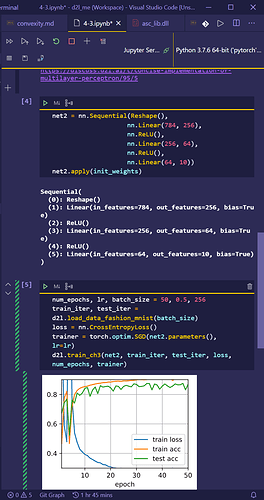

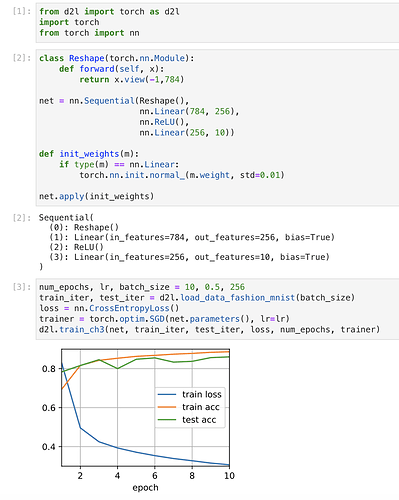

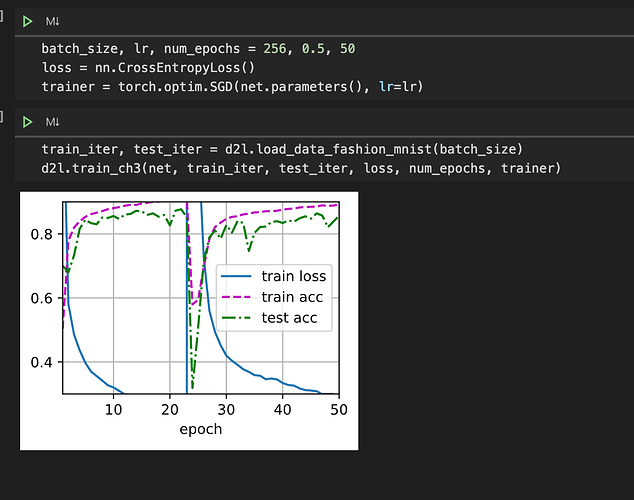

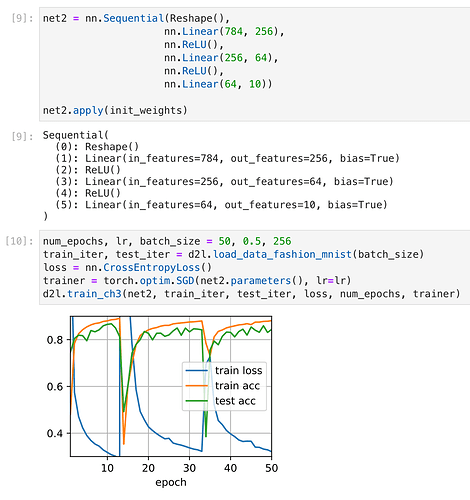

I was tinkering with the number of layers and epochs. For example:

    import torch
    from torch import nn
    from d2l import torch as d2l

    # MLP with three hidden layers for 28x28 Fashion-MNIST images
    net2 = nn.Sequential(nn.Flatten(),
                         nn.Linear(784, 256),
                         nn.ReLU(),
                         nn.Linear(256, 64),
                         nn.ReLU(),
                         nn.Linear(64, 32),
                         nn.ReLU(),
                         nn.Linear(32, 10))

    def init_weights(m):
        # Draw Linear weights from a normal distribution with std 0.01
        if isinstance(m, nn.Linear):
            torch.nn.init.normal_(m.weight, std=0.01)

    net2.apply(init_weights)

    batch_size, lr, num_epochs = 256, 0.1, 100
    loss = nn.CrossEntropyLoss()
    trainer = torch.optim.SGD(net2.parameters(), lr=lr)

    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
    d2l.train_ch3(net2, train_iter, test_iter, loss, num_epochs, trainer)

    print('Train Accuracy', d2l.evaluate_accuracy(net2, train_iter))
    print('Test Accuracy ', d2l.evaluate_accuracy(net2, test_iter))
    d2l.predict_ch3(net2, test_iter)

Train accuracy comes out at around 0.87. Has anyone reached a training accuracy close to 1?
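For anyone comparing numbers, here is a minimal sketch of how the accuracy could be checked in plain PyTorch, without the d2l helper. This is not the `d2l.evaluate_accuracy` implementation. The function `evaluate_accuracy` below and the random toy data are my own assumptions for illustration, and the printed value on random data means nothing by itself.

```python
import torch
from torch import nn


def evaluate_accuracy(net: nn.Module, data_iter) -> float:
    """Return the fraction of examples in data_iter that net classifies correctly."""
    net.eval()  # switch to evaluation mode (matters for dropout / batch norm)
    correct, total = 0, 0
    with torch.no_grad():  # no gradients needed for evaluation
        for X, y in data_iter:
            preds = net(X).argmax(dim=1)  # predicted class = largest logit
            correct += (preds == y).sum().item()
            total += y.numel()
    return correct / total


# Toy check on random data (hypothetical stand-in, not Fashion-MNIST)
torch.manual_seed(0)
X = torch.randn(8, 1, 28, 28)
y = torch.randint(0, 10, (8,))
toy_net = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))
acc = evaluate_accuracy(toy_net, [(X, y)])
print(f"accuracy on random data: {acc:.2f}")
```

Any iterable of `(X, y)` batches works as `data_iter`, so the same function should accept a `DataLoader` such as `train_iter`. Note that it leaves the network in eval mode, so call `net.train()` again before continuing training.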

(find some bugs and search on web)
