I noticed that 2.5.2 does’t have PyTorch, so I tried to convert it.

I used “.detach()” and “.clone()” to simulate “.copy()” in MXNET.

But I’m confused with differences from “.detach()” and “.clone()”.

Which one is more like to “.copy()” in MXNET?

from torch.autograd import Variable

x = torch.arange(4.0, requires_grad=True)

# make it as a Variable with a gradient taken.

x = torch.autograd.Variable(x, requires_grad=True)

y = x * x

y.backward(torch.ones(y.size()))

AttributeError: ‘Tensor’ object has no attribute ‘copy’

# detach

u = x.detach()

# replace :u = torch.autograd.Variable(u, requires_grad=True)

# make tensor autograd works

u.requires_grad()

v = u * u

v.backward(torch.ones(v.size()))

x.grad == u.grad

tensor([True, True, True, True])

# clone

u = x.clone()

u.requires_grad()

v = u * u

v.backward(torch.ones(v.size()))

x.grad == u.grad

tensor([True, True, True, True])

For more:

Links that I have searched:

2.5.4.

“Consequently d / a allows us to verify that the gradient is correct.”

I didn’t understand the meaning of d / a.

So I code as follow. But now, I’m more confused.

b = torch.randn(size=(1,), requires_grad=True)

b = b + 1000

# record the calculation

d = f(b)

d.backward()

b.grad == (d / b)

False

d tensor([1999.6416], grad_fn=)

b tensor([999.8208], grad_fn=)

t = b.grad

t

no return?

2.5.5.

The MXnet code of prediction mode haven’t mentioned.

I was wondering about the PyTorch’s code.

-

I’m not very clear. Maybe second derivative is more easy to overflow the scope of storages.

y.backward()

RuntimeError: Trying to backward through the graph a second time, but the buffers have already been freed. Specify retain_graph=True when calling backward the first time.

y = 2 * torch.dot(x, x)

y

y.backward(retain_graph=True)

y.backward(retain_graph=True)

no Error!

- Error happened!

# matrix

a = torch.randn(20, requires_grad=True).reshape(5, 4)

d = f(a)

d.backward()

RuntimeError: grad can be implicitly created only for scalar outputs

analyze:

source code:~.conda\envs\pytorch\lib\site-packages\torch\autograd_init_.py in _make_grads(outputs, grads)

rows:from 32 to 35 :

elif grad is None: # why grad is None? Does f(a) not support matrix?

if out.requires_grad:

if out.numel() != 1:

raise RuntimeError("grad can be implicitly created only for scalar outputs")

-

TODO:

-

I’m trying to import plot in 2.4, so I convert the “2-4.ipynb” to “two-four.py”.

But when I import it, it just happens as follow.

How to fix it?

from ..d2l import torch as d2l

from IPython import display

import numpy as np

from .two_four import plot

ImportError Traceback (most recent call last)

in

----> 1 from …d2l import torch as d2l

2 from IPython import display

3 import numpy as np

4 from .two_four import plot

ImportError: attempted relative import with no known parent package

TODO:

Can you explain what exactly is the doubt here?

To import -> from d2l import torch as d2l you first need to install the d2l package in your environment.

The first reply is my 2.5.2’s pytorch code. I use “detach” and “clone” to simulate what “copy” do in MXNET. But which one is best, “detach” or “clone”?

The second reply is about "the meaning of d / a " in 2.5.4. I tried another “b”.The return of “b.grad == (d / b)” is false rather than true. why?

The third reply is my answer to 2.5.7. I’m still working on how to import plot in 2.4.

After running pip install -U d2l -f https://d2l.ai/whl.html,

I can directly run from d2l import torch as d2l

Thanks

But I’m confused about the bug when I directly run the imtorch.py(rename from d2l/torch.py)

$ /usr/bin/env python "d:\onedrive\文档\read\d2l\d2l\imtorch.py"

Traceback (most recent call last):

File "d:\onedrive\文档\read\d2l\d2l\imtorch.py", line 22, in <module>

import torch

File "C:\ProgramData\Anaconda3\lib\site-packages\torch\__init__.py", line 81, in <module>

ctypes.CDLL(dll)

File "C:\ProgramData\Anaconda3\lib\ctypes\__init__.py", line 364, in __init__

self._handle = _dlopen(self._name, mode)

OSError: [WinError 126] The specified module could not be found

Answer to first question.

tensor.detach() creates a tensor that shares the same storage with tensor that does not require grad.

But tensor.clone() will also give you original tensor’s requires_grad attributes. It is basically an exact copy including the computation graph.

Use detach() to remove a tensor from computation graph and use clone to copy the tensor while still keeping the copy as a part of the computation graph it came from.

The second answer about "the meaning of d / a " in 2.5.4.

“a.grad == (d / a)” is true because if you see how d is calculate using f(a). It is basically scaling a by some constant factor k. And if you were to do differentiate such a function say function d=k*a with respect to a then you would get that k. Hence these are true and obviously it won’t hold for b because d is a function of which scales a and not b.

Answer to third question.

As i suggested earlier and you probably did it, you can simply pip install d2l to import torch from d2l.

If you want to import the specific rename imtorch.py just add this to the start of your code before making the import. ->

import sys

sys.path.insert(0, "../")

Let me know if these answers solve your issue.

Q1.

Does it mean that if I ‘clone’ a tensor or a Variable that has requires_grad attribute, then I don’t need to .requires_grad() for the new one?

Use detach() to remove a tensor? However, why can I still judge x.grad() == u.grad? “Remove” doesn’t mean that x will not exist? I think that x and u are just two different names for the same storage.

Can my code about 2.5.2’s Variable add to 2.5.2?

Q2.

I have understand that d is a function of which scales a. But what difference with f(b)?

Because, I think that f(b) = 2 * b.

- First, b(formal parameter) =2 * a (argument b).

- Then, not enter the loop. (b > 1000)

- Then, True for if, return b(formal parameter) which is 2 * b(argument)

Q3.

Thanks. I will try it next time. I have did pip install d2l

The specified module could not be found

Did the problem happen because the module doesn’t exist in my sys.path?

Ans1.

Yes we don’t use Variable in PyTorch now. We can use Tensor to do everything a variable did earlier with the latest version.

Ans2.

Yes, f(b) will be 2*b and if you change it to the following you will get True.

b = torch.randn(size=(1,), requires_grad=True)

d=f(b)

d.backward()

b.grad == d/b

>>>True

Ans3. Just uninstall the pip version and run python setup.py develop in your root d2l-en directory for installing the library.

Ans3.

Just run this python setup.py develop in your repository.

Q2.

b = b + 1000

Why does it make False?

I found that print(b.grad) is None?

Why did it happen?

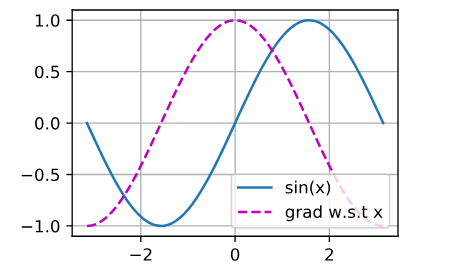

my solution to question 5

import numpy as np

from d2l import torch as d2l

x = np.linspace(- np.pi,np.pi,100)

x = torch.tensor(x, requires_grad=True)

y = torch.sin(x)

for i in range(100):

y[i].backward(retain_graph = True)

d2l.plot(x.detach(),(y.detach(),x.grad),legend = ((‘sin(x)’,“grad w.s.t x”)))

looks dummy since I compute the grad 100 times, is there any better way?

I don’t quite understand 2.5.2.

My understanding is that by default the framework will convert the actual output matrix into a vector based on a “gradient vector” that we passed in. The description of this “gradient vector” is

which specifies the gradient of the differentiated function w.r.t

self.

What does it mean? Does “gradient of differentiated function” mean “second order gradient”?

My answers to the questions: please point out if I am misunderstood anything

- Why is the second derivative much more expensive to compute than the first derivative?

Because instead of following the original computation graph, we need to construct a new one that corresponds to the calculation of first-order gradient?

- After running the function for backpropagation, immediately run it again and see what happens.

RuntimeError: Trying to backward through the graph a second time, but the saved intermediate results have already been freed. Specify retain_graph=True when calling backward the first time.

- In the control flow example where we calculate the derivative of

dwith respect toa, what would happen if we changed the variableato a random vector or matrix. At this point, the result of the calculationf(a)is no longer a scalar. What happens to the result? How do we analyze this?

Directly changing would give the “blah blah … only scalar output” which is what 2.5.2 is talking about. I changed the code to

a = torch.randn(size=(3,), requires_grad=True)

d = f(a)

d.sum().backward()

and a.grad == d / a still gives true. This is because in the function, there is no cross-element operation. So each element of the vector is independent and doesn’t affect other element’s differentiation.

- Redesign an example of finding the gradient of the control flow. Run and analyze the result.

SKIP

- Let $f(x) = \sin(x)$. Plot $f(x)$ and $\frac{df(x)}{dx}$, where the latter is computed without exploiting that $f’(x) = \cos(x)$.

import numpy as np

from d2l import torch as d2l

x = np.linspace(- np.pi,np.pi,100)

x = torch.tensor(x, requires_grad=True)

y = torch.sin(x)

y.sum().backward()

d2l.plot(x.detach(),(y.detach(),x.grad),legend = (('sin(x)',"grad w.s.t x")))

Hi @SONG_PAN, this is a first order gradient. The gradient is only available after we differentiate the function. ![]()

Hi, May I confirm my understanding about the auto differentiation of Python control flow?

I assume, as long as the Python control flow is established by functions and variables from Pytorch, then the auto differentiation is doable. Am I right on this? No other functions should be involved such as sin() from math package(have to use sin() from Pytorch instead if I want auto differentiation).

I guess you can replace

for i in range(100):

y[i].backward(retain_graph = True)

with the following sentence:

y.sum().backward(retain_graph = True)

Anyone know how the backpropagation of CNN and RNN works? Are there any step by step tutorials to show the derivation? Thanks!