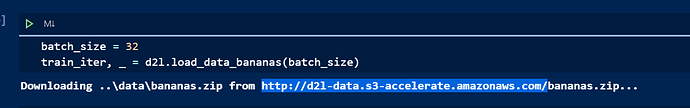

Is there some way to avoid downloading bananas.zip again, since it was already downloaded in the last section?

Or, in other words, can the code read from the local directory data\.. first?

Where do you run the code?

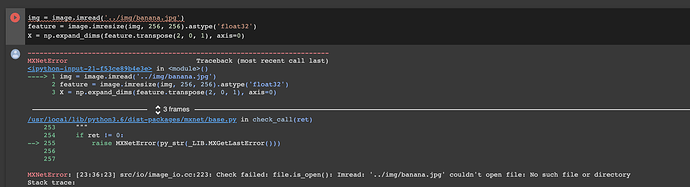

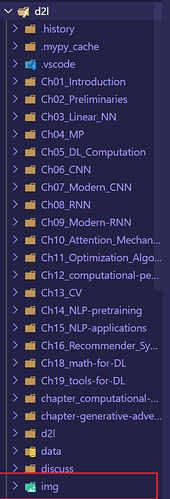

You need to download img from github.com/d2l-ai/d2l-en.

Then put it like this.
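A generic way to skip the second download is to check for the file on disk before fetching it. The sketch below uses only the Python standard library. `fetch_if_missing` is a hypothetical helper name, not part of d2l, and the local path you pass in is an assumption you should adapt to your own data directory.

```python
import os
import urllib.request


def fetch_if_missing(url, path):
    """Hypothetical helper: download `url` to `path` only if `path` is not already on disk."""
    if os.path.exists(path):
        # A local copy already exists (e.g. from an earlier section), so reuse it.
        return path
    # Create the parent directory if needed, then download once.
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    urllib.request.urlretrieve(url, path)
    return path
```

Calling it a second time with the same `path` returns immediately without touching the network.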

@manuel-arno-korfmann

Hi, please help me: where does this formula come from?

$$1\times 2+(3-1)+(3-1)=6$$

Hi @iotsharing_dotcom, I suspect you mean 13.7.1.4, right? (Please state the referenced section next time ;).) This formula comes from 7.3. Padding and Stride — Dive into Deep Learning 1.0.3 documentation.
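For the arithmetic itself, the receptive field can be computed by walking backward from a single output unit: a layer with kernel size k and stride s turns a span of r units into (r − 1)·s + k units of its input. The sketch below assumes the down-sampling block is two 3×3 convolutions (stride 1, padding 1) followed by a 2×2 max-pooling layer with stride 2. Under that assumption the pooling layer gives the 1×2 term and each convolution adds 3 − 1; padding does not change the size. `receptive_field` is a hypothetical helper, not part of d2l.

```python
def receptive_field(layers):
    """Receptive field (in input units) of one output unit.

    `layers` lists (kernel_size, stride) pairs ordered from input to output.
    Walking backward, each layer maps a span of r units in its output to
    (r - 1) * stride + kernel_size units in its input.
    """
    r = 1
    for kernel_size, stride in reversed(layers):
        r = (r - 1) * stride + kernel_size
    return r


# Assumed block: 3x3 conv (stride 1), 3x3 conv (stride 1), 2x2 max-pool (stride 2).
block = [(3, 1), (3, 1), (2, 2)]
print(receptive_field(block))  # 6
```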

Hi, why do we need cls_preds in npx.multibox_target(anchors, Y, cls_preds.transpose(0, 2, 1)) when we can already get bbox_labels, bbox_masks, and cls_labels from the anchors produced by net and the ground truths Y?

Hi @rezahabibi96, fantastic question! First, let’s clarify what cls_preds refers to. Basically, cls_preds represents the (classes + 1) outputs of each anchor box. Hence, it connects the anchors and the true label Y, and bbox_masks derive from this connection. Check the multibox_target documentation for more details.

Hi, thank you! Yes, I know cls_preds contains the (num_classes + background) scores for each generated anchor box. If I am not mistaken, every anchor box is compared with every ground truth using the Jaccard index, right?
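The pairwise comparison described here can be sketched in NumPy as a matrix of Jaccard indices (IoU), one entry per anchor/ground-truth pair. This assumes boxes in corner format (xmin, ymin, xmax, ymax). It is an illustration only, not the code inside npx.multibox_target, and `box_iou` is a hypothetical name.

```python
import numpy as np


def _area(boxes):
    # Width * height of each corner-format box.
    return (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])


def box_iou(anchors, gts):
    """Pairwise Jaccard index between anchors (n, 4) and ground truths (m, 4).

    Returns an (n, m) array whose entry [i, j] is IoU(anchor i, ground truth j).
    """
    # Broadcast to get the intersection rectangle of every (anchor, gt) pair.
    upper_left = np.maximum(anchors[:, None, :2], gts[None, :, :2])
    lower_right = np.minimum(anchors[:, None, 2:], gts[None, :, 2:])
    inter_wh = np.clip(lower_right - upper_left, 0, None)  # 0 if no overlap
    inter = inter_wh[..., 0] * inter_wh[..., 1]
    union = _area(anchors)[:, None] + _area(gts)[None, :] - inter
    return inter / union


anchors = np.array([[0.0, 0.0, 2.0, 2.0], [1.0, 1.0, 3.0, 3.0]])
gts = np.array([[0.0, 0.0, 2.0, 2.0]])
print(box_iou(anchors, gts))
```

Here the first anchor coincides with the ground truth (IoU 1), while the second shares a 1×1 corner with it (IoU 1/7).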

Let’s say anchor Ai is matched with GT Bj and satisfies a certain threshold; then Ai is labeled with Bj’s label and given 1 in bbox_mask (positive).

And let’s also say anchor Am is matched with GT Bn but does not satisfy the threshold, so Am is labeled 0 (background, i.e., containing no object) and given 0 in bbox_mask (negative). So where does cls_preds come into play here?
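The labeling described in this question can be sketched from a precomputed IoU matrix alone. Note that this sketch never touches cls_preds. The threshold of 0.5, the convention that class c becomes label c + 1 (0 is background), and the name `label_anchors` are all assumptions for illustration, not the library’s implementation.

```python
import numpy as np


def label_anchors(iou, gt_classes, threshold=0.5):
    """Assign a class label and an offset mask to each anchor (hypothetical helper).

    iou:        (num_anchors, num_gts) Jaccard matrix
    gt_classes: (num_gts,) integer class ids starting at 0
    Returns cls_labels (0 = background, c + 1 = object class c) and
    bbox_masks with 4 entries per anchor (1 = positive, 0 = negative).
    """
    best_gt = iou.argmax(axis=1)   # closest ground truth for each anchor
    best_iou = iou.max(axis=1)
    positive = best_iou >= threshold
    cls_labels = np.where(positive, gt_classes[best_gt] + 1, 0)
    # Repeat each anchor's 0/1 flag for its 4 offset values.
    bbox_masks = np.repeat(positive.astype(np.float32), 4)
    return cls_labels, bbox_masks


iou = np.array([[0.7, 0.1],
                [0.2, 0.3],
                [0.05, 0.6]])
gt_classes = np.array([0, 2])
cls_labels, bbox_masks = label_anchors(iou, gt_classes)
print(cls_labels)  # [1 0 3]
print(bbox_masks)
```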

From my understanding, cls_preds is used to compute the confidence loss against cls_labels, but I do not see why it is used here in multibox_target. It feels like there is a hole in my understanding. Sorry if I am asking too much, and thank you for your patience.

We don’t calculate IoU for every anchor. From the original SSD paper: “Our SSD is very similar to the region proposal network (RPN) in Faster R-CNN in that we also use a fixed set of (default) boxes for prediction, similar to the anchor boxes in the RPN.” Faster R-CNN uses non-maximum suppression to remove similar anchor box results.

cls_preds is used to calculate bbox_masks, which remove negative anchor boxes and padding anchor boxes from the loss calculation.
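To see how a 0/1 mask removes anchors from the loss, here is a minimal NumPy sketch, assuming an L1 loss on the offsets averaged over all entries. Multiplying both predictions and targets by the mask makes every masked-out entry contribute zero. `masked_l1_offset_loss` is a hypothetical name.

```python
import numpy as np


def masked_l1_offset_loss(bbox_preds, bbox_labels, bbox_masks):
    """Mean L1 offset loss where masked-out entries contribute zero.

    All three arrays are flat with num_anchors * 4 entries. Negative
    (background) and padding anchors have mask 0, so their difference
    is zeroed before taking the absolute value.
    """
    return np.abs(bbox_preds * bbox_masks - bbox_labels * bbox_masks).mean()


preds = np.array([0.5, 0.5, 0.5, 0.5, 9.0, 9.0, 9.0, 9.0])
labels = np.zeros(8)
masks = np.array([1, 1, 1, 1, 0, 0, 0, 0], dtype=np.float32)
print(masked_l1_offset_loss(preds, labels, masks))
```

The second anchor’s large offset errors (9.0) do not affect the result because its mask is 0.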

Don’t be sorry for asking a great question! You are always welcome! If you are still confused, let me know. (I also highly suggest reading the original paper.)

@goldpiggy wrote:

> We don’t calculate IoU for every anchor.

Hi, I have read the paper, and yes, you are right; sorry for my misunderstanding: they don’t calculate IoU for every anchor. What is right is that “for each GT, they find the best anchor using Jaccard”, as stated under Matching Strategy.
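The Matching Strategy in the SSD paper has two steps: each ground truth is first matched to the default box with the best Jaccard overlap, and default boxes are then matched to any ground truth whose overlap exceeds a threshold (0.5 in the paper). A minimal NumPy sketch, assuming a precomputed IoU matrix; `match_anchors` is a hypothetical name, and conflicts where two ground truths share the same best anchor are not handled here.

```python
import numpy as np


def match_anchors(iou, threshold=0.5):
    """Return, for each anchor, the index of its matched ground truth or -1.

    iou: (num_anchors, num_gts) Jaccard matrix.
    """
    num_anchors, num_gts = iou.shape
    matched = np.full(num_anchors, -1)
    # Threshold step: anchors whose best overlap reaches the threshold.
    best_gt = iou.argmax(axis=1)
    above = iou.max(axis=1) >= threshold
    matched[above] = best_gt[above]
    # Best-anchor step (applied last so it always wins): every ground truth
    # keeps the single anchor it overlaps most.
    best_anchor = iou.argmax(axis=0)
    matched[best_anchor] = np.arange(num_gts)
    return matched


iou = np.array([[0.7, 0.1],
                [0.2, 0.3],
                [0.05, 0.45]])
print(match_anchors(iou))
```

The third anchor is matched to the second ground truth even though 0.45 is below the threshold, because it is that ground truth’s best anchor.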

And for cls_preds: because most anchors are negative, this would surely affect how the model learns (it would tend to predict negative boxes instead of positive ones), so SSD discards most of the negative anchors to mitigate this. So here cls_preds is used in multibox_target to compute bbox_masks, as you said previously; this is also described under Hard negative mining in the paper. Thank you so much @goldpiggy. Now it is clear.
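In hard negative mining as described in the SSD paper, negatives are sorted by their confidence loss and only the top ones are kept, so that negatives to positives is at most 3:1. The confidence loss depends on the class predictions, which is where scores like cls_preds would enter. A minimal NumPy sketch, assuming per-anchor confidence losses are already computed; it illustrates the idea and is not the code inside npx.multibox_target. `hard_negative_mask` is a hypothetical name.

```python
import numpy as np


def hard_negative_mask(conf_loss, positive, neg_pos_ratio=3):
    """Keep all positives plus the highest-loss negatives, at most neg_pos_ratio : 1.

    conf_loss: (num_anchors,) classification loss of each anchor
    positive:  (num_anchors,) boolean, True for anchors matched to an object
    Returns a boolean mask of anchors that enter the classification loss.
    """
    num_neg = min(neg_pos_ratio * int(positive.sum()), int((~positive).sum()))
    # Rank negatives by loss; positives get -inf so they sort last.
    neg_loss = np.where(positive, -np.inf, conf_loss)
    hardest = np.argsort(-neg_loss)[:num_neg]
    keep = positive.copy()
    keep[hardest] = True
    return keep


conf_loss = np.array([0.2, 2.0, 0.1, 1.5, 0.3, 0.9, 0.05])
positive = np.array([True, False, False, False, False, False, False])
print(hard_negative_mask(conf_loss, positive))
```

With one positive anchor, the three negatives with the largest losses (2.0, 1.5, and 0.9) are kept and the rest are ignored.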

I will surely be asking many questions in the future; please don’t get tired of answering them.

But why is the mask applied to the offset loss and not to the classification loss?

Second, I think there is enough information to compute such a mask from the anchors and Y, right?

@goldpiggy

Hi!

Can you guide me on how I can put my own images instead of the example images in this code?

Upload your image to Google Drive, then mount Google Drive in Google Colab with this code:

```python
from google.colab import drive
import os

# Mount Google Drive; you can then see it by clicking the folder icon on the left side of Colab.
drive.mount("/content/gdrive")

# Move the current directory to your folder; replace this with your own path.
os.chdir("/content/gdrive/MyDrive/Colab Notebooks/")
```

Hope you can do it.