self.P[:, :, 1::2] = torch.cos(X) in section 10.6.3 breaks if encoding_dim is odd number.

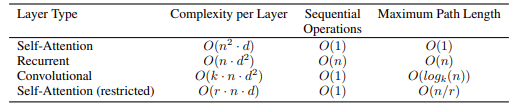

This chapter says that the the maximum path length of CNN is \mathcal{O}(n/k). I think it should be \mathcal{O}(log_k n)(as below)

This blog also talks about the maximum path length:https://medium.com/analytics-vidhya/transformer-vs-rnn-and-cnn-18eeefa3602b

@Zhaowei_Wang O(log_k(n)) is the case of dilated convolutions, while the chapter discusses regular convolutions.

According to the description " Since the queries, keys, and values come from the same place, this performs self-attention", maybe the formula 10.6.1 should be y_i = f(x_i, (x_1, y_1), (x_2, y_2), …)? In my opinion, authors may make mistakes here.

I think you are correct.This is very likely author makes a mistake here. CNN can be regarded as a K-ary tree, where the number of leaf nodes is n $K^h=n$. So the maximum path is $O(log_k(n))$.

@chgwan In Fig. 10.6.1, we need a path of length 4 (hence 4 convolutional layers) so that the last (5th) feature in the last layer can have a route to the first feature x_1. I guess the exact formula is math.ceil((n-1) / (math.floor(k/2))), which is O(n/k).

which the term “parallel computation” in this post means? does it means that all tokens in a sequence is computed at one?

I wonder why positional encodings have to be added into X? Why not concatenation? And why it still works with addition?

but I think it’s floor( n/k). eg. floor(5/3)=2, floor(7/3)=3, but it doesn’t work if n is even while k is odd