https://d2l.ai/chapter_natural-language-processing-pretraining/word2vec-pretraining.html

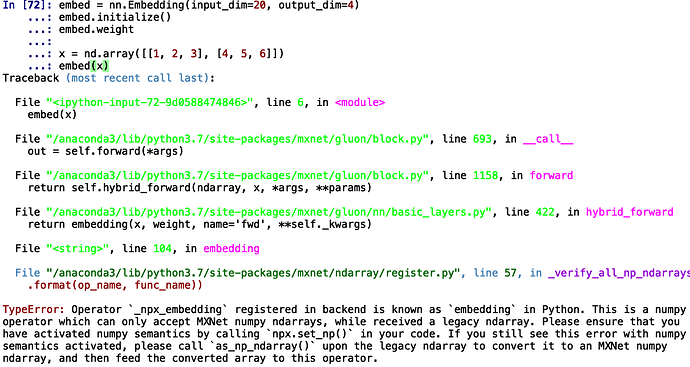

I don't know exactly what this means. When I pass a list, it tells me to convert it to an ndarray, but after I convert it to an ndarray, I get the error shown in the picture. I'd appreciate any reply.

I solved this by adding ‘as_nd_nparray’ after ‘np.array(…)’, but why can't a NumPy array be used directly as input?

I have two questions I'd like help with.

When I run this notebook in an mxnet-gpu conda env, the training result is:

loss 0.371, 80766.9 tokens/sec on gpu(0)

When I run the same notebook in a PyTorch conda env, I get:

loss 0.373, 279056.6 tokens/sec on cuda:0

The hyperparameters (and CUDA) are the same, and I don't know why the speed is so different. How can I improve the training speed when using MXNet on GPU?

I followed the instructions in Exercise 1 and set sparse_grad=True in nn.Embedding:

net.add(nn.Embedding(input_dim=len(vocab), output_dim=embed_size, sparse_grad=True),

nn.Embedding(input_dim=len(vocab), output_dim=embed_size, sparse_grad=True))

but got a ValueError:

----> 2 net.initialize(ctx=device, force_reinit=True)

…

ValueError: mxnet.numpy.zeros does not support stype = row_sparse

Thanks in advance

Hi @porrige, great question! It is pretty common for one library to run much faster than another. This may be mainly because the faster library has optimizations applied to some of its operators.

I don't quite understand why the i-th row of the weight matrix after training can be treated as the embedding of the i-th word in the vocabulary. Could anyone give some deeper insight?
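
One way to look at it, as a rough sketch rather than the notebook's actual code: an embedding layer behaves like multiplying a one-hot vector for word i by the weight matrix, and that product simply picks out row i. So the only parameters the model can use to represent word i are the entries of row i, and training adjusts that row whenever word i appears in a batch. The toy sizes and the names `W`, `one_hot`, `via_matmul`, and `via_lookup` below are made up for illustration, and plain NumPy stands in for the framework's embedding layer.

```python
import numpy as np

# Hypothetical toy sizes, not the ones used in the notebook.
vocab_size, embed_size = 5, 3

# W stands in for the weight matrix of an embedding layer:
# one row per word in the vocabulary.
rng = np.random.default_rng(0)
W = rng.normal(size=(vocab_size, embed_size))

i = 2  # index of some word in the vocabulary

# One-hot encoding of word i.
one_hot = np.zeros(vocab_size)
one_hot[i] = 1.0

# Multiplying the one-hot vector by W selects exactly row i ...
via_matmul = one_hot @ W
# ... which is what an index lookup returns directly.
via_lookup = W[i]

assert np.allclose(via_matmul, via_lookup)
```

Since word i only ever reaches the loss through row i, it seems reasonable to read that row as the learned vector for the word.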