http://d2l.ai/chapter_convolutional-neural-networks/pooling.html

Hi, this is a great chapter on pooling, but I think it could be made more comprehensive by also stating the explicit formula for the output dimensions just as was done for convolutions, padding and strides. What do you think?

Hi @wilfreddesert, we would like to hear more about your feedback. Could you post a suggestion/PR on how we should improve this? Please feel free to contribute!

This probably wasn’t done b/c pooling simply collapses the channel dimension

Hi! Thank you for this great book. I have a question from this section, namely from the third paragraph, where it reads:

For instance, if we take the image X with a sharp delineation between black and white and shift the whole image by one pixel to the right, i.e., Z[i, j] = X[i, j + 1], then the output for the new image Z might be vastly different.

Shouldn’t it be one pixel to the left? If we want to shift the whole image one pixel to the right, then the correct equation should be Z[i, j] = X[i, j - 1], right?
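A quick way to check the direction is to apply both index rules to a single row. This is only a sketch: it assumes columns are indexed left to right, and it fills the vacated pixel with 0, which is a choice made here and not something the book specifies. The function names are hypothetical.

```python
def shift_as_in_quote(row, fill=0):
    """Z[j] = X[j + 1]: each output pixel reads from its right-hand neighbour."""
    return row[1:] + [fill]


def shift_opposite(row, fill=0):
    """Z[j] = X[j - 1]: each output pixel reads from its left-hand neighbour."""
    return [fill] + row[:-1]


X_row = [0, 0, 1, 0, 0]             # a single bright pixel at index 2
Z_plus = shift_as_in_quote(X_row)   # rule from the quoted sentence
Z_minus = shift_opposite(X_row)     # rule proposed in the question

print("Z[j] = X[j + 1]:", Z_plus, "bright pixel at index", Z_plus.index(1))
print("Z[j] = X[j - 1]:", Z_minus, "bright pixel at index", Z_minus.index(1))
```

Under these assumptions, Z[i, j] = X[i, j + 1] moves the content one pixel to the left (equivalently, it moves the viewing window one pixel to the right), while Z[i, j] = X[i, j - 1] moves the content to the right.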

This is not true. The pooling operation is applied to each channel separately, resulting in a tensor with the same number of channels as the input tensor, as described in the book.

At each step of the pooling operation, the information contained in the pooling window (i.e. the values of all the pixels inside the window) is “collapsed” in a single pixel; this is similar to what a convolution layer does, with a single difference: the pooling works on each channel separately, thus preserving the number of channels in the output (while the convolution layer with a 3-dimensional kernel sums over all the channels and “collapses” the channel dimension in the output). Thus, the formula you’re looking for is the same formula that was introduced when dealing with a convolutional layer, but replacing the kernel shape with the shape of the pooling window and keeping the channel dimension.
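The formula described above can be sketched as a small helper. It assumes per-side padding and a stride that defaults to the window size, which is how PyTorch's pooling layers behave; the book's convolution formula writes the total padding instead, so `2 * pad` plays that role here. The function name `pool_output_shape` is hypothetical.

```python
def pool_output_shape(c, h, w, window, padding=(0, 0), stride=None):
    """Output shape (c, out_h, out_w) of a 2D pooling layer.

    window  = (p_h, p_w)       pooling window shape
    padding = (pad_h, pad_w)   rows/columns added on EACH side (assumption)
    stride  = (s_h, s_w)       defaults to the window shape (assumption)
    """
    p_h, p_w = window
    pad_h, pad_w = padding
    s_h, s_w = stride if stride is not None else window
    out_h = (h + 2 * pad_h - p_h) // s_h + 1
    out_w = (w + 2 * pad_w - p_w) // s_w + 1
    # Pooling acts on every channel separately, so c is unchanged.
    return (c, out_h, out_w)


# 4x4 input, 3x3 window, padding 1, stride 2
print(pool_output_shape(1, 4, 4, window=(3, 3), padding=(1, 1), stride=(2, 2)))
# 4x4 input, 3x3 window, default stride equal to the window
print(pool_output_shape(1, 4, 4, window=(3, 3)))
```

The only difference from the convolution case is the first entry: the channel count passes through untouched.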

Yes, I’m aware. “Reduce” might have been better semantics than “collapse”. Probably good that we’re clarifying this however.

Hi,

My opinion, just for reference:

I think what “shift the whole image” means here is “shift the image-capturing window” rather than the image itself, since shifting the image itself by some pixels is somewhat hard to explain or imagine. If we did shift the image itself, what would we do for the blank pixels?

Exercises and my answers

-

Can you implement average pooling as a special case of a convolution layer? If so, do it.

-

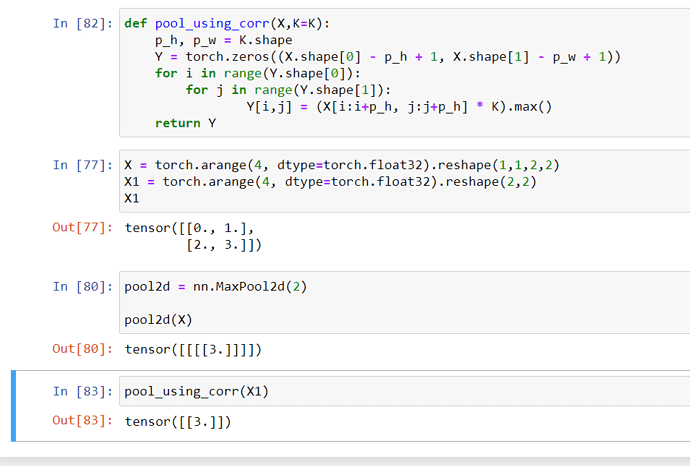

Can you implement maximum pooling as a special case of a convolution layer? If so, do it.

- It can be done, but we need to modify the convolution layer or find a special kernel. I couldn't, so I modified the operation itself; not the right answer, I guess.

- What is the computational cost of the pooling layer? Assume that the input to the pooling layer is of size c×h×w, the pooling window has a shape of ph×pw with a padding of (ph, pw) and a stride of (sh, sw).

- I expect it to be (h - ph - sh)/sh * (w - pw - sw)/sw * len(c) * time taken to find max value.

- Why do you expect maximum pooling and average pooling to work differently?

- because max pooling will give maximum value from the neighbors while average pooling would consider all the neighbors. I expect max pooling to be faster.

- Do we need a separate minimum pooling layer? Can you replace it with another operation?

- This seems like a trick question, but one way is to multiply X by -1 and then do max pooling.

- Is there another operation between average and maximum pooling that you could consider (hint: recall the softmax)? Why might it not be so popular?

- Taking the average of the log and then computing the maximum value divided by the sum of the logs. It might be computationally intensive.
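For the average-pooling and minimum-pooling exercises, here is a minimal PyTorch sketch, not an official solution. It assumes non-overlapping windows (stride equal to the window size) and no padding; `avg_pool_as_conv` is a hypothetical helper name. The convolution uses one constant kernel per channel with `groups` set to the channel count, so channels are not mixed.

```python
import torch
import torch.nn.functional as F


def avg_pool_as_conv(X, p_h, p_w):
    """Average pooling written as a grouped convolution.

    X has shape (batch, channels, height, width).
    """
    c = X.shape[1]
    # One (p_h x p_w) kernel per channel, every entry equal to 1 / (p_h * p_w).
    weight = torch.full((c, 1, p_h, p_w), 1.0 / (p_h * p_w), dtype=X.dtype)
    # groups=c applies each kernel to its own channel only.
    return F.conv2d(X, weight, stride=(p_h, p_w), groups=c)


X = torch.arange(32, dtype=torch.float32).reshape(1, 2, 4, 4)

avg_conv = avg_pool_as_conv(X, 2, 2)
avg_ref = F.avg_pool2d(X, kernel_size=2)
print(torch.allclose(avg_conv, avg_ref))

# Minimum pooling via negation: min(x) = -max(-x)
min_pool = -F.max_pool2d(-X, kernel_size=2)
print(min_pool[0, 0])
```

Maximum pooling has no equivalent fixed kernel, because taking a maximum is not a linear operation.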

When do we use max pooling or avg pooling?

@thainq If our current layer has sparse features with sharp regions, max pooling will likely retain the patterns, whereas averaging will blur them.

For example, how do we know whether the feature map in the i-th layer is sparse or not?

@thainq By visualizing hidden features and filters, for example.

But if your question is how to know beforehand, my assumption is that it directly depends on data. How about experimenting with (Fashion)MNIST by first training LeNet and plotting learned representations? Try something like this:

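    import matplotlib.pyplot as plt
    import torch

    net = ...    # trained LeNet or another CNN, iterable layer by layer (e.g. nn.Sequential)
    image = ...  # your image sample, shape (1, channels, height, width)

    fig, axes = plt.subplots(ncols=len(net), squeeze=False)
    with torch.no_grad():
        for i, layer in enumerate(net):
            image = layer(image)
            # imshow needs a 2D array: take the first channel of 4D outputs,
            # and show flattened (1, n) outputs as a single row.
            feature = image[0, 0] if image.dim() == 4 else image
            axes[0, i].imshow(feature.numpy())
    plt.show()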

I have some trouble understanding the last exercise about using the softmax operator as a pooling operator. Softmax is a function going from R^n to R^n, whilst pooling operators spit out something in R. I believe this question is implicitly asking about doing a max after a softmax, but I don’t want to skip steps. Could you elaborate on what you mean by softmax here? In the usual sense, it can’t be used to do any sort of pooling; if anything, it’s more like a convolution.
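One possible reading of the hint, offered as an assumption and not as the book's intended answer, is to use the softmax values as weights and return the weighted sum of the window, which is a single number. The parameter `beta` and the name `softmax_pool` are hypothetical. With `beta = 0` the weights are uniform and the result is the average; as `beta` grows, the result approaches the maximum.

```python
import math


def softmax_pool(window, beta=1.0):
    """Softmax-weighted average of the values in one pooling window."""
    m = max(window)
    # Subtract the maximum before exponentiating for numerical stability.
    weights = [math.exp(beta * (x - m)) for x in window]
    total = sum(weights)
    return sum(w * x for w, x in zip(weights, window)) / total


window = [1.0, 2.0, 3.0, 4.0]
avg_like = softmax_pool(window, beta=0.0)    # uniform weights: plain average
soft = softmax_pool(window, beta=1.0)        # somewhere in between
max_like = softmax_pool(window, beta=50.0)   # weights concentrate on the largest value

print(avg_like, soft, max_like)
```

Each window needs an exponential per element plus a normalization, which is noticeably more work than a comparison or a sum; that may be one reason it is less popular.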

Returning to the problem of edge detection, we use the output of the convolutional layer as input for 2 × 2 max-pooling. Denote by X the input of the convolutional layer and Y the pooling layer output. Regardless of whether or not the values of X[i, j], X[i, j + 1], X[i+1, j] and X[i+1, j + 1] are different, the pooling layer always outputs Y[i, j] = 1. That is to say, using the 2 × 2 max-pooling layer, we can still detect if the pattern recognized by the convolutional layer moves no more than one element in height or width.

I’m completely unable to comprehend this paragraph. Any guidance or clarification would be greatly appreciated.
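One way to read the quoted paragraph is to run it on a tiny example. The sketch below uses plain Python lists, a 1 × 2 edge kernel [1, -1], and 2 × 2 max-pooling with stride 1; the stride, the image size, and the helper names are assumptions made here for illustration. The image Z is X with its edge moved one pixel to the right.

```python
def corr2d(X, K):
    """2D cross-correlation with stride 1 and no padding."""
    k_h, k_w = len(K), len(K[0])
    return [[sum(X[i + a][j + b] * K[a][b]
                 for a in range(k_h) for b in range(k_w))
             for j in range(len(X[0]) - k_w + 1)]
            for i in range(len(X) - k_h + 1)]


def max_pool2d(X, p_h, p_w):
    """Max-pooling with stride 1 and no padding."""
    return [[max(X[i + a][j + b] for a in range(p_h) for b in range(p_w))
             for j in range(len(X[0]) - p_w + 1)]
            for i in range(len(X) - p_h + 1)]


def make_image(edge_col, h=4, w=8):
    """White (1) left of edge_col, black (0) from edge_col onwards."""
    return [[1 if j < edge_col else 0 for j in range(w)] for _ in range(h)]


K = [[1, -1]]              # responds with 1 at a white-to-black edge
X = make_image(3)
Z = make_image(4)          # same edge, one pixel further right

conv_X, conv_Z = corr2d(X, K), corr2d(Z, K)
pool_X, pool_Z = max_pool2d(conv_X, 2, 2), max_pool2d(conv_Z, 2, 2)

print("conv row for X:", conv_X[0])
print("conv row for Z:", conv_Z[0])
print("pool row for X:", pool_X[0])
print("pool row for Z:", pool_Z[0])
```

At column 2 the convolution output differs between the two images (1 versus 0), but the pooled output is 1 for both. In that sense the pooled feature still reports the edge after it has moved by one pixel.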

Exercise 3

- max(a,b) = ReLU(b-a) + ReLU(a) - ReLU(-a)
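The identity can be checked numerically. The sketch below also folds it over a window to obtain the window maximum, on the assumption that this is how it is meant to be applied to the max-pooling exercise; the helper names are hypothetical.

```python
from functools import reduce


def relu(x):
    return max(x, 0.0)


def max_via_relu(a, b):
    # ReLU(a) - ReLU(-a) equals a, and ReLU(b - a) adds the excess of b over a.
    return relu(b - a) + relu(a) - relu(-a)


pairs = [(-2.0, 3.0), (3.0, -2.0), (-1.5, -4.0), (0.0, 0.0), (2.5, 2.5)]
checks = [max_via_relu(a, b) == max(a, b) for a, b in pairs]
print(all(checks))

# Maximum of a whole pooling window by applying the pairwise rule repeatedly
window_max = reduce(max_via_relu, [1.0, 4.0, 2.0, 3.0])
print(window_max)
```

Since each pairwise maximum only needs subtractions and ReLUs, a window maximum can be built from linear maps and ReLU activations, at the cost of several layers per window.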