http://d2l.ai/chapter_convolutional-neural-networks/channels.html

It would be nice to have some discussion about using other color representations like HSV/HSL. Would it help to reduce the memory usage by eliminating one dimension of the kernel if we don’t have to aggregate RGB channels?

Hey @lregistros, great question! Actually, RGB and HSV/HSL are related by a well-defined conversion (https://en.wikipedia.org/wiki/HSL_and_HSV#To_RGB), so it should be easy to implement.
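Here is a minimal sketch of that conversion using Python's standard-library `colorsys` module. It assumes pixel values scaled to [0, 1], and the tiny 2×2 image is made-up data. Note that HSV, like HSL, still has three components per pixel. The conversion alone therefore would not remove the input-channel dimension of the kernel, which would still need c_i = 3.

```python
import colorsys

# A tiny made-up 2x2 RGB image with values in [0, 1], stored as rows of (r, g, b) pixels.
image_rgb = [
    [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    [(0.0, 0.0, 1.0), (0.5, 0.5, 0.5)],
]

# colorsys converts one pixel at a time, returning an (h, s, v) tuple.
image_hsv = [[colorsys.rgb_to_hsv(*px) for px in row] for row in image_rgb]

# Number of channels per pixel before and after the conversion.
channels_rgb = len(image_rgb[0][0])
channels_hsv = len(image_hsv[0][0])
print(channels_rgb, channels_hsv)  # 3 3
print(image_hsv[0][0])             # (0.0, 1.0, 1.0) for pure red
```

Any memory saving would have to come from dropping or merging a channel after the conversion, which is a modeling choice and not something the color space gives you for free.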

This part seemed so tough to grasp! XO

Exercises

- Assume that we have two convolution kernels of size k_1 and k_2, respectively (with no nonlinearity in between).
    - Prove that the result of the operation can be expressed by a single convolution.
        - Not sure.
        - Tried to code it, unsuccessfully.
    - What is the dimensionality of the equivalent single convolution?
        - Don't know.
    - Is the converse true?
        - Don't know.

-

Assume an input of shape c_i\times h\times w and a convolution kernel of shape c_o\times c_i\times k_h\times k_w, padding of (p_h, p_w), and stride of (s_h, s_w).

-

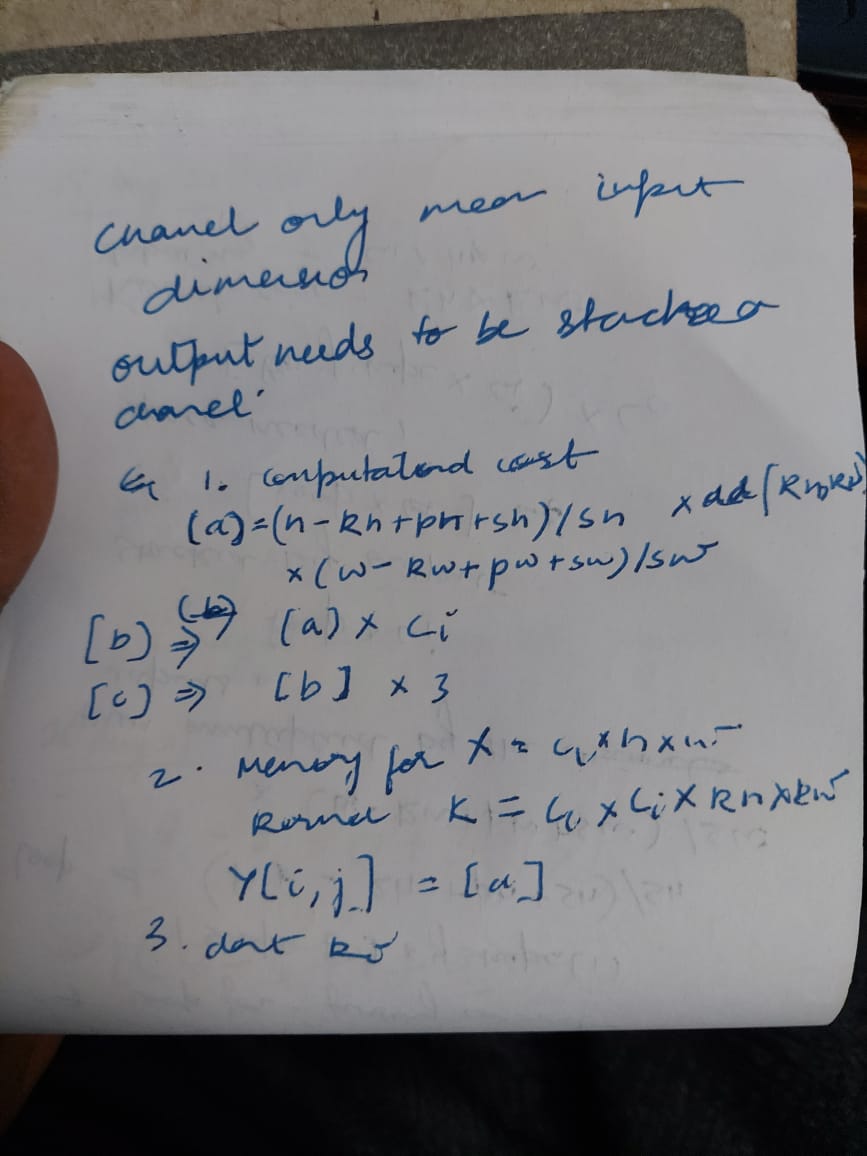

What is the computational cost (multiplications and additions) for the forward propagation?

-

What is the memory footprint?

- What is the memory footprint for the backward computation?

- dont know

- What is the computational cost for the backpropagation?

- dont know

- By what factor does the number of calculations increase if we double the number of input channels c_i and the number of output channels c_o? What happens if we double the padding?
    - The number of calculations scales with c_i and with c_o, so doubling both multiplies it by 2 \times 2 = 4.
    - If we double the padding, the output shape becomes (h - k_h + 2p_h + 1) \times (w - k_w + 2p_w + 1) (for stride 1), so the number of calculations grows in proportion to the larger output area.

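- If the height and width of a convolution kernel are k_h=k_w=1, what is the computational complexity of the forward propagation?
    - The output shape is (h - k_h + 1) \times (w - k_w + 1) = h \times w, so (with stride 1 and no padding) the forward pass costs about c_i \cdot c_o \cdot h \cdot w multiplications and a similar number of additions.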

- Are the variables `Y1` and `Y2` in the last example of this section exactly the same? Why?
    - It came out the same for me!

- How would you implement convolutions using matrix multiplication when the convolution window is not 1\times 1?
    - Don't know.
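For the last exercise, one common approach (usually called im2col) copies every k_h\times k_w window of the input into a row of a matrix. The convolution then becomes a single matrix product with the flattened kernel. Below is a minimal pure-Python sketch for one input and one output channel, assuming stride 1 and no padding. The function names are hypothetical and not from the book.

```python
def corr2d(X, K):
    """Direct 2D cross-correlation (stride 1, no padding) as a reference."""
    kh, kw = len(K), len(K[0])
    oh, ow = len(X) - kh + 1, len(X[0]) - kw + 1
    return [[sum(X[i + a][j + b] * K[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]

def im2col(X, kh, kw):
    """Flatten every kh x kw window of X into one row; rows follow output positions row-major."""
    oh, ow = len(X) - kh + 1, len(X[0]) - kw + 1
    return [[X[i + a][j + b] for a in range(kh) for b in range(kw)]
            for i in range(oh) for j in range(ow)]

def matvec(M, v):
    """Matrix-vector product M @ v using plain lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def corr2d_matmul(X, K):
    """Cross-correlation computed as one matrix-vector product."""
    kh, kw = len(K), len(K[0])
    oh, ow = len(X) - kh + 1, len(X[0]) - kw + 1
    cols = im2col(X, kh, kw)                                 # shape (oh*ow, kh*kw)
    k_flat = [K[a][b] for a in range(kh) for b in range(kw)]  # shape (kh*kw,)
    y = matvec(cols, k_flat)                                 # shape (oh*ow,)
    return [y[i * ow:(i + 1) * ow] for i in range(oh)]       # reshape to (oh, ow)

X = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
K = [[0, 1], [2, 3]]
print(corr2d(X, K))         # [[19, 25], [37, 43]]
print(corr2d_matmul(X, K))  # [[19, 25], [37, 43]]
```

With c_i input channels and c_o output channels, each row would hold c_i \cdot k_h \cdot k_w values and the flattened kernel would become a (c_i \cdot k_h \cdot k_w)\times c_o matrix. The whole layer is then a single matrix-matrix product. The trade-off is memory, because overlapping windows are copied into the matrix.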

Here is my attempt:

1.1) The convolution operation satisfies associativity: (I * K1) * K2 can be written as I * (K1 * K2), where I is an image and K1 and K2 are kernels.
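Deep learning frameworks actually compute cross-correlation, not flipped convolution, but the same idea holds there. The two kernels combine into one kernel through a full convolution of K_1 and K_2. Here is a 1D derivation, using \star for valid cross-correlation and 0-based indices (notation assumed here, not from the post):

$$
\begin{aligned}
\big((I \star K_1) \star K_2\big)[i]
  &= \sum_{b=0}^{k_2-1} K_2[b]\,(I \star K_1)[i+b]
   = \sum_{b=0}^{k_2-1} \sum_{a=0}^{k_1-1} K_2[b]\, K_1[a]\, I[i+a+b] \\
  &= \sum_{c=0}^{k_1+k_2-2} \Big(\sum_{a+b=c} K_1[a]\, K_2[b]\Big)\, I[i+c]
   = (I \star K)[i],
\qquad K[c] = \sum_{a+b=c} K_1[a]\, K_2[b].
\end{aligned}
$$

The index c runs from 0 to k_1 + k_2 - 2, so K has k_1 + k_2 - 1 entries. The 2D case works the same way with two-dimensional indices.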

1.2) After applying the two kernels one after the other, the output size is (w - k1 + 1) - k2 + 1 = w - k1 - k2 + 2, where w is the size of image I.

This can be written as w - (k1 + k2 - 1) + 1, so the combined kernel should have size k1 + k2 - 1.
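Here is a quick numerical check of the combined-kernel size k1 + k2 - 1 in 1D. It is a minimal sketch in plain Python with made-up random integer data, so the equality check is exact. The helpers `corr1d` and `full_conv1d` are hypothetical names for this illustration.

```python
import random

def corr1d(x, k):
    """Valid 1D cross-correlation (what conv layers compute): len(x) - len(k) + 1 outputs."""
    n = len(x) - len(k) + 1
    return [sum(k[a] * x[i + a] for a in range(len(k))) for i in range(n)]

def full_conv1d(k1, k2):
    """Full convolution of two kernels: K[c] = sum over a + b = c of k1[a] * k2[b]."""
    out = [0] * (len(k1) + len(k2) - 1)
    for a, u in enumerate(k1):
        for b, v in enumerate(k2):
            out[a + b] += u * v
    return out

random.seed(0)
w, k1_size, k2_size = 10, 3, 4
x = [random.randint(-5, 5) for _ in range(w)]
k1 = [random.randint(-5, 5) for _ in range(k1_size)]
k2 = [random.randint(-5, 5) for _ in range(k2_size)]

two_step = corr1d(corr1d(x, k1), k2)  # apply k1, then k2
k = full_conv1d(k1, k2)               # the single equivalent kernel
one_step = corr1d(x, k)

print(len(k))                # 6, i.e. k1 + k2 - 1
print(len(two_step))         # 5, i.e. w - k1 - k2 + 2
print(two_step == one_step)  # True
```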

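1.3) Not in general. Linearity alone does not guarantee that a kernel can be split into two smaller kernels. For example, the 1D kernel [1, 0, 1] cannot be obtained by applying two real kernels of size 2 one after the other. From [a, b] and [c, d] we would get [ac, ad + bc, bd], and ac = bd = 1 together with ad + bc = 0 forces a^2 + b^2 = 0, which has no real solution.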

I am a bit confused by 7.4.1, where we add up the cross-correlation results of all the input channels. Isn't that the same as adding the channels pixelwise and then convolving the resulting single channel?
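The two orders agree only when every input channel uses the same kernel. In multi-input-channel convolution each input channel has its own kernel slice, so the results differ in general. The small check below uses made-up integer data. The helpers `corr2d` and `add` are hypothetical plain-Python versions written for this sketch.

```python
def corr2d(X, K):
    """2D cross-correlation, stride 1, no padding."""
    kh, kw = len(K), len(K[0])
    oh, ow = len(X) - kh + 1, len(X[0]) - kw + 1
    return [[sum(X[i + a][j + b] * K[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]

def add(A, B):
    """Elementwise (pixelwise) sum of two equally shaped 2D lists."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

# Two input channels with made-up values.
X0 = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
X1 = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
# One kernel slice per input channel.
K0 = [[0, 1], [2, 3]]
K1 = [[1, 2], [3, 4]]

per_channel = add(corr2d(X0, K0), corr2d(X1, K1))  # correlate each channel, then sum
sum_first = corr2d(add(X0, X1), K0)                # sum pixelwise, then one kernel

print(per_channel)               # [[56, 72], [104, 120]]
print(sum_first)                 # [[44, 56], [80, 92]]
print(per_channel == sum_first)  # False

# With a shared kernel the two orders agree, by linearity.
print(add(corr2d(X0, K0), corr2d(X1, K0)) == corr2d(add(X0, X1), K0))  # True
```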