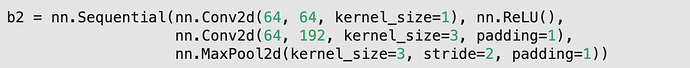

Hello, I noticed that there is no ReLU after the second convolutional layer in module ‘b2’ in the PyTorch version. Is it missing?

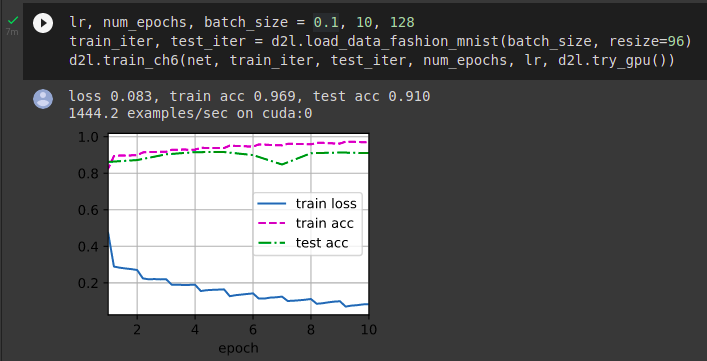

I was also confused by this, so I looked for the answer in the original paper.

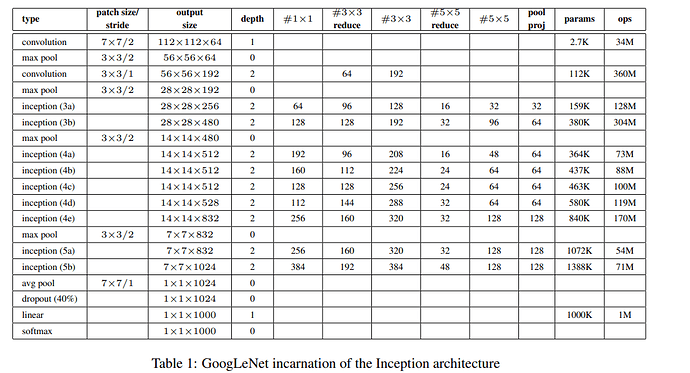

The structure of GoogLeNet is shown below:

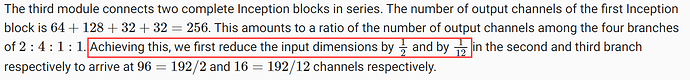

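In Inception(3a), "#3×3 reduce" is the number of channels fed into the 3×3 convolution on the second path (the output of the 1×1 reduction layer), and "#3×3" is the number of output channels of the second path. The chapter only tells us that the ratio (this layer's input channels) / (previous layer's output channels) is 96/192 = 1/2, and "#5×5 reduce" is treated the same way. It is hard to find a rule, because the ratios change to 1/2 and 1/8 in the second Inception block.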
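To make the ratios concrete, here is a small sketch that recomputes them. The channel counts are the ones I read from the paper's architecture table for Inception(3a) and Inception(3b); the dictionary layout and the helper name `reduction_ratios` are just my own choices for illustration.

```python
from fractions import Fraction

# Channel counts taken from the GoogLeNet architecture table
# (block input channels, "#3x3 reduce", "#5x5 reduce").
blocks = {
    "3a": {"in": 192, "3x3_reduce": 96, "5x5_reduce": 16},
    "3b": {"in": 256, "3x3_reduce": 128, "5x5_reduce": 32},
}


def reduction_ratios(cfg):
    """Return (3x3 reduce / input, 5x5 reduce / input) as exact fractions."""
    return (
        Fraction(cfg["3x3_reduce"], cfg["in"]),
        Fraction(cfg["5x5_reduce"], cfg["in"]),
    )


for name, cfg in blocks.items():
    r3, r5 = reduction_ratios(cfg)
    print(f"Inception({name}): 3x3 reduce = {r3}, 5x5 reduce = {r5}")
```

The two blocks share the 1/2 ratio on the second path but differ on the third path, which is why no single rule seems to fit.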

If you want to know why the structure is like that, the paper does not seem to give a reason. I think it came from a lot of trial and error.

Lastly, I think it is more important to understand the idea: we can combine several convolutions (1×1, 3×3, 5×5) with fewer channels instead of directly using a single 5×5 or 3×3 convolution.
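As a rough illustration of why fewer channels help, the sketch below counts the parameters on the 5×5 path of Inception(3a) with and without the 1×1 reduction. The channel counts (192 in, 16 after reduction, 32 out) are from the paper's table; the helper `conv_params` is a name I made up, and it simply counts weights plus biases of a standard convolution.

```python
def conv_params(c_in, c_out, k):
    """Weights plus biases of a k x k convolution with c_in input and c_out output channels."""
    return c_in * c_out * k * k + c_out


# Hypothetical direct path: a single 5x5 conv from 192 to 32 channels.
direct = conv_params(192, 32, 5)

# Path used in Inception(3a): 1x1 conv down to 16 channels, then 5x5 conv to 32.
reduced = conv_params(192, 16, 1) + conv_params(16, 32, 5)

print("direct 5x5:      ", direct)
print("1x1 then 5x5:    ", reduced)
print("reduced / direct:", round(reduced / direct, 3))
```

With these numbers, the reduced path needs roughly a tenth of the parameters of the direct 5×5 convolution.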

> A scale of 1/2 and 1/8 respectively suffices

Since the author used the word “suffices”, I think the number can be chosen empirically.