http://d2l.ai/chapter_generative-adversarial-networks/gan.html

http://preview.d2l.ai/d2l-en/master/chapter_generative-adversarial-networks/gan.html

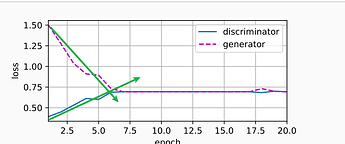

It's better for the discriminator's loss to be bigger.

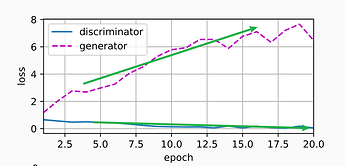

It's better for the generator's loss to be smaller.

There's a question I can't figure out: https://stackoverflow.com/questions/63763835/why-is-my-loss-of-generator-0-why-is-loss-of-discriminator-bigger-than-generato

Can anyone help me?

Hi @StevenJokes, we want to minimize both losses. Can you tell us where you saw the argument above? Thanks

GAN:

DCGAN:

I forgot the book's name… I've read too many books…

I found it: 《深入浅出PyTorch:从模型到源码》 (PyTorch Explained Simply and in Depth: From Models to Source Code), written by 张校捷 (Zhang Xiaojie).

GAN: PyTorchIntroduction/Chapter4/gan.py at master · zxjzxj9/PyTorchIntroduction · GitHub

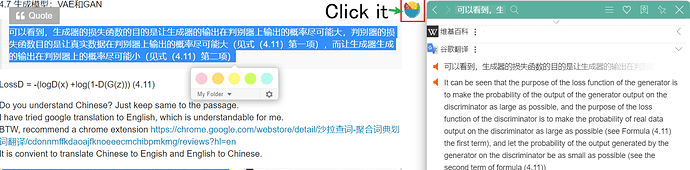

4.7 Generative Models: VAE and GAN

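As can be seen, the goal of the generator's loss function is to make the probability that the discriminator assigns to the generator's output as large as possible. The goal of the discriminator's loss function is to make the probability it assigns to real data as large as possible (see the first term of Eq. (4.11)), while making the probability it assigns to the generator's output as small as possible (see the second term of Eq. (4.11)).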

$$\mathrm{Loss}_D = -\left(\log D(x) + \log\left(1 - D(G(z))\right)\right) \tag{4.11}$$
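As a rough illustration of Eq. (4.11) and the generator objective described above, here is a minimal sketch in plain Python that works on single probabilities instead of batches. The function names `discriminator_loss` and `generator_loss` are hypothetical, and writing the generator's loss as -log D(G(z)) is an assumption (a common "non-saturating" choice), not necessarily what the book's gan.py does.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Eq. (4.11): Loss_D = -(log D(x) + log(1 - D(G(z)))).

    d_real is D(x), the probability D assigns to a real sample.
    d_fake is D(G(z)), the probability D assigns to a generated sample.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Assumed non-saturating generator loss -log D(G(z)).

    It is small when D(G(z)) is close to 1, i.e. when D is fooled.
    """
    return -math.log(d_fake)


# A discriminator that separates real from fake well has a small loss...
print(round(discriminator_loss(0.9, 0.1), 4))  # 0.2107
# ...while a confused discriminator (0.5 everywhere) has a larger one.
print(round(discriminator_loss(0.5, 0.5), 4))  # 1.3863
# The generator's loss shrinks toward 0 as D(G(z)) approaches 1.
print(round(generator_loss(0.1), 4), round(generator_loss(0.99), 4))  # 2.3026 0.0101
```

Both losses are minimized during training, but they pull D(G(z)) in opposite directions: the discriminator wants it near 0, the generator wants it near 1. Under this loss form, a generator loss near 0 simply means the discriminator is currently being fooled.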

Do you understand Chinese? I've quoted the passage exactly as it appears in the book.

I tried Google Translate on it, and the English output was understandable to me.

BTW, I recommend a Chrome extension: Saladict - Pop-up Dictionary and Page Translator - Chrome Web Store

It makes it convenient to translate Chinese to English and English to Chinese.

Will your team give me an AI job now?

I really need to learn AI by working with you…

Would you like to train me…