Well, then we will have to change the limit we sum over from (k,l) to (i+a, j+b)!

We now conceive of not only the inputs but also the hidden representations as possessing spatial structure.

So does this mean that each perceptron in our hidden layer is two-dimensional much like our input image?

Also, why does our weight matrix W have to be a fourth-order tensor? If our input X is a two-dimensional matrix and we are multiplying W with it, shouldn’t W be a two-dimensional matrix as well?

Hi @Kushagra_Chaturvedy, great question! Here we assume we have (𝑘,𝑙) neurons, and each neuron has “i x j” weights. These weights can be stored as a 1D or a 2D array. If we reshape them to 2D, they form the convolution kernel. Let me know if it makes sense to you!
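A small numerical sketch may help with the "why fourth-order" question. It assumes that 6.1.1 (without the bias) reads H[i, j] = sum over k, l of W[i, j, k, l] * X[k, l]; the sizes and variable names below are illustrative only. Under that assumption, the fourth-order W is the ordinary 2D weight matrix of an MLP layer, just reshaped so that both the hidden units and the pixels keep their two spatial indices.

```python
import numpy as np

rng = np.random.default_rng(0)
h, w = 3, 4                          # illustrative image height and width
X = rng.normal(size=(h, w))          # 2D input, indexed by (k, l)
W = rng.normal(size=(h, w, h, w))    # fourth-order weights, indexed by (i, j, k, l)

# H[i, j] = sum over k, l of W[i, j, k, l] * X[k, l]
H = np.einsum("ijkl,kl->ij", W, X)

# The same computation as a plain MLP layer on the flattened image:
# rows of W2d enumerate hidden units (i, j), columns enumerate pixels (k, l).
W2d = W.reshape(h * w, h * w)
H_flat = W2d @ X.reshape(-1)

same = np.allclose(H, H_flat.reshape(h, w))
print(same)
```

So a 2D W is enough only if X and H are flattened into vectors; keeping both as 2D grids is what makes four indices necessary.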

Hi,

In equation 6.1.1, the weight is indexed with i, j, k, l, while X is indexed with just k and l. However, X is the input from which H[i][j] is produced, so shouldn’t X be indexed with i and j? Further computation is also centered on the (i, j)-th element of X. So I suppose the indexing of W and X should be the other way around.

Thanks,

Jugal

What does it mean to have (k, l) neurons, with each neuron having “i*j” weights? And why do we set k = i+a and l = j+b without changing the limits of the sum? That is, shouldn’t we have W(i, j, i+a, j+b) * X(i+a, j+b), summed over k = i+a and l = j+b?
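One possible reading of the re-indexing, written out as a sketch. It assumes 6.1.1 has the form below with the bias term omitted, and that V is simply W with relabeled indices:

$$
\begin{aligned}
H_{i,j} &= \sum_{k}\sum_{l} W_{i,j,k,l}\, X_{k,l} \\
        &= \sum_{a}\sum_{b} W_{i,j,i+a,j+b}\, X_{i+a,j+b}
           \qquad (k = i + a,\; l = j + b) \\
        &= \sum_{a}\sum_{b} V_{i,j,a,b}\, X_{i+a,j+b},
           \qquad V_{i,j,a,b} := W_{i,j,i+a,j+b}.
\end{aligned}
$$

Under this reading, the substitution changes the summation variables, not the set of terms: for a fixed (i, j), letting (k, l) run over every pixel is the same as letting the offsets (a, b) = (k − i, l − j) run over every positive and negative shift that stays inside the image.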

Hi @goldpiggy, can you please clarify your comment? What does it mean to have (k,l) neurons? It seems to me that what @jugalraj is asking also makes sense. Could you please take another look at 6.1.1? Either there is a typo there or the explanation should be a bit more detailed. Thanks!

@Kushagra_Chaturvedy @wilfreddesert @bergamo_bobson

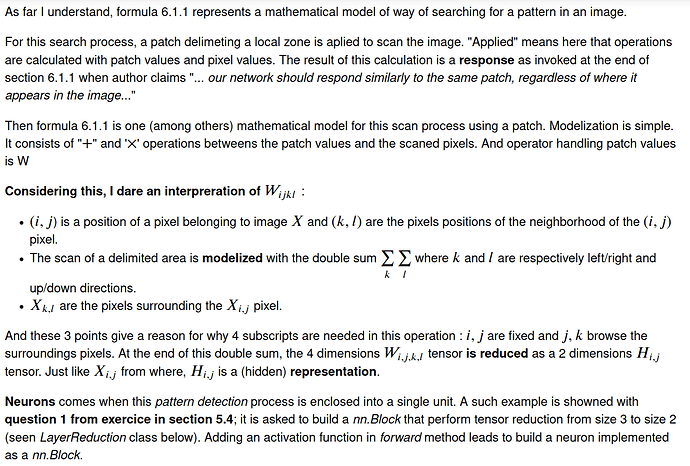

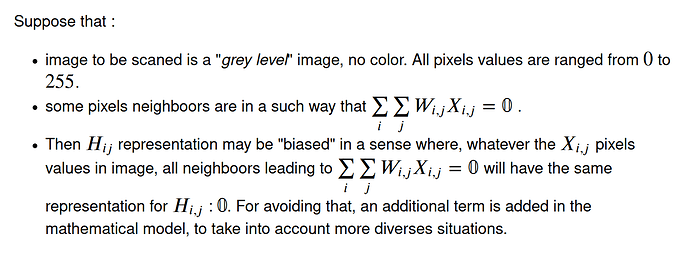

I may have an interpretation that considers tensor dimensions.

There is a non-obvious connection with neurons, as explained at the end of the screenshot below.

    # Assumes MXNet 1.x Gluon, where Block.params.get is available
    from mxnet import init, np, npx
    from mxnet.gluon import nn

    npx.set_np()


    class LayerReduction(nn.Block):
        def __init__(self, t_input, weight_scale=10, **kwargs):
            super().__init__(**kwargs)
            self._dim_i, self._dim_j, self._dim_k = t_input
            # Weight initialization: third-order tensor of shape (i, j, k)
            self._weight = self.params.get('weight', shape=t_input)
            self._weight.initialize(init=init.Uniform(scale=weight_scale))
            # Reduced weights initialization (all zeros); this buffer holds the output
            self._reducedWeight = self.params.get('weightReduced',
                                                  shape=(self._dim_k,))
            self._reducedWeight.initialize(init=init.Uniform(scale=0))
            # Can also apply
            # self._reducedWeight.data()[:] = 0.

        def forward(self, X):
            '''
            Calculates tensor reduction with following steps :
            * Step 1 : A_ik = Sum_j W_ijk . X_j
            * Step 2 : Sum_i A_ik . X_i

            INPUT :
            * X : vector of length i + j (X_i followed by X_j)

            OUTPUT : array of dimension k
            '''
            Xi = X[:self._dim_i]
            Xj = X[self._dim_i:self._dim_i + self._dim_j]
            # Use the sizes stored on the layer, not notebook globals
            Wki = np.zeros((self._dim_k, self._dim_i))
            for k in range(self._dim_k):
                Wk_ij = self._weight.data()[:, :, k]  # (i, j)
                Wki[k, :] = np.dot(Wk_ij, Xj)  # row k of Wki, shape (i,)
            self._reducedWeight.data()[:] = np.dot(Wki, Xi)  # (k,)
            return self._reducedWeight.data()

Test of LayerReduction

    t_input = (2, 3, 4)
    dim_i, dim_j, dim_k = t_input
    net = LayerReduction(t_input)
    X = np.random.normal(0., 1., (dim_i + dim_j))
    print(net(X))

Yeah, I think we should understand the underlying theory instead of just the direct results.

- From 6.1.1 we know that k, l do not depend on i, j, but in the subsequent equation a, b are offsets from i, j, which means that a, b are now tied to i, j. I think this is correct iff a and b both range from −∞ to ∞.
- What is the real meaning of going from W to V, apart from the subscripts? Is it only a rewriting as a filter?
- Why is it necessary for X and H to have the same shape in 6.1.1?

But from the point of view of an FC layer in an MLP, k and l should cover the whole image. This means that k, l should not be limited to the elements adjacent to (i, j).

I agree: in the case of equivalence with MLP processing, H is a representation of X with the same shape (same area).

Rewriting 6.1.1 with the subscripts (a,b) allows us to consider sums with bounds. Those bounds depend on the size of a and b, as shown in 6.1.3; (a,b) can then both be regarded as distances around (i,j), and they can be interpreted as geometric limits, say, a neighbourhood of (i,j).

Because the calculation is restricted to an area, the term “filter” now becomes appropriate.

And as the section title, “Constraining the MLP”, claims, the same calculations as in an MLP take place, but on an arbitrarily restricted area.

From my point of view, if the calculations are restricted to a neighbourhood of (i,j), then W is no longer equal to V. I think that if H and X are supposed to have the same shape, this will no longer be the case once the calculation is restricted to a neighbourhood of (i,j).
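The points above can be checked numerically. The sketch below is only an illustration under stated assumptions: V[i, j, a, b] is taken to be W[i, j, i+a, j+b], offsets that fall outside the image are skipped (which amounts to zero padding), and `hidden` and `delta` are hypothetical names introduced here.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5
X = rng.normal(size=(n, n))
W = rng.normal(size=(n, n, n, n))

def hidden(W, X, delta=None):
    """Sum over offsets (a, b); optionally keep only |a|, |b| <= delta."""
    n = X.shape[0]
    H = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            for a in range(-i, n - i):          # all offsets with 0 <= i + a < n
                for b in range(-j, n - j):      # all offsets with 0 <= j + b < n
                    if delta is not None and max(abs(a), abs(b)) > delta:
                        continue                # outside the neighbourhood of (i, j)
                    H[i, j] += W[i, j, i + a, j + b] * X[i + a, j + b]
    return H

H_full = np.einsum("ijkl,kl->ij", W, X)   # original (k, l) form
H_reindexed = hidden(W, X)                # (a, b) form, unrestricted
H_local = hidden(W, X, delta=1)           # restricted to a 3 x 3 neighbourhood

print(np.allclose(H_full, H_reindexed))   # re-indexing alone changes nothing
print(np.allclose(H_full, H_local))       # the restriction does change the values
print(H_local.shape == X.shape)           # H keeps the shape of X in this sketch
```

In this sketch the restriction changes the values of H but not its shape, because every position (i, j) still gets an output; whether the shape is preserved in general depends on how the border is handled.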

Sorry, I am still not clear about the ‘same shape’ problem.

For instance, take an MLP with an image as input (10x10, i.e. 100 features in total) and a first hidden layer of 4x4, i.e. 16 units. How would you describe ‘same shape’ in detail? Does it only mean the same spatial structure (i.e. two dimensions)?

In formula 6.1.1 there is no doubt about the meaning of k, l (k = 0, 1, …, 9; l = 0, 1, …, 9), since each unit in the hidden layer must sum over all input features with its own weights, so k, l do not depend on i, j (i.e. they are common to the whole hidden layer). But what about a, b now?

thanks

I agree: for an FC hidden layer, each unit has its own weights and, as far as I understand your point, the weight calculation leads to values that are independent of each pixel position indexed with (i,j).

But this is the same when applying a translation of the subscripts by (a,b).

The reason is that when feeding a 2D image into an MLP estimator, the input layer is flattened into a vector. Browsing the dimensions with (k,l) or with (a,b) leads to the same weight calculation.

For the shapes of the input data and its representation in the hidden layer:

When the hidden layer has the same number of nodes as there are pixels in the input layer, H, the representation of X in the hidden layer, is regarded as having the same dimension (same number of pixels) as X, the input image.

When the number of units is smaller, H is regarded as a dimension reduction of the input X.

When the number of units is greater than the number of pixels in X, H is regarded as a projection into a higher-dimensional space (an analogy could be made with the kernel trick).

I think that maybe the introduction of (a,b) allows introducing the concept of a neighbourhood and then the concept of a feature map, in which (k,l) becomes dependent on (i,j) when weights are shared among the nodes of the hidden layer.

The fourth dimension in eq. 6.1.7 is the batch size, I guess. The input tensors are three-dimensional (height × width × channel), so for the equation to have four indices, the fourth must be the batch size.

In formula 6.1.7, could we modify it to have bias term included? I have been confused about the index of bias term when multiple channels are involved in the convolutional layer.

What does a translation-variant image mean? Is it a video?

Well, I don’t think the fourth dimension represents the batch size. The idea is that you can apply multiple kernels to the same image and stack multiple layers. So you have H x W x C (height, width, channels) as input from the image, and the output is a hidden layer that has D channels (the number of kernels you apply to the image). In the beginning the channels are the RGB colors; afterwards you get channels that might represent vertical edges, horizontal edges, corners, etc., and they get more abstract. The fourth dimension in this case says how many abstract channels you get in the hidden layer.

PS Usually you don’t have a separate set of weights for each example in the batch. You are training one model.
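To make the four indices concrete, here is a minimal sketch. It assumes 6.1.7 has the form H[i, j, d] = sum over a, b, c of V[a, b, c, d] * X[i+a, j+b, c], and it adds one bias value per output channel d, which is the usual convention in convolutional layers rather than something stated in the equation. All sizes and names are illustrative, and the border is zero-padded.

```python
import numpy as np

rng = np.random.default_rng(0)
height, width, c_in, c_out, delta = 6, 6, 3, 4, 1

X = rng.normal(size=(height, width, c_in))                     # input: H x W x C
V = rng.normal(size=(2 * delta + 1, 2 * delta + 1, c_in, c_out))  # indices (a, b, c, d)
bias = rng.normal(size=(c_out,))                               # assumed: one bias per channel d

# Zero-pad the two spatial axes so that X[i + a, j + b] exists for |a|, |b| <= delta
Xp = np.pad(X, ((delta, delta), (delta, delta), (0, 0)))

H = np.zeros((height, width, c_out))
for i in range(height):
    for j in range(width):
        # Window centered on (i, j), shape (2*delta+1, 2*delta+1, c_in)
        patch = Xp[i:i + 2 * delta + 1, j:j + 2 * delta + 1, :]
        H[i, j, :] = np.einsum("abc,abcd->d", patch, V) + bias

print(V.ndim, H.shape)
```

No batch axis appears anywhere here: the four axes of V are the two spatial offsets, the input channel, and the output channel, and the same V would be reused for every example in a batch.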

Not sure if anyone uses this term.

So invariant means that something doesn’t change if some action is applied. If you roll 5 dice, the sum depends on the value of each die but not on the order in which they were rolled, so you might say the sum is order invariant. If you play a game where you win if your second roll is greater than the first, this is no longer order invariant, so you might say it is order ‘variant’. I don’t think that anyone does that though.

A case where you might not want your model to have translation invariance is a controlled environment where you have prior knowledge of the expected structure of the image.
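The dice example can be checked in a few lines. This is just an illustration with made-up roll values: the sum is the same for every ordering, while the "second roll beats the first" rule gives different answers depending on the order.

```python
import itertools

rolls = (3, 1, 6, 2, 5)  # made-up values for five dice

# Every possible order in which the same five values could have been rolled
orders = list(itertools.permutations(rolls))

sums = {sum(order) for order in orders}            # distinct sums over all orders
wins = {order[1] > order[0] for order in orders}   # distinct outcomes of the game

print(sums)  # a single value: the sum is order invariant
print(wins)  # both True and False occur: the game is not order invariant
```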

Assume that the size of the convolution kernel is Δ=0. Show that in this case the convolution kernel implements an MLP independently for each set of channels.

I can see how this is an MLP at the pixel level but not at the channel level. In this case you can think of each pixel (with all its channels) as an example and the whole image as the batch. Please correct me if I’m wrong.

If we use Δ=0, then only the (i, j) pixel is used to calculate each H[i, j, :]. The last dimension (the new channels of H) is computed as H[i, j, d] = (sum over all channels c) V[0, 0, c, d] * X[i, j, c]. So basically you are computing a linear regression for each output channel d using the c input channels of the (i, j) pixel. Because there may be multiple d channels, it is a linear layer. If we add an activation function after it and further layers, we get an MLP.
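A quick check of this argument, as a sketch with illustrative sizes and names: with Δ=0 the kernel has spatial size 1 x 1, and applying it gives the same result as treating every pixel as one example and passing its channel vector through a single linear layer (no bias or activation here).

```python
import numpy as np

rng = np.random.default_rng(0)
height, width, c_in, c_out = 4, 5, 3, 2

X = rng.normal(size=(height, width, c_in))
V = rng.normal(size=(1, 1, c_in, c_out))   # delta = 0: a single spatial offset (0, 0)

# H[i, j, d] = sum over c of V[0, 0, c, d] * X[i, j, c]
H_conv = np.einsum("ijc,cd->ijd", X, V[0, 0])

# Same thing as a linear layer: pixels are the "batch", channels are the features
pixels = X.reshape(-1, c_in)               # (height * width, c_in)
H_linear = (pixels @ V[0, 0]).reshape(height, width, c_out)

print(np.allclose(H_conv, H_linear))
```

The weights V[0, 0] are shared by all pixels, which is why the whole image behaves like a batch for one small linear layer.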