I found that hotdog is class index 934 in ImageNet.

In Exercise 4:

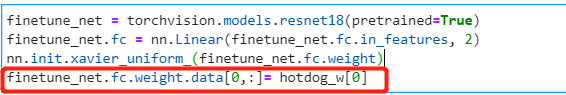

In my opinion, we can copy hotdog_w into the parameters of fc.

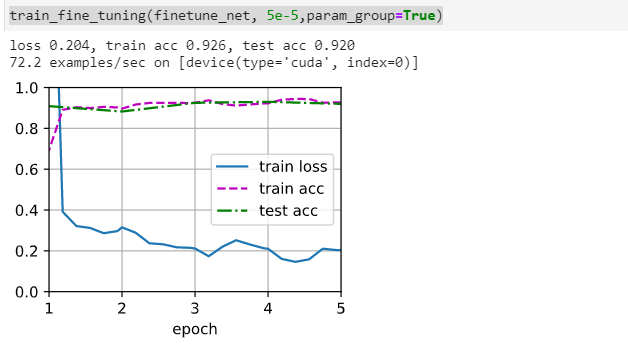
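For anyone who cannot see the screenshot, here is a minimal sketch of the idea. It uses randomly initialized `nn.Linear` layers as stand-ins for `pretrained_net.fc` and `finetune_net.fc`, so nothing has to be downloaded. The 512-feature size assumes a ResNet-18 head, and `HOTDOG_LABEL = 0` is my assumption about which label the fine-tuning dataset gives to hotdogs; check both against your own setup.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in for pretrained_net.fc (assumed ResNet-18 head: 512 -> 1000 classes).
pretrained_fc = nn.Linear(512, 1000)

# New two-class head, playing the role of finetune_net.fc.
new_fc = nn.Linear(512, 2)
nn.init.xavier_uniform_(new_fc.weight)

HOTDOG_IMAGENET_IDX = 934  # ImageNet index reported in this thread
HOTDOG_LABEL = 0           # assumption: label of 'hotdog' in the new dataset

# Copy the pretrained hotdog row (and its bias) into the matching row
# of the new head. no_grad() keeps autograd from tracking the copy.
with torch.no_grad():
    hotdog_w = pretrained_fc.weight[HOTDOG_IMAGENET_IDX]  # shape (512,)
    new_fc.weight[HOTDOG_LABEL].copy_(hotdog_w)
    new_fc.bias[HOTDOG_LABEL].copy_(pretrained_fc.bias[HOTDOG_IMAGENET_IDX])
```

The other row keeps its Xavier initialization, since ImageNet has no single "not a hotdog" class to copy from.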

When I use xavier_uniform_ only, the training process looks like this:

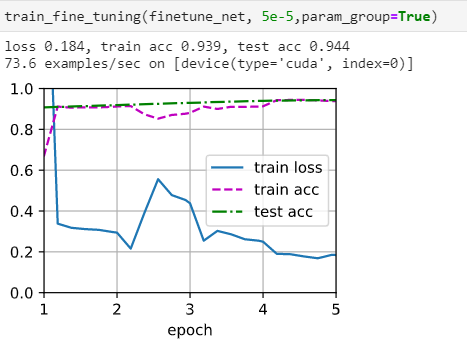

When I copy hotdog_w into fc at the beginning of training, the loss is smaller than before.

Hi @min_xu, I was wondering why we set finetune_net.fc.weight.data[0 : , ] to hotdog_w[0] and not finetune_net.fc.weight.data[1 : , ] to hotdog_w[0]. Shouldn’t index 0 correspond to label 0 and index 1 correspond to label 1 (which indicates that the image contains a hotdog)?
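One way to settle which row belongs to hotdogs is to check how the labels are assigned. The sketch below assumes the dataset is loaded with `torchvision.datasets.ImageFolder`, which sorts class folder names alphabetically, and that the two folders are named `hotdog` and `not-hotdog`. Both are assumptions about the setup, and the code only mimics the ordering with the standard library instead of calling torchvision.

```python
import os
import tempfile

# Hypothetical folder layout: <root>/hotdog and <root>/not-hotdog.
with tempfile.TemporaryDirectory() as root:
    for name in ("not-hotdog", "hotdog"):
        os.makedirs(os.path.join(root, name))

    # Mimic alphabetical class ordering: sort the sub-folder names,
    # then number them from 0.
    classes = sorted(e.name for e in os.scandir(root) if e.is_dir())
    class_to_idx = {name: i for i, name in enumerate(classes)}

print(class_to_idx)  # {'hotdog': 0, 'not-hotdog': 1}
```

Under those assumptions label 0 is the hotdog class, so row 0 of the new fc layer is the one that matches hotdog_w. On a real dataset object, printing its `class_to_idx` attribute should confirm the mapping.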

How would we go about implementing fine-tuning for a pretrained model that doesn’t support .fc?

I’ve been using AlexNet, and that doesn’t support .fc.

I’m trying to fine-tune VGG16, and I have replaced classifier[0] with a new linear layer. However, I keep getting runtime errors saying CUDA is out of memory. Is there anything else I can change besides making the batch size smaller?
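Besides the batch size, one thing that may help is freezing the convolutional layers, so autograd does not have to keep their activations and gradients, and training only the classifier. The sketch below is an illustration under assumptions: `TinyVGGLike` is a hypothetical stand-in with the same `features` / `classifier` layout as torchvision's VGG, and `freeze_features` is a helper name I made up. Also note that in torchvision's VGG16 the final output layer is `classifier[6]`, while `classifier[0]` is the first linear layer.

```python
import torch
from torch import nn


def freeze_features(net):
    """Disable gradients for net.features; return the still-trainable params."""
    for p in net.features.parameters():
        p.requires_grad_(False)
    return [p for p in net.parameters() if p.requires_grad]


class TinyVGGLike(nn.Module):
    """Hypothetical small model with VGG's features/classifier split."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(4),
        )
        self.classifier = nn.Sequential(nn.Flatten(), nn.Linear(8 * 4 * 4, 2))

    def forward(self, x):
        return self.classifier(self.features(x))


net = TinyVGGLike()
trainable = freeze_features(net)

# Only the unfrozen parameters are handed to the optimizer.
optimizer = torch.optim.SGD(trainable, lr=1e-3)

# One training step on dummy data.
x, y = torch.randn(4, 3, 32, 32), torch.tensor([0, 1, 0, 1])
loss = nn.functional.cross_entropy(net(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

The same helper should work on a real VGG16 because it also exposes `features`. Smaller input images or mixed precision are other options worth trying if freezing is not enough.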