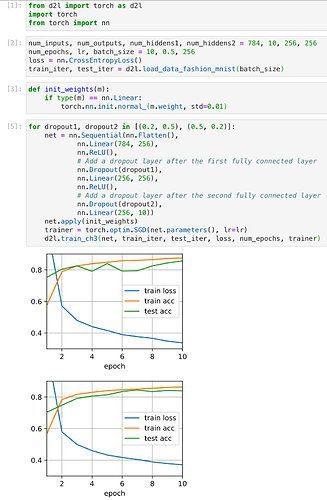

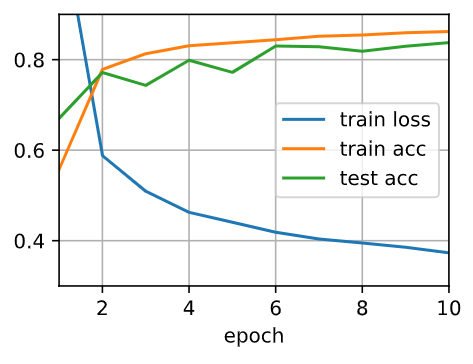

Why do the two plots, from 4.6.4.3. Training and Testing and from 4.6.5. Concise Implementation, look so different?

4.6.7. Exercises

- I tried swapping the dropout probabilities for layers 1 and 2 by switching dropout1 and dropout2:

```
Sequential(
  (0): Flatten()
  (1): Linear(in_features=784, out_features=256, bias=True)
  (2): ReLU()
  (3): Dropout(p=0.5, inplace=False)
  (4): Linear(in_features=256, out_features=256, bias=True)
  (5): ReLU()
  (6): Dropout(p=0.2, inplace=False)
  (7): Linear(in_features=256, out_features=10, bias=True)
)
```

```python
trainer = torch.optim.SGD(net.parameters(), lr=lr)
d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
```

But how can I confirm that the change is caused by switching the dropout probabilities rather than by random initialization?
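One way to separate the two effects, as far as I can tell, is to train each configuration several times with the same set of seeds and check whether the gap between the two means is larger than the seed-to-seed spread. Below is a minimal sketch of that comparison. `compare_over_seeds`, `train_and_eval`, and `build_net` are hypothetical names I made up, not part of d2l; `train_and_eval` is assumed to be a function you write that trains one net and returns its final test accuracy.

```python
import statistics


def compare_over_seeds(train_and_eval, configs, seeds):
    """Run every config once per seed; return {name: (mean, stdev)} of the score.

    Needs at least two seeds, because statistics.stdev requires two data points.
    """
    summary = {}
    for name, config in configs.items():
        scores = [train_and_eval(config, seed) for seed in seeds]
        summary[name] = (statistics.mean(scores), statistics.stdev(scores))
    return summary


# Hypothetical usage (train_and_eval and build_net are assumptions, not d2l APIs):
#
#   def train_and_eval(config, seed):
#       torch.manual_seed(seed)      # same seed => same initial weights per run
#       net = build_net(*config)     # config = (dropout1, dropout2)
#       ...                          # train, then return test accuracy as a float
#
#   configs = {"original": (0.2, 0.5), "swapped": (0.5, 0.2)}
#   print(compare_over_seeds(train_and_eval, configs, seeds=range(5)))
```

If the two means differ by much less than either standard deviation, the change you saw is probably just noise from initialization and dropout masks rather than an effect of the swap.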

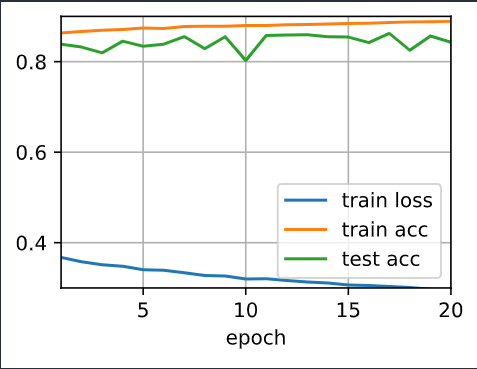

```python
num_epochs = 20
d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
```

Does d2l.train_ch3 continue training from the current parameters when it is called again on the same net? I guess so.
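To check this kind of behavior without the full Fashion-MNIST setup, here is a small sketch. The `train` function is a hypothetical stand-in for `d2l.train_ch3` (a plain full-batch loop on random data, not the d2l code), and `snapshot` and `reset` are helper names I made up. The point it illustrates: a training loop that only updates `net.parameters()` never re-initializes them, so a second call resumes from the trained weights unless you reset the layers yourself.

```python
import torch
from torch import nn


def train(net, X, y, loss, num_epochs, trainer):
    """Hypothetical stand-in for d2l.train_ch3: a plain full-batch loop."""
    for _ in range(num_epochs):
        trainer.zero_grad()
        l = loss(net(X), y)
        l.backward()
        trainer.step()


def snapshot(net):
    """Copy the current parameter values so later updates do not change them."""
    return [p.detach().clone() for p in net.parameters()]


def reset(net):
    """Re-initialize every layer that defines reset_parameters() (e.g. nn.Linear)."""
    for layer in net.modules():
        if hasattr(layer, "reset_parameters"):
            layer.reset_parameters()


torch.manual_seed(0)
X, y = torch.randn(64, 4), torch.randn(64, 1)  # toy data, not Fashion-MNIST
net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))
loss = nn.MSELoss()
trainer = torch.optim.SGD(net.parameters(), lr=0.1)

init = snapshot(net)
train(net, X, y, loss, 10, trainer)
after_first = snapshot(net)

train(net, X, y, loss, 10, trainer)  # resumes from after_first, not from init
after_second = snapshot(net)

reset(net)                           # new random weights: training starts over
train(net, X, y, loss, 10, trainer)
after_fresh = snapshot(net)
```

So if the second run is meant to be an independent experiment, re-create or re-initialize the net first; otherwise the 20 epochs are added on top of the earlier ones.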