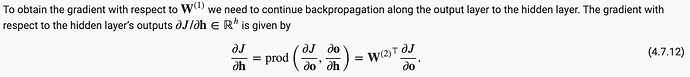

I can understand equation 4.7.12, as shown below, and the order of the elements in the matrix multiplication makes sense, i.e. the derivative of J w.r.t. h (gradient of h) is the product of the transpose of the derivative of o w.r.t. h (which is W2 in this case) and the derivative of J w.r.t. o (i.e. the gradient of o).

我可以理解等式 4.7.12, 也就是说,损失J对h的偏导数,也即h的梯度,等于h对于o的偏导数的转置与损失对于o的偏导数,也即o的梯度的乘积。

This is consistent with what Roger Grosse explained in his course that backprop is like passing error message, and the derivative of the loss w.r.t. a parent node/variable = SUM of the products between the transpose of the derivative of each of its child nodes/variables w.r.t. the parent (i.e. the transpose of the Jacobian matrix) and the derivative of the loss w.r.t. each of its children.

这与Roger Grosse的课程里对反向传播的解释是一致的,也就是说,反向传播是在传递误差信息,而损失对于当前父变量的导数,也即该父变量的梯度,等于所有其子变量对父变量的导数的转置,也即雅可比矩阵的转置与损失对于相应子变量的导数,也即子变量的梯度的乘积之总和。

However, this conceptual logic is not followed in equations 4.7.11 and 4.7.14 in which the “transpose” part is put after the “partial derivative part”, rather than in front of it. This could lead to problem in dimension mismatching for matrix multiplication in code implementation.

但似乎这一概念逻辑在等式 4.7.11和4.7.14中并没有被遵从,其中的雅可比矩阵转置的部分被放在了损失对子变量的导数的后面。这会导致在矩阵乘积运算中维度不匹配的问题。

Could the authors kindly explain if this is a typo, or the order in matrix multiplication as shown in the equations doesn’t matter because it is only “illustrative”? Thanks.

所以,请作者们解释一下,这是否是文字排版错误,抑或这个“顺序”在这些作为概念演示的等式里并不重要? 谢谢。