https://d2l.ai/chapter_linear-classification/softmax-regression-concise.html

Hi @mli I get this error when I import the libraries needed for this module:

from d2l import mxnet as d2l

from mxnet import gluon, init, npx

from mxnet.gluon import nn

npx.set_np()

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

<ipython-input-2-2bc514450bf4> in <module>

----> 1 from d2l import mxnet as d2l

2 from mxnet import gluon, init, npx

3 from mxnet.gluon import nn

4 npx.set_np()

ImportError: cannot import name 'mxnet' from 'd2l' (/Users/xyz/miniconda3/envs/d2l/lib/python3.7/site-packages/d2l/__init__.py)

Is the first import line correct? I see this error in at least all of the notebooks for the linear networks module.

I think it should be just:

import d2l

Hi @S_X, I ran the code multiple times and it went through smoothly. Please check whether you have d2l installed by running

!pip list | egrep d2l

in your Jupyter notebook. It should print the installed version of d2l, such as d2l 0.13.2.

If not, please run

!pip install d2l

in your jupyter notebook.

Hi @goldpiggy,

It worked fine for me last week. I then downloaded the latest version of d2l notebooks and this problem showed up. The issue is:

from d2l import mxnet as d2l

it should be

import d2l

In from d2l import mxnet as d2l, I don’t think the intent is to import the mxnet library from d2l and name it d2l! Please correct me if I’m mistaken.

Please update to the latest version of d2l by running pip install d2l==0.13.2 -f https://d2l.ai/whl.html. We changed the way d2l is imported in order to support multiple backends: from d2l import mxnet as d2l imports the mxnet backend of d2l, not the mxnet library itself.
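To confirm which d2l version the notebook kernel actually sees before and after upgrading, a small check along these lines may help. This is only a sketch: the helper name installed_version is made up for illustration, and it relies on importlib.metadata from the standard library, which needs Python 3.8 or newer (the traceback above shows Python 3.7, where pip list is the simpler route).

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is not installed.

    Hypothetical helper for illustration; it only queries package metadata
    and does not import the package itself.
    """
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("d2l")
if version is None:
    print("d2l is not installed in this environment")
else:
    print("d2l version:", version)
```

If the printed version is older than the one mentioned above, the notebooks and the installed package are probably out of sync, which would match the ImportError in the first post.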

Thanks! That helped!

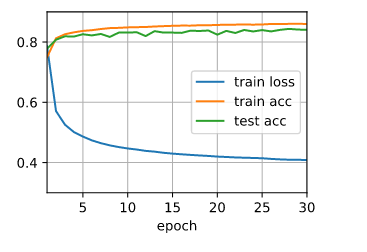

For question #2 at the end:

Why might the test accuracy decrease again after a while? How could we fix this?

When I run 30 epochs, I see a small dip in test accuracy. Is this what is being referred to? I don’t see a big decrease in test accuracy.

Hi @S_X, did you run the code

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

multiple times without reinitializing the net?

If a model keeps training for too many epochs after it has converged, it starts to fit the training data at the expense of the test data. This is called “overfitting” (http://d2l.ai/chapter_multilayer-perceptrons/underfit-overfit.html).

Hi @goldpiggy,

No, I ran the following:

num_epochs = 30

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

This is the correct way, right?

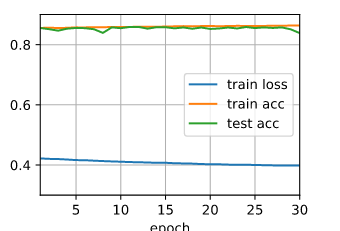

Hi @S_X! Since your initial loss is quite low, it looks like you may have run this cell:

num_epochs = 30

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

multiple times with the same defined net.

If you train on the same net again, the model continues optimizing from the parameters left by the previous run. Hence, even though the epoch axis on the plot reads “0-30”, it probably should read “30-60”, “60-90”, etc.
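The effect can be reproduced with a toy example that needs neither mxnet nor d2l. The sketch below is a stand-in, not d2l.train_ch3: make_model and train are hypothetical names, and the "model" is a single weight fitted by plain gradient descent. The point is only that calling train twice on the same object continues from where the first call stopped.

```python
def make_model():
    # Fresh parameters, analogous to re-creating and re-initializing the net.
    return {"w": 0.0}


def train(model, data, num_epochs, lr=0.1):
    """Run gradient descent in place on y = w * x.

    Returns the mean squared loss measured at the start of each epoch.
    """
    losses = []
    for _ in range(num_epochs):
        loss = sum((model["w"] * x - y) ** 2 for x, y in data) / len(data)
        grad = sum(2 * (model["w"] * x - y) * x for x, y in data) / len(data)
        losses.append(loss)
        model["w"] -= lr * grad
    return losses


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # generated from y = 2x

model = make_model()
first_run = train(model, data, num_epochs=30)
second_run = train(model, data, num_epochs=30)  # same model: really epochs 30-60

fresh_run = train(make_model(), data, num_epochs=30)  # re-initialized model

print("first run, initial loss: ", first_run[0])
print("second run, initial loss:", second_run[0])
print("fresh run, initial loss: ", fresh_run[0])
```

The second run starts with a much lower loss than the first because it inherits the trained weight, while the fresh run starts from the same loss as the first. In the notebook, the equivalent of make_model is re-running the cells that define and initialize net (and trainer) before calling d2l.train_ch3 again.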

Does it make sense to you?

Hi @goldpiggy

Yes, you are right. I ran with the same net. That explains it.

When I restart the whole notebook and train for more epochs, I can see the test accuracy drop relative to the training accuracy. Thanks for your help!

Result of 30 epochs after re-initializing all the variables:

Great! My pleasure to help!

I am not able to run Huber Loss for regression

We will solve it! Thanks!