http://zh-v2.d2l.ai/chapter_natural-language-processing-applications/sentiment-analysis-rnn.html

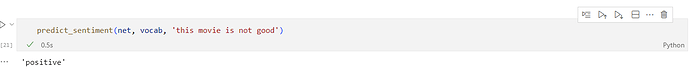

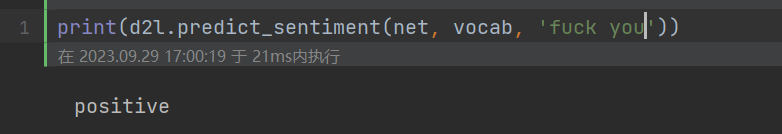

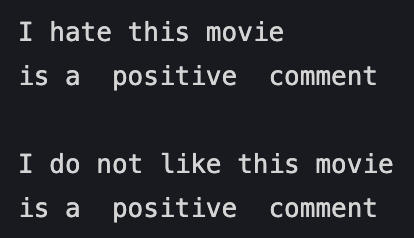

When I first ran the code, the results were poor: the model could not tell apart some fairly obvious positive and negative sentences. After I ran the code two more times, though, it performed much better than after the first training. Can someone tell me the reason? Thank you.

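You need to add net.apply(init_weights); to this cell to reset the weights of net. Otherwise every run continues from the weights left by the previous run instead of starting from scratch.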

    lr, num_epochs = 0.01, 5
    net.apply(init_weights);
    trainer = torch.optim.Adam(net.parameters(), lr=lr)
    loss = nn.CrossEntropyLoss(reduction="none")
    d2l.train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
                   devices)
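
To see why the later runs look better, here is a toy sketch in plain Python. It is not the d2l code: TinyModel, init_weights, and train below are hypothetical stand-ins for net, the notebook's init_weights, and d2l.train_ch13, and the one-weight regression is only an assumption chosen to keep the example small. It illustrates that training again without resetting the weights continues from where the previous run stopped.

```python
import random


class TinyModel:
    """Hypothetical stand-in for `net`: a single weight fitted to y = 3 * x."""

    def __init__(self):
        self.w = 0.0


def init_weights(model, seed=0):
    """Reset the weight to a seeded random value (plays the role of net.apply(init_weights))."""
    model.w = random.Random(seed).uniform(-1.0, 1.0)


def train(model, num_epochs=5, lr=0.05):
    """Gradient descent on mean squared error.

    Returns the loss measured at the start of each epoch.
    """
    data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0)]
    history = []
    for _ in range(num_epochs):
        loss = sum((model.w * x - y) ** 2 for x, y in data) / len(data)
        history.append(loss)
        grad = sum(2 * (model.w * x - y) * x for x, y in data) / len(data)
        model.w -= lr * grad  # the update is stored in the model and survives the call
    return history


model = TinyModel()

init_weights(model)
first = train(model)   # fresh weights

second = train(model)  # no reset: continues from the weights `first` left behind

init_weights(model)
third = train(model)   # reset first: behaves like the first run again

print("first run  starts at loss", first[0])
print("second run starts at loss", second[0])
print("third run  starts at loss", third[0])
```

In this sketch the second run starts with a lower loss than anything recorded in the first run, because it inherits the trained weight. The third run matches the first because the weight was reset beforehand.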

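The example code runs extremely slowly on an M1. I have already set the device to mps and the GPU is at 100%, but it took several hours to finish a single epoch.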

An M2 can't keep up either. I switched to a 3060 and it took 100 s.

Sure thing. You are simply training the same model for more epochs.

Some more questions:

a. Is it possible to use BERT embeddings instead of GloVe there?

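Indeed, I have the same problem. On the first run the model barely updated and the loss stayed high the whole time; on the second run it behaved normally.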

On an M3 it took me 500 s, which is not exactly fast either. Reportedly MPS does not handle large matrix computations well.

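I ran the example code and it still had not finished after a whole day. What is going on?

This is the PyTorch code; it has been stuck running d2l.train_ch13 the whole time.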