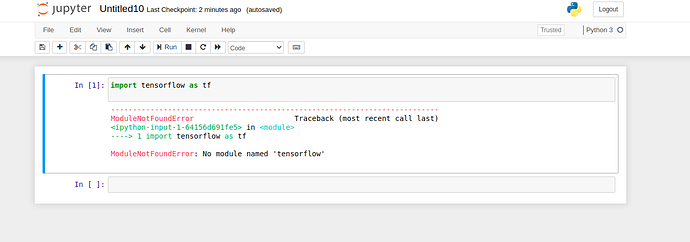

Hi, I ran into an issue while trying to follow along with this.

I installed everything as per the instructions in the installation chapter, but when I try to import tensorflow in a new Jupyter notebook, it gives the following error:

Can someone help me with this?

Hi @ikjot-2605, you may need to run conda activate d2l before you launch jupyter notebook. If you have already done that, run pip list inside that environment and check whether tensorflow is properly installed there.
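Both points can also be checked from inside the notebook itself by printing which Python interpreter the kernel runs on and whether it can find TensorFlow. This is only a minimal sketch: the helper name describe_environment is made up for illustration, and it assumes conda sets the CONDA_DEFAULT_ENV variable when an environment is activated.

```python
import importlib.util
import os
import sys


def describe_environment(package="tensorflow"):
    """Report which interpreter is running and whether `package` is importable.

    `describe_environment` is a hypothetical helper, not part of d2l or TensorFlow.
    """
    return {
        # Path of the Python executable backing this kernel
        "python": sys.executable,
        # Name of the active conda environment, or None if the variable is unset
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
        # True if the package can be found on this interpreter's import path
        "package_found": importlib.util.find_spec(package) is not None,
    }


info = describe_environment()
for key, value in info.items():
    print(f"{key}: {value}")
```

If the python path does not point inside the d2l environment, or package_found is False, the notebook kernel is probably not running in the environment where TensorFlow was installed.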

Hi,

The documentation states that, by default, the tensor's values are stored as floats.

But in TensorFlow the resulting tensor has dtype int32, which is an integer type.
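A short check of this behavior, as a sketch assuming TensorFlow 2.x with eager execution: when tf.range is given an integer argument it infers an integer dtype, and floats have to be requested explicitly. The variable names are only illustrative.

```python
import tensorflow as tf

# With an integer argument, tf.range infers an integer dtype
x_int = tf.range(12)
print(x_int.dtype.name)

# Request floats explicitly through the dtype argument
x_float = tf.range(12, dtype=tf.float32)
print(x_float.dtype.name)

# An existing integer tensor can also be converted with tf.cast
x_cast = tf.cast(x_int, tf.float32)
print(x_cast.dtype.name)
```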

"Here, we produced the vector-valued $F: \mathbb{R}^d, \mathbb{R}^d \rightarrow \mathbb{R}^d$ by lifting the scalar function to an elementwise vector operation." Can someone elaborate on what is meant by lifting?
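One way to read "lifting": start from a function f that takes two scalars and returns a scalar, then build a function F on vectors by applying f to each pair of matching entries, so that entry i of F(u, v) is f(u_i, v_i). The plain-Python sketch below illustrates that idea; the names lift, scalar_add and vector_add are made up for this example and do not come from the book or from TensorFlow.

```python
def lift(f):
    """Turn a scalar function f(a, b) into an elementwise function on vectors."""
    def F(u, v):
        if len(u) != len(v):
            raise ValueError("u and v must have the same length")
        # Apply the scalar function to each pair of matching entries
        return [f(a, b) for a, b in zip(u, v)]
    return F


def scalar_add(a, b):
    return a + b


# vector_add is the "lifted" version of scalar_add
vector_add = lift(scalar_add)
print(vector_add([1, 2, 4, 8], [2, 2, 2, 2]))  # [3, 4, 6, 10]
```

Operators such as + on tensors behave like an already-lifted scalar addition: they work on whole tensors, one matching pair of elements at a time.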

In our case, instead of calling x.reshape(3, 4), we could have equivalently called x.reshape(-1, 4) or x.reshape(3, -1). Can someone elaborate more on this?

That means you specify only the dimension you care about, the number of rows in (3, -1) or the number of columns in (-1, 4), and put -1 for the other one. The dimension marked -1 is then inferred automatically from the total number of elements.
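A small sketch of this in TensorFlow, where the call is written tf.reshape(x, shape) rather than x.reshape(...). It assumes a 12-element vector like the one in the chapter; the variable names are only illustrative.

```python
import tensorflow as tf

x = tf.range(12)

a = tf.reshape(x, (3, 4))   # both dimensions given explicitly
b = tf.reshape(x, (-1, 4))  # rows inferred: 12 / 4 = 3
c = tf.reshape(x, (3, -1))  # columns inferred: 12 / 3 = 4

print(a.shape, b.shape, c.shape)
```

All three calls give the same 3 x 4 tensor. Only one dimension can be left as -1, because otherwise the shape could not be determined uniquely.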

Section: Saving Memory (TensorFlow)

How will Z be pruned from the computation function, please?

I didn’t understand how the compiler will determine which variables won’t be used.
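As far as I understand the general idea, tf.function traces the Python function into a graph of operations, and anything the returned value does not depend on can be skipped. The sketch below is a toy illustration of that reachability idea in plain Python, not TensorFlow's actual implementation. The graph layout is an assumption about what the function in that section looks like (Z is created from Y but never used in the result), and live_nodes is a made-up helper name.

```python
def live_nodes(graph, outputs):
    """Return the set of nodes that the outputs depend on.

    `graph` maps each node name to the list of node names it reads from.
    """
    live = set()
    stack = list(outputs)
    while stack:
        node = stack.pop()
        if node in live:
            continue
        live.add(node)
        # Everything this node reads from is needed as well
        stack.extend(graph[node])
    return live


# Toy dependency graph (assumed): Z is built from Y, but nothing reads Z.
graph = {
    "X": [],
    "Y": [],
    "Z": ["Y"],
    "A": ["X", "Y"],
    "B": ["A", "Y"],
    "C": ["B", "Y"],
    "out": ["C", "Y"],
}

needed = live_nodes(graph, ["out"])
pruned = sorted(set(graph) - needed)
print(pruned)  # ['Z']
```

Walking backwards from the output reaches C, B, A, X and Y but never Z, so Z can be dropped without changing the result.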

Section: Conversion to Other Python Objects (TensorFlow)

I didn’t understand this line “This minor inconvenience is actually quite important: when you perform operations on the CPU or on GPUs, you do not want to halt computation, waiting to see whether the NumPy package of Python might want to be doing something else with the same chunk of memory.”

Can anyone please explain this?

@Chandan_Kumar

As far as I understand, it means that the TensorFlow tensor and the NumPy array should not share memory. If both operated on the same memory, it could lead to consistency issues and errors. Because each one uses its own memory location, neither TensorFlow nor NumPy has to wait for the other to finish with a shared buffer, so computation is not halted.
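This can be checked with a small experiment. It is a sketch that assumes TensorFlow 2.x with eager execution, where .numpy() hands back a copy of the tensor's contents; the variable names are only illustrative.

```python
import numpy as np
import tensorflow as tf

X = tf.constant([1.0, 2.0, 3.0])

# Convert the tensor to a NumPy array
A = X.numpy()

# Modify the NumPy array in place
A[0] = 100.0

print(A)          # the array reflects the change
print(X.numpy())  # the original tensor still holds its old values
```

Since changing A leaves X untouched, NumPy code working on A cannot interfere with TensorFlow computations that are still using X.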

Hope that answers your question.

Exercises

- Run the code in this section. Change the conditional statement X == Y to X < Y or X > Y, and then see what kind of tensor you can get.

#Ex-1

X = tf.reshape(tf.range(12, dtype=tf.float32), (3, 4))

Y = tf.constant([[2.0, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

#print(X,Y)

X==Y, X>Y, X<Y

Output

(<tf.Tensor: shape=(3, 4), dtype=bool, numpy=

array([[False, True, False, True],

[False, False, False, False],

[False, False, False, False]])>,

<tf.Tensor: shape=(3, 4), dtype=bool, numpy=

array([[False, False, False, False],

[ True, True, True, True],

[ True, True, True, True]])>,

<tf.Tensor: shape=(3, 4), dtype=bool, numpy=

array([[ True, False, True, False],

[False, False, False, False],

[False, False, False, False]])>)

- Replace the two tensors that operate by element in the broadcasting mechanism with other shapes, e.g., 3-dimensional tensors. Is the result the same as expected?

a = tf.reshape(tf.range(6), (3, 1, 2))

b = tf.reshape(tf.range(2), (2, 1))

a, b, a+b

Output

(<tf.Tensor: shape=(3, 1, 2), dtype=int32, numpy=

array([[[0, 1]],

[[2, 3]],

[[4, 5]]])>,

<tf.Tensor: shape=(2, 1), dtype=int32, numpy=

array([[0],

[1]])>,

<tf.Tensor: shape=(3, 2, 2), dtype=int32, numpy=

array([[[0, 1],

[1, 2]],

[[2, 3],

[3, 4]],

[[4, 5],

[5, 6]]])>)
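To see why the result has shape (3, 2, 2), the broadcast shape can be computed from the two input shapes alone, before adding anything. This is a sketch assuming TensorFlow 2.x; it uses tf.broadcast_static_shape together with the same a and b as above.

```python
import tensorflow as tf

shape_a = tf.TensorShape([3, 1, 2])
shape_b = tf.TensorShape([2, 1])

# Shapes are aligned from the right, so (2, 1) is treated as (1, 2, 1).
# Every axis of size 1 is then stretched to match the other operand.
result_shape = tf.broadcast_static_shape(shape_a, shape_b)
print(result_shape)

a = tf.reshape(tf.range(6), (3, 1, 2))
b = tf.reshape(tf.range(2), (2, 1))
print((a + b).shape)
```

Both printed shapes agree, so the 3-dimensional case follows the same rule as the 2-dimensional example in the section.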