I couldn’t agree more. Transposing a rank-1 tensor returns exactly itself.

Also this code

```python
for i in range(n_train + tau, T):
    multistep_preds[i] = d2l.reshape(net(
        multistep_preds[i - tau: i].reshape(1, -1)), 1)
```

can be simply written as

```python
for i in range(n_train + tau, T):
    multistep_preds[i] = net(multistep_preds[i - tau: i])
```

I agree. Fixing: https://github.com/d2l-ai/d2l-en/pull/1542

Next time you can PR first: http://preview.d2l.ai/d2l-en/master/chapter_appendix-tools-for-deep-learning/contributing.html
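To check that the simplified loop behaves like the original, here is a minimal sketch. It assumes the book's 4-10-1 MLP, a sine curve standing in for the data, and small made-up values of `T` and `n_train`. It relies on `nn.Linear` accepting an unbatched 1-D input of shape `(tau,)` and returning shape `(1,)`.

```python
import torch
from torch import nn

torch.manual_seed(0)
tau, T, n_train = 4, 20, 10  # small illustrative sizes (assumption)
net = nn.Sequential(nn.Linear(tau, 10), nn.ReLU(), nn.Linear(10, 1))
x = torch.sin(0.1 * torch.arange(T, dtype=torch.float32))  # stand-in series (assumption)

def multistep(batched):
    preds = torch.zeros(T)
    preds[: n_train + tau] = x[: n_train + tau]  # seed with observed values
    with torch.no_grad():  # inference only, so skip building an autograd graph
        for i in range(n_train + tau, T):
            window = preds[i - tau: i]  # shape (tau,)
            if batched:
                # original version: add a batch dimension, then flatten the (1, 1) output
                preds[i] = net(window.reshape(1, -1)).reshape(1)
            else:
                # simplified version: the 1-D window goes straight in, output has shape (1,)
                preds[i] = net(window)
    return preds

print(net(torch.zeros(tau)).shape)  # torch.Size([1])
print(torch.allclose(multistep(True), multistep(False), atol=1e-6))
```

Both versions feed the same windows through the same weights, so they should agree up to floating-point rounding.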

I couldn’t help but notice the similarities between the latent autoregressive model and hidden Markov models. As I understand it, the difference is that in a latent autoregressive model the hidden state h_t might change over time t, whereas in a hidden Markov model the hidden state h_t remains the same for all t. Am I correct in assuming this?

Hi everybody,

I have a question about the math. In particular, what does the sum over x_t in equation 8.1.4 mean?

Is that the sum over all the possible states x_t? That does not make much sense to me, because if I have observed x_(t+1), there is just one possible x_t.

Could someone help me in understanding that?

Thanks a lot!

Yes, it’s the sum over all possible states x_t. x_t depends on x_(t-1); however, even when x_(t-1) is observed, x_t is not certain, since the same x_(t-1) can be followed by different values of x_t. Summing over x_t accounts for every one of those possibilities.

Hope it helps with your understanding.
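A small numeric illustration, assuming the equation in question is the Markov-chain marginalization P(x_(t+1) | x_(t-1)) = sum over x_t of P(x_(t+1) | x_t) P(x_t | x_(t-1)). The 3-state transition matrix `P` below is made up, with `P[a, b]` = P(x_(t+1) = b | x_t = a). Summing over the unobserved middle state is the same as squaring the transition matrix:

```python
import numpy as np

# Hypothetical transition matrix: P[a, b] = P(x_{t+1} = b | x_t = a); rows sum to 1
P = np.array([[0.9, 0.1, 0.0],
              [0.2, 0.5, 0.3],
              [0.0, 0.4, 0.6]])

def two_step(P, prev, nxt):
    # P(x_{t+1} = nxt | x_{t-1} = prev) = sum over x_t of P(nxt | x_t) * P(x_t | prev)
    return sum(P[prev, mid] * P[mid, nxt] for mid in range(P.shape[0]))

two_step_table = np.array([[two_step(P, a, b) for b in range(3)] for a in range(3)])
print(np.allclose(two_step_table, P @ P))  # True: the sum over x_t is a matrix product
print(two_step(P, 0, 2))  # state 0 cannot reach 2 directly; only the path through x_t = 1 counts
```

Knowing x_(t-1) = 0 leaves x_t uncertain (it could be 0 or 1), which is exactly why the sum is needed.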

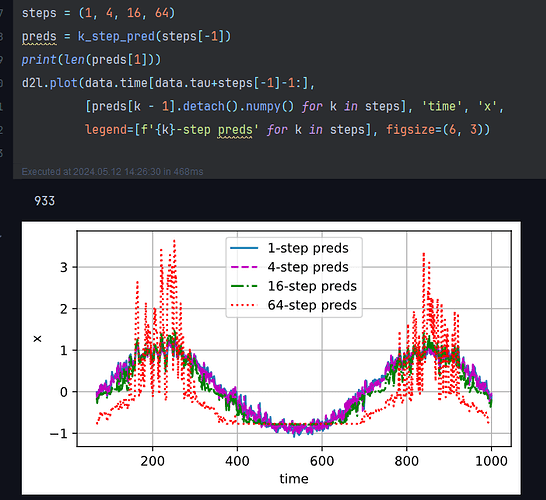

Exercises

1.1 I tried increasing tau up to 200, but to be honest I could not get a good model.

2. This is just like k-step-ahead prediction. It might give good results in the short term, but long-term predictions from the current data will be poor because the errors pile up.

3. In some instances we can predict what the next word will be, and in other cases we can come up with a range of words that are likely to follow, so yes, causality can to some extent be applied to text.

4. We can use a latent autoregressive model when the number of past observations (features) becomes too large to use directly; a latent autoregressive model instead keeps a summary of the past in a hidden state.
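To illustrate answer 2, here is a minimal sketch with made-up numbers: assume the true dynamics are x_(t+1) = a·x_t and the fitted one-step model uses a slightly wrong coefficient `a_hat`. Each one-step prediction is only about 1% off, but feeding the model's own predictions back in compounds that error over k steps.

```python
a, a_hat = 0.99, 0.98  # true and (slightly wrong) estimated coefficients (assumptions)
x0 = 1.0

def k_step_error(k):
    true = x0 * a ** k        # ground truth after k steps
    pred = x0 * a_hat ** k    # model applied to its own outputs k times
    return abs(true - pred) / abs(true)  # relative error

for k in (1, 10, 50):
    print(k, round(k_step_error(k), 3))
```

The relative error here is 1 - (a_hat / a)^k, so it keeps growing with k even though the one-step model looks almost perfect.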

About regression errors piling up, the book says that “We will discuss methods for improving this throughout this chapter and beyond.”

The whole chapter seems devoted to text-processing models. To find solutions that work well for k-step-ahead predictions, do I have to skip ahead to LSTM networks?

Exercises and my stupid answers

Improve the model in the experiment of this section.

- Incorporate more than the past 4 observations? How many do you really need?

(Tried, in a limited way. Close to one seems better.)

- How many past observations would you need if there was no noise? Hint: you can write sin and cos as a differential equation.

(I tried with sin and cos. It’s the same story: `x = torch.cos(time * 0.01)`)

- Can you incorporate older observations while keeping the total number of features constant? Does this improve accuracy? Why?

(I don’t get the question.)
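On the no-noise question, a sketch assuming the noiseless series x_t = sin(w·t) with the frequency w = 0.01 used above. Because sin(w(t+1)) = 2·cos(w)·sin(wt) - sin(w(t-1)), two past observations determine the next value exactly, so in principle a linear autoregressive model with tau = 2 is enough (the same identity holds for cos). This uses a plain linear least-squares fit, not the book's MLP.

```python
import numpy as np

w = 0.01                      # frequency (assumption, matching time * 0.01 above)
t = np.arange(1000)
x = np.sin(w * t)             # noiseless series

# Fit a linear AR(2) model by least squares: x_{t+1} ~ c0 * x_{t-1} + c1 * x_t
features = np.stack([x[:-2], x[1:-1]], axis=1)  # columns: x_{t-1}, x_t
labels = x[2:]                                   # target: x_{t+1}
coef, *_ = np.linalg.lstsq(features, labels, rcond=None)
print(coef)  # approximately [-1, 2 * cos(w)]
print(np.abs(features @ coef - labels).max())    # essentially zero

# Roll the exact recurrence forward from just two observed values
preds = list(x[:2])
for _ in range(len(x) - 2):
    preds.append(2 * np.cos(w) * preds[-1] - preds[-2])
print(np.abs(np.array(preds) - x).max())  # tiny: only floating-point rounding
```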

Change the neural network architecture and evaluate the performance.

(Tried. Same result.)

An investor wants to find a good security to buy. He looks at past returns to decide which one is likely to do well. What could possibly go wrong with this strategy?

(The only constant in life is change.)

Does causality also apply to text? To which extent?

(I believe words do have some causality: at the start of a sentence, you can expect h to follow W with a relatively high degree of confidence. But it is very topic-dependent.)

Give an example for when a latent autoregressive model might be needed to capture the dynamic of the data.

(In stock market!)

Also, I wanted to ask about the prediction step here:

```python
for i in range(n_train+tau, T):
    # predicting simply based on past 4 predictions
    multistep_preds[i] = net(multistep_preds[i-tau:i].reshape((-1,1)))
```

It doesn’t work until I do this:

```python
for i in range(n_train+tau, T):
    # predicting simply based on past 4 predictions
    multistep_preds[i] = net(multistep_preds[i-tau:i].reshape((-1,1)).squeeze(1))
```

Perhaps I’m doing something wrong, but does this need a pull request?

Here is the reference notebook: https://www.kaggle.com/fanbyprinciple/sequence-prediction-with-autoregressive-model/
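A quick shape check may explain this, assuming the book's first layer `nn.Linear(4, 10)`. Reshaping the window to `(-1, 1)` gives a `(4, 1)` tensor, i.e. four samples with one feature each, which the layer rejects because it expects 4 features in the last dimension. `.squeeze(1)` simply undoes that reshape, giving back the original 1-D `(4,)` window. Reshaping to `(1, -1)` (one sample with four features) is the batched alternative.

```python
import torch
from torch import nn

tau = 4
net = nn.Sequential(nn.Linear(tau, 10), nn.ReLU(), nn.Linear(10, 1))
window = torch.arange(tau, dtype=torch.float32)  # stand-in for multistep_preds[i-tau:i]

print(window.reshape((-1, 1)).shape)  # torch.Size([4, 1]): 4 samples, 1 feature each
try:
    net(window.reshape((-1, 1)))
except RuntimeError as err:  # last dimension 1 does not match in_features=4
    print("shape error:", err)

print(window.reshape((-1, 1)).squeeze(1).shape)  # torch.Size([4]): the original window
print(net(window.reshape((1, -1))).shape)        # torch.Size([1, 1]): one sample, one output
```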

Thanks for the Kaggle notebook, which I didn’t know about. It implements the same code as the book, with these improvements:

- Incorporate more than the past 4 observations? → almost no difference

- Remove noise → same problems

So it is a problem with this architecture, and with RNNs. I should probably finish the chapter, even if it isn’t about regression, try to adapt it to regression, and then do the same with chapter 9 :-/ A very long task.

Thank you, Gianni. It’s my notebook; I implemented it. All the best with completing the chapter!

Do LSTM models solve some of the problems from my previous question?

A few questions about the MLP:

```python
from torch import nn

def get_net():
    net = nn.Sequential(nn.Linear(4, 10), nn.ReLU(), nn.Linear(10, 1))
    net.apply(init_weights)  # init_weights is defined earlier in the book's section
    return net
```

- Is it correct to call this multilayer perceptron an RNN? Or does calling something an RNN depend only on having a sliding-window training and label set?

- Is tau 4 in this case? What do the two 10s mean in context?
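One way to read the numbers is to inspect the parameter shapes. A quick sketch (PyTorch stores an `nn.Linear` weight as `(out_features, in_features)`; default initialization is used here instead of the book's `init_weights`):

```python
from torch import nn

net = nn.Sequential(nn.Linear(4, 10), nn.ReLU(), nn.Linear(10, 1))
for name, p in net.named_parameters():
    print(name, tuple(p.shape))
# 0.weight (10, 4): 4 input features (the tau past values) -> 10 hidden units
# 0.bias (10,)
# 2.weight (1, 10): 10 hidden units -> 1 output
# 2.bias (1,)
```

So the first 10 is the width of the hidden layer and the second 10 has to match it. Note that nothing in this model is carried from one time step to the next; each prediction sees only its own tau-length window, which in the usual terminology makes it an autoregressive MLP rather than an RNN.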

A few questions about the max-steps section:

- Are you predicting a sequence whose length is the step size, or are you shifting each window by the step size?
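Here is a sketch of how I read k-step-ahead prediction. It is an illustration in NumPy, not the book's code, and the `predict` callback is a hypothetical one-step model. Windows start at every time step (so they are shifted by one), and from each window the one-step model is applied up to `max_steps` times, feeding its own outputs back in.

```python
import numpy as np

def k_step_ahead(x, tau, max_steps, predict):
    """preds[j, k] is the (k+1)-step-ahead prediction of x[j + tau + k],
    made from the observed window x[j : j + tau]."""
    n = len(x) - tau - max_steps + 1                             # number of start positions
    window = np.stack([x[i:i + n] for i in range(tau)], axis=1)  # (n, tau), row j = x[j:j+tau]
    preds = np.empty((n, max_steps))
    for k in range(max_steps):
        nxt = predict(window)  # (n,) one-step predictions
        preds[:, k] = nxt
        # slide every window forward by one, appending the model's own prediction
        window = np.concatenate([window[:, 1:], nxt[:, None]], axis=1)
    return preds

# Usage with a toy linear-extrapolation "model" (hypothetical)
x = np.arange(20.0)
preds = k_step_ahead(x, tau=4, max_steps=3, predict=lambda w: 2 * w[:, -1] - w[:, -2])
print(preds.shape)  # (14, 3)
print(preds[0])     # [4. 5. 6.]
```

Column k of `preds` collects all (k+1)-step-ahead predictions, which is what you would plot against the ground truth for each step size.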

I’m confused about this code in Chapter 9.1. If I understand correctly, our features should be T - tau fragments of length tau; why are the features here actually tau fragments of length T - tau?

```python
def get_dataloader(self, train):
    features = [self.x[i : self.T-self.tau+i] for i in range(self.tau)]
    self.features = torch.stack(features, 1)
    self.labels = self.x[self.tau:].reshape((-1, 1))
    i = slice(0, self.num_train) if train else slice(self.num_train, None)
    return self.get_tensorloader([self.features, self.labels], train, i)
```

`torch.stack(features, 1)` converts the `features` list, which you can think of as having shape (4, 996), into a tensor of shape (996, 4), which means 996 samples with 4 features each.
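A small check of that reading, assuming the book's `T = 1000` and `tau = 4` and a stand-in series whose values equal their time index. The list holds `tau` shifted copies of the series, each of length `T - tau`; stacking them along dimension 1 yields `T - tau` rows, where row `j` is the window `x[j : j + tau]` and its label is `x[j + tau]`.

```python
import torch

T, tau = 1000, 4
x = torch.arange(T, dtype=torch.float32)  # stand-in series: value == time index

# Same construction as get_dataloader: tau shifted slices, each of length T - tau
features = [x[i : T - tau + i] for i in range(tau)]
print(len(features), features[0].shape)  # 4 torch.Size([996])

features = torch.stack(features, 1)      # column i is the i-th shifted slice
labels = x[tau:].reshape((-1, 1))
print(features.shape, labels.shape)      # torch.Size([996, 4]) torch.Size([996, 1])
print(features[0], labels[0])            # the window x[0:4] and its target x[4]
```

So the "tau fragments of length T - tau" are the columns, and the T - tau windows of length tau you expected are the rows.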

Is this correct? IMO, ‘overestimate’ should be replaced by ‘underestimate’. If we rely on statistics, we are unlikely to encounter the infrequent words, so we will conclude that they have zero or close to zero occurrences. That is why the use of ‘overestimate’ is perplexing.

“This should already give us pause for thought if we want to model words by counting statistics. After all, we will significantly overestimate the frequency of the tail, also known as the infrequent words.”

https://d2l.ai/chapter_recurrent-neural-networks/text-sequence.html

9.1. Working with Sequences

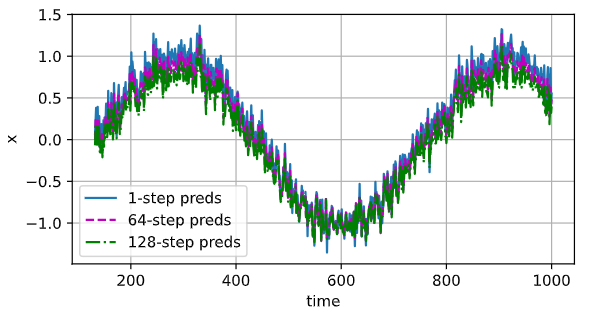

I’m quite amazed at how badly the model performs with this extra nonlinear layer added. Does anyone understand why it is so bad now? (Trained for 10 epochs.)

This is all I changed in the net:

```python
self.net = nn.Sequential(
    nn.LazyLinear(10),  # hidden layer with 10 outputs; the input size (tau = 4) is inferred lazily
    nn.ReLU(),          # non-linear activation
    nn.LazyLinear(1)    # output layer producing 1 output
)
```

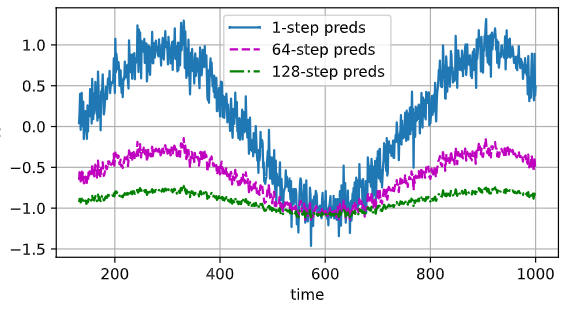
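For reference, a small sketch of how `nn.LazyLinear` behaves in this net, assuming a batch of 4-feature windows as in the section. Only the output size is given; the input size is inferred on the first forward pass. So `LazyLinear(10)` is a hidden layer with 10 outputs, and the second `LazyLinear(1)` ends up with 10 inputs.

```python
import torch
from torch import nn

net = nn.Sequential(nn.LazyLinear(10), nn.ReLU(), nn.LazyLinear(1))
X = torch.randn(8, 4)  # hypothetical batch: 8 windows of tau = 4 values
y = net(X)             # the first call materializes the weights
print(net[0].in_features, net[0].out_features)  # 4 10
print(net[2].in_features, net[2].out_features)  # 10 1
print(y.shape)                                  # torch.Size([8, 1])
```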

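```python
from d2l import torch as d2l

# Data, model, data and trainer come from the section's earlier code (not shown here).

def change(features, preds, i):
    # Row r+1's last entry is the value that row r predicts, so replace it
    # with the model's prediction (for rows i+1 onward).
    features[i+1:, -1] = preds[i:-1, :].reshape(-1)
    return features

def shift(features, j, i):
    # Copy column j, one row down, into column j-1 (for rows i+1 onward).
    features[i+1:, j-1] = features[i:-1, j]
    return features

@d2l.add_to_class(Data)
def insert_kth_pred(self, pred, k):
    # Move previously inserted predictions one position older in each window.
    for i in range(1, k):
        self.features = shift(self.features, i, self.tau-i)
    # Insert the newest predictions into the most recent position.
    self.features = change(self.features, pred, k-1)

for i in range(4):
    preds = model(data.features).detach()
    data.insert_kth_pred(preds, i+1)
    trainer.fit(model, data)
```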
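The `shift` helper above appears to rely on a property of the sliding-window feature matrix: since row `r` holds `x[r : r + tau]`, entry `(r, j - 1)` equals entry `(r - 1, j)`. A quick check of that property, assuming the same `(T - tau, tau)` layout as the section's `get_dataloader` and a random stand-in series:

```python
import torch

T, tau = 20, 4
x = torch.randn(T)  # stand-in series (assumption)
features = torch.stack([x[i : T - tau + i] for i in range(tau)], 1)  # (T - tau, tau)

# Column j-1 shifted down one row equals column j: features[r, j-1] == features[r-1, j]
for j in range(1, tau):
    assert torch.equal(features[1:, j - 1], features[:-1, j])
print("window property holds")
```

Because of this property, once a prediction is written into the last column, shifting it diagonally keeps every window consistent with the same (partly predicted) series.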