Thanks for your answer. But I'm still wondering about something:


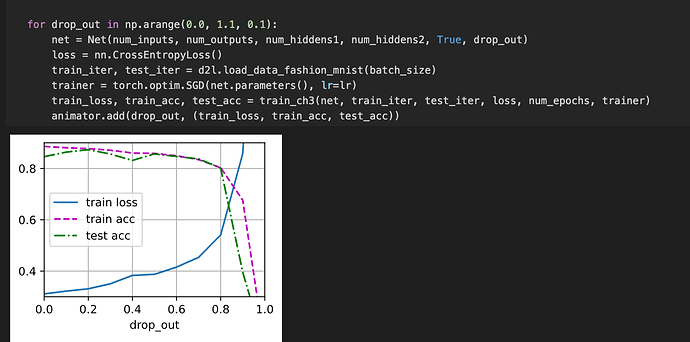

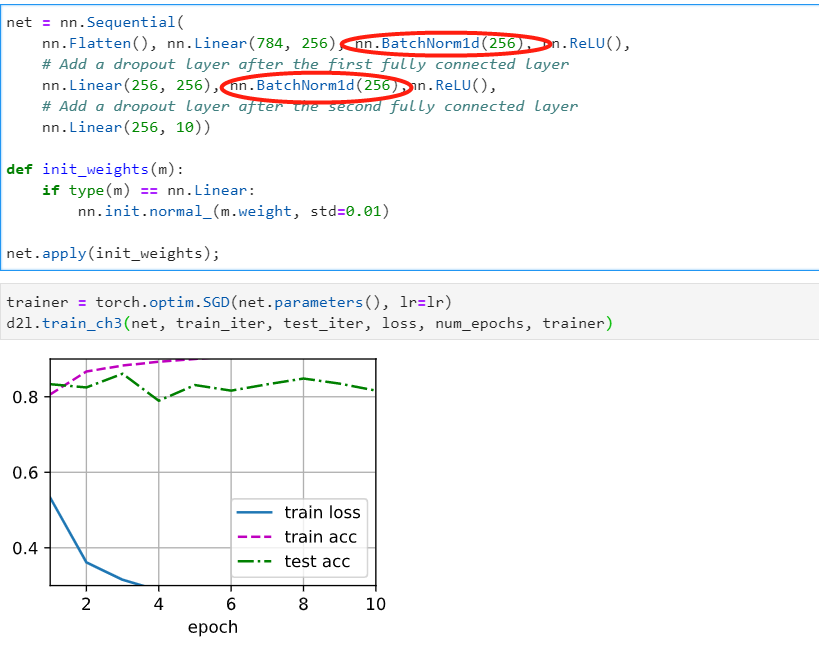

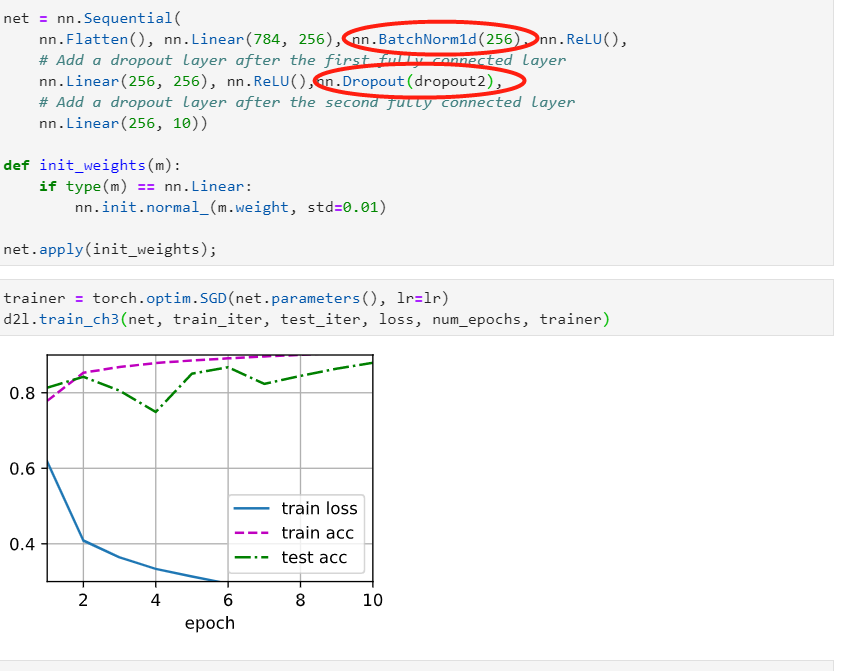

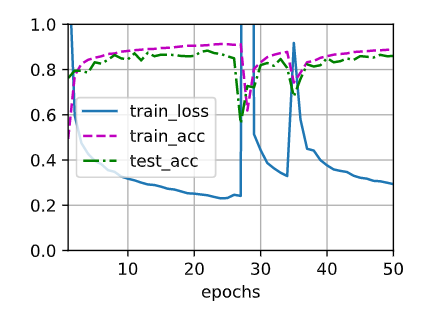

How do we disable Dropout during testing in this book's implementation, either from scratch or with the high-level API? In the from-scratch implementation, I found that the same `net` is used for both training and testing, and its attribute `is_training` is set to `True`. In the high-level API implementation, I also couldn't find any difference in how the Dropout layer is used during training and testing.

So could you please tell me where in the code Dropout is disabled during testing?
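For reference, one common way this is wired up: in PyTorch, `nn.Module.eval()` sets `training = False` on a module and all of its submodules, and `nn.Dropout` passes its input through unchanged in that mode. An evaluation helper that calls `net.eval()` before computing accuracy would therefore switch dropout off without building a second network. The sketch below mimics that mechanism in plain Python so it runs without a framework; `TinyNet` and this `dropout_layer` signature are hypothetical, not the book's code.

```python
import random


def dropout_layer(values, p, rng):
    """Inverted dropout: zero each value with probability p and scale the
    survivors by 1 / (1 - p), so each output's expected value equals its input."""
    assert 0.0 <= p <= 1.0
    if p == 1.0:
        return [0.0 for _ in values]  # every unit is dropped
    return [0.0 if rng.random() < p else v / (1.0 - p) for v in values]


class TinyNet:
    """Hypothetical stand-in for a framework module that carries a `training` flag."""

    def __init__(self, p, seed=0):
        self.p = p
        self.training = True  # analogous to constructing the net with is_training=True
        self.rng = random.Random(seed)

    def train(self):
        self.training = True
        return self

    def eval(self):
        # In PyTorch, nn.Module.eval() flips this same kind of flag to False.
        self.training = False
        return self

    def forward(self, hidden):
        # Dropout is applied only while training; at test time it is the identity.
        if self.training:
            return dropout_layer(hidden, self.p, self.rng)
        return list(hidden)


h = [1.0, 2.0, 3.0, 4.0]
net = TinyNet(p=0.5)
print(net.forward(h))         # some entries zeroed, survivors doubled (random)
print(net.eval().forward(h))  # [1.0, 2.0, 3.0, 4.0]
```

Calling `net.train()` again before the next epoch turns dropout back on, so the same object serves both phases.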

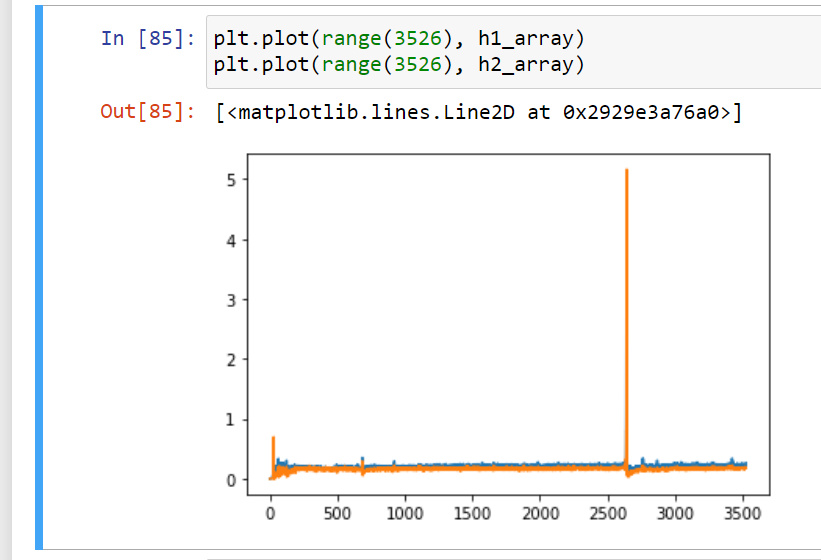

> because the weight not only corresponds to those zeros, but also other not zeros in the same hidden layer.

Does this mean each weight corresponds to multiple hidden units? I'm not sure about this, but I think that in an MLP each weight corresponds to a unique input (hidden unit) and a unique output.
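A small worked example of the per-weight view: in a dense layer `y = W h`, the weight `W[i][j]` appears only in the term `W[i][j] * h[j]` of output `y[i]`, so it links exactly one input unit to one output unit. The sketch below uses made-up numbers and a linear loss `L = sum_i c[i] * y[i]` (chosen only so that `dL/dy[i] = c[i]` is constant), computes the gradient analytically, and checks it with finite differences. For this single example, the weights attached to the dropped hidden unit get zero gradient.

```python
# Hypothetical numbers for illustration: one dense layer y = W h after dropout.
W = [[0.1, 0.2, 0.3],
     [0.4, 0.5, 0.6]]   # 2 output units x 3 hidden units; W[i][j] links hidden j -> output i
h = [1.0, 0.0, 2.0]     # hidden unit 1 was dropped, so its value is 0
c = [1.0, -1.0]         # dL/dy[i] under the linear loss L = sum_i c[i] * y[i]


def forward(W, h):
    return [sum(W[i][j] * h[j] for j in range(len(h))) for i in range(len(W))]


def loss(W, h, c):
    return sum(ci * yi for ci, yi in zip(c, forward(W, h)))


# Analytic gradient: W[i][j] only enters y[i] through W[i][j] * h[j],
# so dL/dW[i][j] = c[i] * h[j].
grad = [[c[i] * h[j] for j in range(len(h))] for i in range(len(W))]


def numeric_grad(W, h, c, i, j, eps=1e-6):
    # Central finite difference, perturbing a single weight.
    Wp = [row[:] for row in W]
    Wm = [row[:] for row in W]
    Wp[i][j] += eps
    Wm[i][j] -= eps
    return (loss(Wp, h, c) - loss(Wm, h, c)) / (2 * eps)


print(grad)  # column 1 (the dropped unit) is all zeros
```

This is per example: with a minibatch the gradients are summed over examples, so a weight whose unit was dropped in one example can still receive gradient from examples where that unit was kept.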