Hello! I have two questions about this section:

-

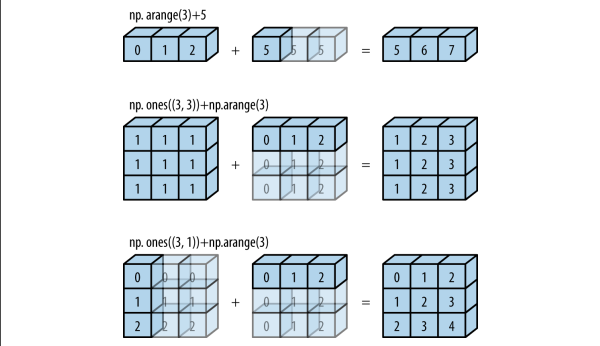

Where does the term “lifted” come from? As I understand it, a function that operates on real numbers (scalars) can be “lifted” to operate on vectors or higher-dimensional arrays. I was just curious whether this is a commonly used term in mathematics.
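To make my understanding of “lifting” concrete, here is a minimal sketch in plain Python. The `lift` helper is a hypothetical name I made up for illustration, not something from the book or any library, and it only handles flat lists:

```python
import math

def lift(f):
    """Turn a scalar function f into one that acts on every element of a list."""
    def lifted(xs):
        # Apply the scalar function to each element independently.
        return [f(x) for x in xs]
    return lifted

# math.sqrt works on a single number; the lifted version works on a list.
lifted_sqrt = lift(math.sqrt)
result = lifted_sqrt([1.0, 4.0, 9.0])
print(result)  # [1.0, 2.0, 3.0]
```

If this is the right picture, then the elementwise operators on tensors are just the lifted versions of the usual scalar operators.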

-

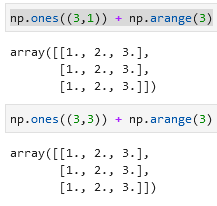

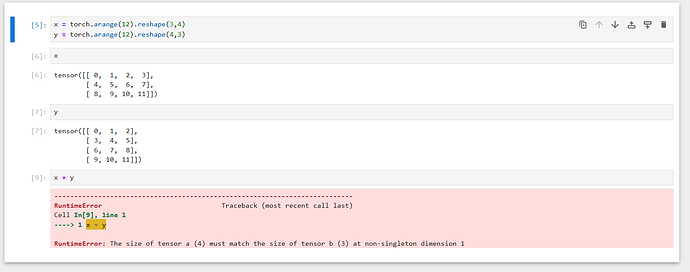

Is there a rule for determining the shape of the result of a broadcast operation? For Exercise #2, I tried adding shapes (3, 1, 1) + (1, 2, 1) and got (3, 2, 1). I also tried (3, 1, 1, 1) + (1, 2, 1) and got (3, 1, 2, 1). Broadcasting gets harder to visualize beyond 3-D, so I was wondering if someone could explain intuitively why the second broadcast operation produces the shape it does.
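For reference, here is the rule as I currently understand it, written as a small pure-Python sketch. It assumes NumPy-style broadcasting (align the shapes on the right, pad the shorter one with 1s on the left, and let a size of 1 stretch to match the other size). `broadcast_shape` is a hypothetical helper I wrote for this post, not a function from the framework:

```python
def broadcast_shape(shape_a, shape_b):
    """Return the result shape of broadcasting two shapes, NumPy-style."""
    ndim = max(len(shape_a), len(shape_b))
    # Right-align the shapes by padding the shorter one with leading 1s.
    a = (1,) * (ndim - len(shape_a)) + tuple(shape_a)
    b = (1,) * (ndim - len(shape_b)) + tuple(shape_b)
    out = []
    for da, db in zip(a, b):
        # Sizes are compatible if they are equal or if one of them is 1.
        if da != db and da != 1 and db != 1:
            raise ValueError(f"shapes {shape_a} and {shape_b} cannot be broadcast")
        # A size of 1 stretches to the other operand's size.
        out.append(db if da == 1 else da)
    return tuple(out)

print(broadcast_shape((3, 1, 1), (1, 2, 1)))     # (3, 2, 1)
print(broadcast_shape((3, 1, 1, 1), (1, 2, 1)))  # (3, 1, 2, 1)
```

Under this reading, (1, 2, 1) is first treated as (1, 1, 2, 1), which would explain why the 2 ends up in the third position of the second result. Please correct me if the actual rule differs.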

Thank you very much!