http://d2l.ai/chapter_multilayer-perceptrons/mlp-concise.html

Something strange is happening here. When I change the loss to

```python
loss = lambda y_hat, y: tf.keras.losses.sparse_categorical_crossentropy(y, y_hat, from_logits=True)
```

I get a loss greater than 1.

The same thing happens in the previous subsection, "Implementation of Multilayer Perceptron from Scratch", when I change the loss to

```python
loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
```

Again, I get a loss greater than 1.

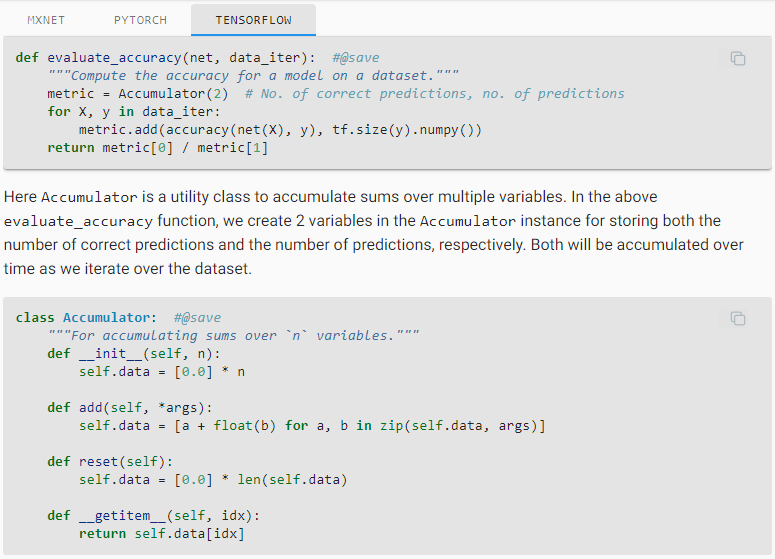

There should be no problem, because when `d2l.train_epoch_ch3` computes the loss, it checks whether the loss is our own function or an instance of a Keras loss, since Keras losses take `y_true` followed by `y_pred`. So why is this happening?
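For reference, here is a minimal sketch of the kind of argument-order check described above, assuming TensorFlow 2.x. `compute_loss` is a hypothetical helper written for illustration, not the actual body of `d2l.train_epoch_ch3`. It also makes one difference between the two loss forms visible: the functional `sparse_categorical_crossentropy` returns one value per example, while a `SparseCategoricalCrossentropy` object averages over the batch by default.

```python
import tensorflow as tf

def compute_loss(loss, y_hat, y):
    """Hypothetical helper: call `loss` with the argument order it expects."""
    # Keras Loss objects take (y_true, y_pred); the chapter's own
    # functional losses take (y_hat, y).
    if isinstance(loss, tf.keras.losses.Loss):
        return loss(y, y_hat)
    return loss(y_hat, y)

# Toy batch: 2 examples, 3 classes, raw logits (no softmax applied).
y = tf.constant([0, 2])
y_hat = tf.constant([[2.0, 1.0, 0.1],
                     [0.5, 0.5, 3.0]])

# Functional form, as in the first snippet: returns per-example losses.
fn_loss = lambda y_hat, y: tf.keras.losses.sparse_categorical_crossentropy(
    y, y_hat, from_logits=True)
# Object form, as in the second snippet: averages over the batch by default.
obj_loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

per_example = compute_loss(fn_loss, y_hat, y)  # shape (2,)
batch_mean = compute_loss(obj_loss, y_hat, y)  # scalar
print(per_example.numpy(), batch_mean.numpy())
```

Because the two forms return differently shaped results (a vector versus a batch mean), any code that later sums, averages, or differentiates the loss may treat them differently even when the argument order is handled correctly.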

Please have a look at it.

Thank you.
