http://d2l.ai/chapter_multilayer-perceptrons/mlp-concise.html

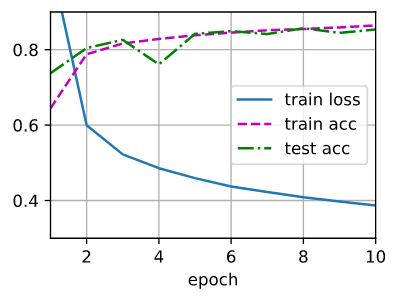

Can someone explain why there is a sudden dip in the plot?

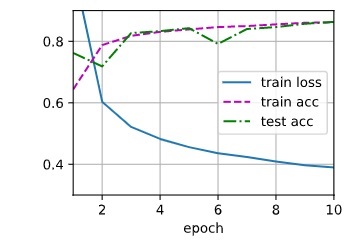

I want to know too. My sudden dip is different from yours.

According to that scale, that dip is a loss of accuracy of around 0.05.

After that epoch, the updated parameters happened to perform worse on the test set. By chance, an update can improve accuracy on the training set while reducing accuracy on the test set.

Where the dip happens (and it need not happen at all) depends on your initial parameter values.
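If you want to check the dependence on initialization yourself, here is a rough sketch. It is not the MLP from the chapter: it assumes a tiny logistic regression on synthetic noisy data, and `make_data`, `accuracy`, and `train` are names I made up for illustration. The data is held fixed and only the seed for the initial weights and the shuffle order changes, so any difference in the per-epoch accuracies comes from those two sources.

```python
import math
import random


def make_data(n, rng):
    """Synthetic 2-D binary data with noisy labels (illustration only)."""
    data = []
    for _ in range(n):
        x1, x2 = rng.gauss(0, 1), rng.gauss(0, 1)
        y = 1 if x1 + x2 > 0 else 0      # true boundary: x1 + x2 > 0
        if rng.random() < 0.15:          # flip 15% of the labels as noise
            y = 1 - y
        data.append(((x1, x2), y))
    return data


def accuracy(w, b, data):
    """Fraction of examples whose predicted class matches the label."""
    correct = 0
    for (x1, x2), y in data:
        pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
        correct += int(pred == y)
    return correct / len(data)


def train(init_seed, epochs=10, lr=0.5, batch_size=8):
    """Minibatch SGD; returns a list of (train_acc, test_acc) per epoch."""
    data_rng = random.Random(0)          # same data for every run
    train_set = make_data(200, data_rng)
    test_set = make_data(200, data_rng)

    rng = random.Random(init_seed)       # controls init and shuffle order
    w = [rng.gauss(0, 1), rng.gauss(0, 1)]
    b = 0.0
    history = []
    for _ in range(epochs):
        rng.shuffle(train_set)
        for i in range(0, len(train_set), batch_size):
            batch = train_set[i:i + batch_size]
            gw0 = gw1 = gb = 0.0
            for (x1, x2), y in batch:
                z = w[0] * x1 + w[1] * x2 + b
                z = max(-30.0, min(30.0, z))       # avoid overflow in exp
                p = 1.0 / (1.0 + math.exp(-z))     # sigmoid
                gw0 += (p - y) * x1                # cross-entropy gradient
                gw1 += (p - y) * x2
                gb += (p - y)
            w[0] -= lr * gw0 / len(batch)
            w[1] -= lr * gw1 / len(batch)
            b -= lr * gb / len(batch)
        history.append((accuracy(w, b, train_set), accuracy(w, b, test_set)))
    return history


if __name__ == "__main__":
    for seed in (1, 2, 3):
        test_curve = [round(test_acc, 3) for _, test_acc in train(seed)]
        print(f"seed {seed}: test accuracy per epoch = {test_curve}")
```

Comparing the printed curves across seeds shows whether, and at which epoch, the test accuracy drops in a given run. In the d2l notebook the analogous experiment would be fixing or changing the framework's random seed before building the network, which is also why rerunning the notebook can give a differently placed dip.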

Same for me. It's close to the meaning of "overfitting".

Same for me. But I just tried Runtime > Run all, and the output matched the one in the module. Sorry for not answering your "why" question.