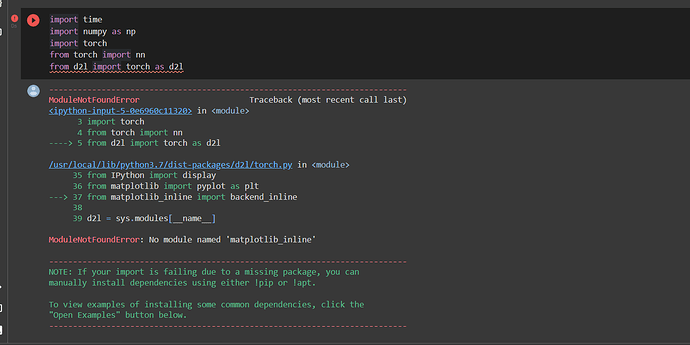

Hello everyone, I am trying to run the code in this chapter on Colab, but it always shows me this error. Of course, I have already installed the d2l package with !pip install d2l==1.0.0-alpha0.

Please help me! Thanks.

I guess this is because of a recent update in Colab. It was working before, but now you need to install matplotlib_inline manually. The GitHub issue "ModuleNotFoundError when running the official pytorch colab notebook" (Issue #2250 in d2l-ai/d2l-en) tracks the problem, and we will make a patch release soon to fix it by depending on matplotlib_inline.

For now, you can pip install matplotlib_inline to fix the bug.

For those who have issues with the d2l library: try this command, then restart the kernel.

pip install --upgrade d2l==1.0.0a0

There is no need to restart the kernel after the recent release.

Just use d2l==1.0.0-alpha1.post0

Here are my answers to the exercises:

ex.1

Use ? or ?? in jupyter notebook
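Outside Jupyter/IPython, the standard inspect module gives roughly the same information. The sketch below uses json.dumps purely as an example target: obj? roughly corresponds to the signature plus docstring, and obj?? additionally shows the source when it can be found.

```python
import inspect
import json

target = json.dumps  # any function, class, or module works here

# Roughly what `json.dumps?` shows: signature and docstring.
signature = inspect.signature(target)
doc = inspect.getdoc(target)
print(signature)
print(doc.splitlines()[0])

# Roughly what `json.dumps??` adds: the source code, when it can be located.
try:
    source = inspect.getsource(target)
except (OSError, TypeError):
    source = None  # e.g. objects implemented in C have no Python source
print(source is not None)
```

The same calls work on d2l objects such as d2l.HyperParameters.save_hyperparameters once d2l is imported.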

ex.2

We can't access attributes a and b without calling save_hyperparameters().

Running ??d2l.HyperParameters.save_hyperparameters gives:

    def save_hyperparameters(self, ignore=[]):
        """Save function arguments into class attributes.

        Defined in :numref:`sec_utils`"""
        frame = inspect.currentframe().f_back
        _, _, _, local_vars = inspect.getargvalues(frame)
        self.hparams = {k:v for k, v in local_vars.items()
                        if k not in set(ignore+['self']) and not k.startswith('_')}
        for k, v in self.hparams.items():
            setattr(self, k, v)

As the definition shows, the method first reads the local variables of the calling frame (the arguments of the __init__ that called it) and stores them as key (k) and value (v) pairs in self.hparams. It then uses setattr() to add each one as an attribute of the B instance.
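To see the mechanism in isolation, here is a minimal sketch that reimplements the same idea outside d2l. HyperParametersSketch, B, and NoSave are stand-in names for illustration; the method body follows the source quoted above but is not the library class itself.

```python
import inspect


class HyperParametersSketch:
    """Stand-in for d2l.HyperParameters (illustrative, not the library class)."""

    def save_hyperparameters(self, ignore=()):
        # Look one frame up the call stack: the __init__ that called us.
        frame = inspect.currentframe().f_back
        _, _, _, local_vars = inspect.getargvalues(frame)
        # Keep the caller's locals except 'self', ignored names, and private names.
        skip = set(ignore) | {"self"}
        self.hparams = {k: v for k, v in local_vars.items()
                        if k not in skip and not k.startswith("_")}
        # Copy every saved argument onto the instance.
        for k, v in self.hparams.items():
            setattr(self, k, v)


class B(HyperParametersSketch):
    def __init__(self, a, b, c):
        self.save_hyperparameters(ignore=["c"])


class NoSave(HyperParametersSketch):
    def __init__(self, a, b):
        pass  # the arguments are discarded when __init__ returns


obj = B(a=1, b=2, c=3)
print(obj.a, obj.b)                 # attributes created by setattr
print(hasattr(obj, "c"))            # 'c' was ignored
print(hasattr(NoSave(1, 2), "a"))   # never set, so no attribute
```

Arguments of __init__ are ordinary local variables, so they vanish when __init__ returns unless something stores them on self, which is exactly what the setattr loop does.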

Can someone explain why we can't access attributes a and b without calling save_hyperparameters()?

Is it because a and b are never set in __init__, whereas save_hyperparameters() runs the for loop below to set them?

    for k, v in self.hparams.items():
        setattr(self, k, v)

Yes. As you said, without the setattr loop in save_hyperparameters(), the attributes are never set on the instance.

I would love it if someone could explain how this piece of code works (part of the Trainer fit function):

    l = self.loss(self(*batch[:-1]), batch[-1])

How come we can call self()? And what is *batch[:-1]? What does the * mean?

Thank you.

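self(X) is the same as module.forward(X): calling an instance invokes its __call__ method, which nn.Module implements so that it runs forward. See https://stackoverflow.com/questions/73991158/pytorch-lightning-whats-the-meaning-of-calling-self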
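A minimal sketch of why self(...) works, using a plain-Python stand-in instead of torch.nn.Module. ModuleSketch and Doubler are hypothetical names; the real nn.Module.__call__ does more work (for example, it runs registered hooks) before calling forward.

```python
class ModuleSketch:
    """Simplified stand-in for torch.nn.Module (illustrative only)."""

    def __call__(self, *args, **kwargs):
        # Calling the instance like a function dispatches to forward().
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class Doubler(ModuleSketch):
    def forward(self, x):
        return 2 * x

    def predict(self, x):
        # Inside a method, self(x) is the same call as model(x) from outside.
        return self(x)


model = Doubler()
print(model(3), model.forward(3), model.predict(3))
```

With real PyTorch modules, calling the instance is generally preferred over calling forward directly, so that the extra machinery in __call__ is not skipped.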

Hello everyone, I am trying to run the code locally, starting with these imports:

    import time
    import numpy as np
    import torch
    from torch import nn

They work fine, with no apparent module corruption or errors, until I run

    from d2l import torch as d2l

which results in the following error:

    ---------------------------------------------------------------------------
    AttributeError                            Traceback (most recent call last)
    Cell In[10], line 1
    ----> 1 from d2l import torch as d2l

    File e:\Code Repository\Laboratory\Lab3\Lab3\Lib\site-packages\d2l\torch.py:6
          4 import numpy as np
          5 import torch
    ----> 6 import torchvision
          7 from PIL import Image
          8 from torch import nn

    File e:\Code Repository\Laboratory\Lab3\Lab3\Lib\site-packages\torchvision\__init__.py:6
          3 from modulefinder import Module
          5 import torch
    ----> 6 from torchvision import _meta_registrations, datasets, io, models, ops, transforms, utils
          8 from .extension import _HAS_OPS
         10 try:

    File e:\Code Repository\Laboratory\Lab3\Lab3\Lib\site-packages\torchvision\models\__init__.py:2
          1 from .alexnet import *
    ----> 2 from .convnext import *
          3 from .densenet import *
          4 from .efficientnet import *
    ...
       2000 import importlib
       2001 return importlib.import_module(f".{name}", __name__)
    -> 2003 raise AttributeError(f"module '{__name__}' has no attribute '{name}'")

    AttributeError: module 'torch' has no attribute 'version'

My installation is torch-2.3.0, torchaudio-2.3.0, and torchvision-0.18.0, running on CPU only, and the d2l version is 1.0.3. Before this, the d2l module also raised an error involving pillow, which I resolved just by reinstalling it.

I have tried reinstalling and checking dependencies, but cannot find any way to solve this. Please help!

The * denotes the starred expression in Python. It unpacks a list into its individual elements. In this example, you can think of batch as a list with two elements: the first is the feature Tensor and the second is the label Tensor. Here, batch[:-1] slices a sublist of batch (which contains only one element, the feature Tensor). The starred expression then unpacks that sublist, so the feature Tensor is passed to self(...) as a single positional argument.

For more details on the starred expression, see "Starred Expression in Python" on GeeksforGeeks.
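The unpacking can be tried with plain lists instead of Tensors. In this sketch, loss and model are hypothetical stand-ins for self.loss and self(...), and the numbers are made up for illustration.

```python
def loss(y_hat, y):
    # Hypothetical stand-in for self.loss: mean absolute error.
    return sum(abs(p - t) for p, t in zip(y_hat, y)) / len(y)


def model(X):
    # Hypothetical stand-in for self(...): takes exactly one positional argument.
    return [2 * x for x in X]


batch = [[1.0, 2.0, 3.0], [2.0, 4.0, 7.0]]  # [features, labels]

inputs = batch[:-1]   # still a list: [[1.0, 2.0, 3.0]]
labels = batch[-1]    # the last element: [2.0, 4.0, 7.0]

# model(*inputs) is model(inputs[0]); the * spreads the list into arguments.
l = loss(model(*batch[:-1]), batch[-1])
print(inputs, labels, l)
```

Writing the call with batch[:-1] and * also covers batches that carry several inputs before the label, since each one becomes its own positional argument.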

Did you follow the installation instructions on the "Installation" page of the Dive into Deep Learning 1.0.3 documentation? How about installing torch 2.0.0 and torchvision 0.15.1?
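Before reinstalling, it may help to confirm which versions the active environment actually sees. This is a small sketch using only the standard library; installed_version is a helper name made up here, and the package names are the ones mentioned in the post above.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Package names taken from the post above.
for name in ("torch", "torchvision", "torchaudio", "d2l", "pillow"):
    print(f"{name}: {installed_version(name)}")
```

Run it in the same kernel that raises the error, since a notebook kernel can point at a different environment than the terminal where pip was run.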

In 3.2.2. Models, this makes sense:

assert hasattr(self, 'trainer'), 'Trainer is not inited'

Whereas this assert message seems backwards:

assert hasattr(self, 'net'), 'Neural network is defined'

Am I reading this incorrectly?

I thought exactly the same when I first read it. It should be something like ‘Neural network is not defined’.
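A quick sketch of why the wording matters: the message of an assert is only shown when the condition is False, so it should describe the failure. Model and check are hypothetical names; only the assert pattern comes from the book.

```python
class Model:
    """Hypothetical stand-in; only the assert pattern matters here."""

    def check(self):
        # The message is shown only when the condition is False,
        # so it should describe the failure.
        assert hasattr(self, 'net'), 'Neural network is not defined'
        return True


m = Model()
try:
    m.check()
except AssertionError as err:
    message = str(err)
    print(message)

m.net = object()   # once the attribute exists, the assert passes silently
print(m.check())
```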

I think the "class attribute" terminology is somewhat incorrect. It should say "instance attribute", not "class attribute". A class attribute belongs to the class and is shared by all instances of that class, whereas an instance attribute belongs to a single instance.
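The difference is easy to check in a few lines. Counter is a made-up class for illustration; the setattr call mirrors what save_hyperparameters does with self.

```python
class Counter:
    shared = 0            # class attribute: one value stored on the class

    def __init__(self, start):
        self.value = start   # instance attribute: one value per object


a, b = Counter(1), Counter(2)
Counter.shared = 10          # visible through every instance
print(a.shared, b.shared)
print(a.value, b.value)

setattr(a, "extra", "only on a")   # setattr(self, k, v) works the same way
print(hasattr(a, "extra"), hasattr(b, "extra"), hasattr(Counter, "extra"))
```

Because setattr(self, k, v) targets one object, the saved hyperparameters end up as instance attributes.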