https://d2l.ai/chapter_natural-language-processing-pretraining/bert-pretraining.html

In the experiment, we can see that the masked language modeling loss is significantly higher than the next sentence prediction loss. Why?

is it because the MLM task much more difficult than the NSP task?

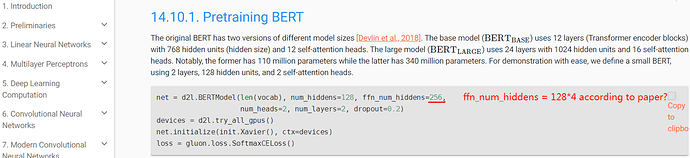

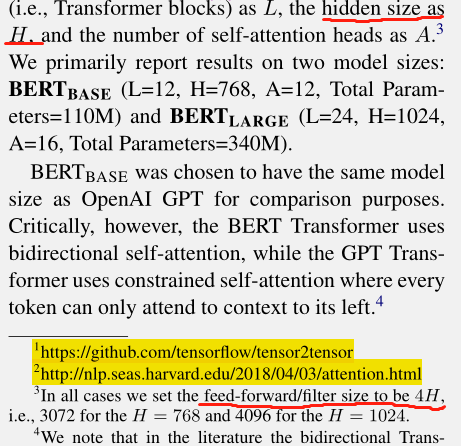

Thanks. This is just a tunable hyperparameter. For demonstration purpose, we define a small BERT and set it to 2H so users can run it locally and quickly see the results.

Hint: NSP is binary classification. How about MLM?

MLM is a multi classification task and the vocab_size is big. In the loss function -logP corresponding to the label could not be optimized as small as in the binary classification task. That’s why the MLM loss much lager than that of the NSP task. Is it right? @astonzhang

2 Likes