https://d2l.ai/chapter_linear-classification/softmax-regression-scratch.html

I have a question about the TensorFlow code.

In the function train_epoch_ch3, the updater object calls the method apply_gradients.

However, I found that this method is not defined in the Updater class. How can that be?

Thank you.

I think apply_gradients is only called when the updater is an instance of tf.keras.optimizers.Optimizer. In this chapter, we use a custom updater, so that call is never reached.

In train_epoch_ch3, what is net.trainable_variables referring to? The net function defined earlier in 3.6.3 does not have a trainable_variables attribute.

net.trainable_variables is for the case where you use a Keras network, which happens in section 3.7. I agree that it is a bit confusing to write train_epoch_ch3 so that it works with both sections 3.6 and 3.7.
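
A minimal sketch of the dispatch described in the two replies above, assuming the update step branches on the updater type with `isinstance`. The names `Updater`, `train_step`, and `squared_loss` are illustrative stand-ins and are not copied from the book.

```python
import tensorflow as tf

class Updater:
    """Hypothetical custom updater: plain minibatch SGD, no apply_gradients."""
    def __init__(self, params, lr):
        self.params = params
        self.lr = lr

    def __call__(self, batch_size, grads):
        # Update each parameter in place with the averaged gradient.
        for param, grad in zip(self.params, grads):
            param.assign_sub(self.lr * grad / batch_size)

def train_step(net, loss_fn, updater, X, y):
    """One update that accepts either a Keras optimizer or a custom updater."""
    with tf.GradientTape() as tape:
        l = loss_fn(net(X), y)
    if isinstance(updater, tf.keras.optimizers.Optimizer):
        # Keras path: net must be a Keras model exposing trainable_variables.
        params = net.trainable_variables
        updater.apply_gradients(zip(tape.gradient(l, params), params))
    else:
        # Scratch path: net is a plain function; the updater holds the params.
        updater(X.shape[0], tape.gradient(l, updater.params))
    return l

W = tf.Variable(tf.zeros((2, 1)))
b = tf.Variable(tf.zeros(1))

def net(X):
    return tf.matmul(X, W) + b

def squared_loss(y_hat, y):
    return tf.reduce_sum((y_hat - y) ** 2)

X = tf.constant([[1.0, 2.0], [3.0, 4.0]])
y = tf.constant([[1.0], [2.0]])
updater = Updater([W, b], lr=0.01)
loss_before = train_step(net, squared_loss, updater, X, y)
loss_after = squared_loss(net(X), y)
```

With the custom updater, only the `else` branch runs, so neither `apply_gradients` nor `net.trainable_variables` is ever accessed.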

Hi,

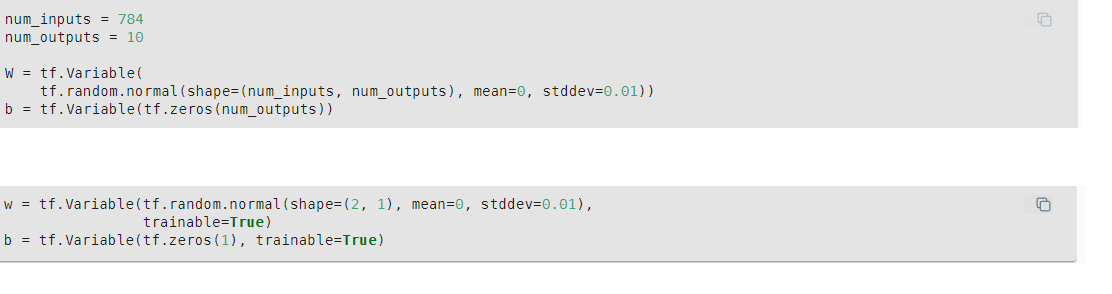

Are we to assume that all variables declared with tf.Variable are implicitly treated as trainable variables?

I ask because the weights and biases are declared inconsistently between the linear and softmax implementations.

In the linear neural network implementation, the weights and biases are explicitly declared as trainable, whereas in the softmax implementation they are not.
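
A small sketch that can be used to check this. `tf.Variable` takes a `trainable` argument that defaults to `True`, and `tf.GradientTape` automatically watches trainable variables. The variable names below are made up for illustration.

```python
import tensorflow as tf

w_explicit = tf.Variable(tf.zeros((2, 1)), trainable=True)  # declared as trainable
w_default = tf.Variable(tf.zeros((2, 1)))                   # trainable not specified
w_frozen = tf.Variable(tf.ones((2, 1)), trainable=False)    # explicitly not trainable

x = tf.constant([[1.0, 2.0]])
with tf.GradientTape() as tape:
    out = tf.reduce_sum(
        tf.matmul(x, w_explicit) + tf.matmul(x, w_default) + tf.matmul(x, w_frozen)
    )

# The tape watches trainable variables only, so the frozen one yields None.
grads = tape.gradient(out, [w_explicit, w_default, w_frozen])
print(w_default.trainable)
print([g is not None for g in grads])
```

So passing `trainable=True` explicitly and omitting it should behave the same way; only `trainable=False` changes what the tape records.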

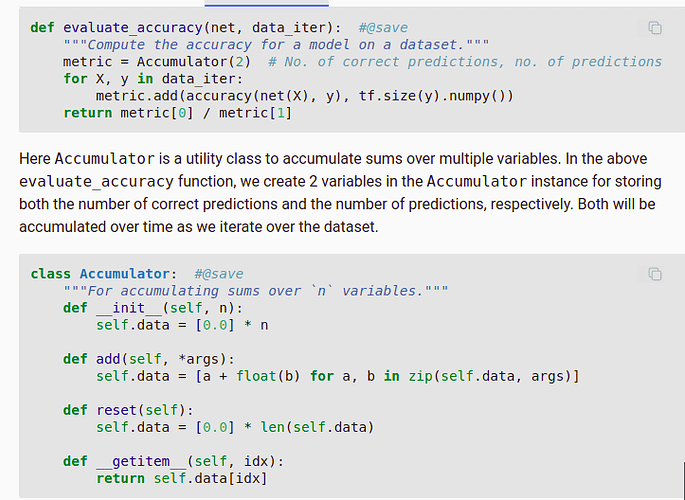

Could someone please help me understand the lines in the screenshot? I understand how to implement it; I just don't understand how the whole code unfolds.

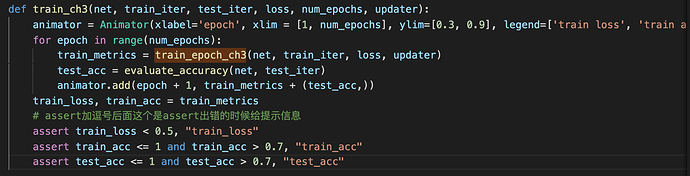

I have a question about the assert statements in the function train_ch3.

The second expression given to the assert statement is a hint message. Would it be more appropriate to change it into string format, like below?

Hello,

The hint part of the assert (after the comma) doesn't necessarily have to be a string.

If you put any Python object there, it is printed when the assert condition is False, that is, when the assertion fails.

In the chapter's example, the intent is to print the value of the metric.

Ronaldo
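
The behaviour described in the reply above can be checked with a short sketch. The function `check_loss` and the threshold are hypothetical, chosen only to mimic an assert that carries a metric value as its message.

```python
def check_loss(train_loss, threshold=0.5):
    # The expression after the comma may be any object, here a float.
    assert train_loss < threshold, train_loss

check_loss(0.3)  # condition holds, nothing is printed or raised

try:
    check_loss(0.9)  # condition is False, so AssertionError carries the value
except AssertionError as err:
    message = str(err)
    print("assertion failed with:", message)
```

Note that assert statements are skipped entirely when Python runs with the `-O` flag.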