https://zh.d2l.ai/chapter_multilayer-perceptrons/dropout.html

num_epochs, lr, batch_size = 10, 0.5, 256

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

trainer = tf.keras.optimizers.SGD(learning_rate=lr)

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

The training here never uses the `training` argument defined in the `Net` class above, so changing the dropout parameters has no effect?

I have the same impression. Can anyone confirm?

Also, if I want to pass the `training` argument in, how should I do it?

Here is how I tried passing the `training` argument:

dropout1, dropout2 = 0.2, 0.5
# dropout1, dropout2 = 0.0, 0.0

class Net(tf.keras.Model):
    def __init__(self, num_outputs, num_hiddens1, num_hiddens2, training):
        super().__init__()  # What does this line do? It runs the parent class's initializer
        self.input_layer = tf.keras.layers.Flatten()
        self.hidden1 = tf.keras.layers.Dense(num_hiddens1, activation="relu")
        self.hidden2 = tf.keras.layers.Dense(num_hiddens2, activation="relu")
        self.output_layer = tf.keras.layers.Dense(num_outputs)
        # Note: the flag is fixed at construction time, so dropout stays on
        # for every forward pass of this instance, including evaluation
        self.training = training

    def call(self, inputs):
        x = self.input_layer(inputs)
        x = self.hidden1(x)
        # print("x:", x)
        # Use dropout only when training the model
        if self.training:
            # Add a dropout layer after the first fully connected layer
            # print(x.shape)
            x = dropout_layer(x, dropout1)
            # print("dropout(x):", x)
        x = self.hidden2(x)
        if self.training:
            # Add a dropout layer after the second fully connected layer
            x = dropout_layer(x, dropout2)
        x = self.output_layer(x)
        return x

net = Net(num_outputs, num_hiddens1, num_hiddens2, True)

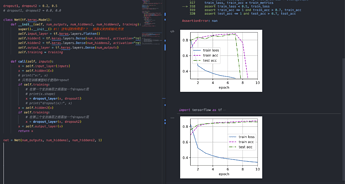

After this change, dropout takes effect and the model trains normally.
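For comparison, another way to answer the "how do I pass `training`" question is to make it a per-call keyword instead of a constructor argument, so dropout can be switched on for training and off for evaluation on the same model. The following is only a minimal sketch under several assumptions: it swaps the thread's `dropout_layer` helper for the built-in `tf.keras.layers.Dropout`, the `train_step` function is a hypothetical stand-in (it is not `d2l.train_ch3`), and the layer sizes and random batch are made up for illustration.

```python
import tensorflow as tf


class Net(tf.keras.Model):
    """MLP whose dropout is switched per call through `training`."""

    def __init__(self, num_outputs, num_hiddens1, num_hiddens2,
                 dropout1=0.2, dropout2=0.5):
        super().__init__()
        self.input_layer = tf.keras.layers.Flatten()
        self.hidden1 = tf.keras.layers.Dense(num_hiddens1, activation="relu")
        # Assumption: built-in Dropout replaces the thread's dropout_layer helper
        self.drop1 = tf.keras.layers.Dropout(dropout1)
        self.hidden2 = tf.keras.layers.Dense(num_hiddens2, activation="relu")
        self.drop2 = tf.keras.layers.Dropout(dropout2)
        self.output_layer = tf.keras.layers.Dense(num_outputs)

    def call(self, inputs, training=None):
        x = self.input_layer(inputs)
        # Dropout layers drop units only when training is True
        x = self.drop1(self.hidden1(x), training=training)
        x = self.drop2(self.hidden2(x), training=training)
        return self.output_layer(x)


def train_step(net, X, y, loss, trainer):
    """Hypothetical single update step that explicitly enables dropout."""
    with tf.GradientTape() as tape:
        y_hat = net(X, training=True)  # the flag is passed here, per call
        l = loss(y, y_hat)
    grads = tape.gradient(l, net.trainable_variables)
    trainer.apply_gradients(zip(grads, net.trainable_variables))
    return l


# Illustrative sizes and a random batch shaped like Fashion-MNIST images
net = Net(num_outputs=10, num_hiddens1=256, num_hiddens2=256)
X = tf.random.normal((4, 28, 28))
y = tf.constant([0, 1, 2, 3])
loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
trainer = tf.keras.optimizers.SGD(learning_rate=0.5)

batch_loss = train_step(net, X, y, loss, trainer)

# With training=False dropout is inactive, so repeated calls agree
eval_a = net(X, training=False)
eval_b = net(X, training=False)
```

Compared with storing the flag in `__init__`, this keeps a single model instance usable for both phases: the training loop calls `net(X, training=True)`, while evaluation code calls `net(X, training=False)`.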