https://d2l.ai/chapter_attention-mechanisms-and-transformers/attention-pooling.html

Hi,

It’s Watson (not Waston).

(Otherwise, enjoying the book).

A better idea was proposed by Nadaraya [Nadaraya, 1964] and Waston

Hello,

Will tensorflow code be availbe for these sections as well (and in other chapters that miss them)?

Hello, everyone, I hope everyone’s having a good day.

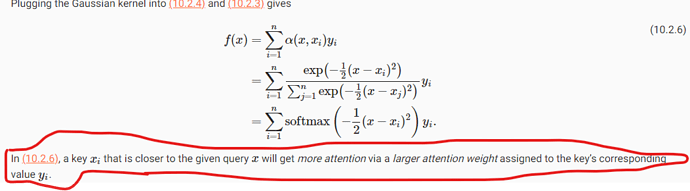

I was wondering why the circled statement is true. Because both the denominator and numerator are negative, the end-result fraction is actually positive. So, should it not be the farther away the query x is, the higher the attention weight?

The denominator and numerator are both positive, because the negative square is passed into exp(). Larger different between xi and x will lead to larger squared difference, and then smaller exp value, and finally smaller weights.