Answers:

- For a sequence it should be a single char value if the batch_size is 1?

- Because we store all such relation as a latent model and while training we learn the weights?

- Vanish?

- Since, it is only char based language model, we can’t learn correlation between words?

Exercises

-

If we use an RNN to predict the next character in a text sequence, what is the required dimension for any output?

dimension equal to text sequence. -

Why can RNNs express the conditional probability of a token at some time step based on all

the previous tokens in the text sequence?

I wonder why. I dont think I ve understood language probability too well. -

What happens to the gradient if you backpropagate through a long sequence?

Reaches 0 -

What are some of the problems associated with the language model described in this section?

We cant find the correlation between the words

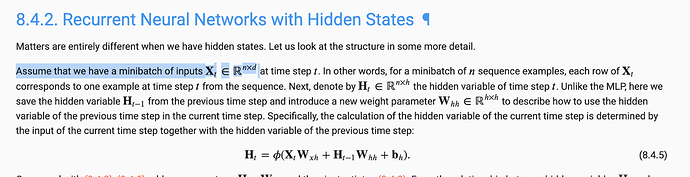

Question on equations (8.4.3) & (8.4.5) wrt the dimensions as they don’t seem to add up, so what is the activation function doing?

For (8.4.3), observe

X_t @ W_xh + b_h = (n \times d) * ( d \times h ) + (1 \times h) = (n \times h) + (1 \times h)

What does the activation function do to the right hand side such that the result is (n \times h)?

Same question for (8.4.5).

Exercises:

-

If each token is represented by a d-dimensional vector in an RNN, and batch-size n = 1,

then output vector is (1 \times q), where q is the number of time steps(?). The d-dimension seems irrelevant. -

We rely on the hidden state to store a seemingly infinite amount of information from previous time steps, i.e., we use the weights matrix to store information allocated from the previous time steps. This is relevant to note because the conditional probability of a token in an RNN is defined using the hidden state at the previous time step. See beginning of 8.4. & 8.4.2.

-

The activation function’s numerical stability will determine what happens to the gradient if one backpropagates through a long sequence. If it’s sigmoid, then it will go to zero (see 4.8.1.1. Vanishing Gradients).

-

The language model described in this section may not do well on longer sequences or properly capture semantic information. (I’ll edit when I figure out why).

For the shape of W_hh, does it have to be a ‘square’, in this case 4 x 4 ?

To multiply the matrices, a 4 x1 W_hh will also do. any reason for that ?

Thanks.

- What are some of the problems associated with the language model described in this section?

- Whh may not capture the latent variable information of long sequences of chars

-

- also, may not capture the latent variable of … i think of examples like people names, all sequences are combinations of almost same words but in different domains.

- we may increase Whh size proportional to sequence length.