StevenJokes

1. When test accuracy increases most quickly and reaches the highest value, can we say that this hyperparameter value is the best?


Unless you are sure the objective being optimized is convex, we hardly ever call a model or a set of hyperparameters the “best” one.
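A small illustration of why convexity matters, assuming a made-up one-dimensional function f(x) = x⁴ − 3x² + x (hypothetical, chosen only because it has two local minima). Plain gradient descent with the same learning rate ends up in a different minimum depending on where it starts, so "it converged well" does not mean "it found the best solution":

```python
# Toy non-convex function with two local minima (hypothetical, for illustration).
def f(x):
    return x**4 - 3 * x**2 + x

def grad_f(x):
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0, lr=0.01, steps=1000):
    """Plain gradient descent starting from x0."""
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x

x_left = gradient_descent(-2.0)   # ends near x = -1.30 (the lower minimum)
x_right = gradient_descent(2.0)   # ends near x = 1.13 (a worse local minimum)
print(f"start -2 -> x={x_left:.3f}, f={f(x_left):.3f}")
print(f"start  2 -> x={x_right:.3f}, f={f(x_right):.3f}")
```

For a convex function both runs would reach the same minimum; here the run started at 2.0 settles in the worse one.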

```python
def net(X):
    X = X.reshape((-1, num_inputs))
    H = relu(X@W1 + b1)  # Here '@' stands for matrix multiplication
    return (H@W2 + b2)
```

In the last line, shouldn’t we have applied the softmax function to the return value H@W2 + b2? Isn’t there a chance that this operation would yield a negative value or a value greater than 1?

When I do use the softmax function, the train loss disappears and the accuracy suddenly drops to 0. What could be the cause of this?


We pass the output of net(X) directly to the cross-entropy loss function, which applies softmax internally, so net(X) does not need to return values in (0, 1).
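A minimal sketch in plain Python (not PyTorch's actual implementation) of why raw outputs are fine: cross-entropy computed directly on the logits with the log-sum-exp trick equals −log of the softmax probability of the true class. The function names here are hypothetical:

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_from_logits(logits, label):
    """Cross-entropy on raw logits: log-sum-exp(logits) - logits[label]."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

logits = [2.0, -1.0, 0.5]   # raw outputs: negative values and values > 1 are fine
label = 0
print(cross_entropy_from_logits(logits, label))
print(-math.log(softmax(logits)[label]))   # same value, computed via softmax
```

Working on logits avoids taking the log of a probability that may underflow to 0.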


Could you show us the code so that we can reproduce the results?

What’s your IDE? I’m curious. Thanks.

I think it’s VS Code with a plugin for viewing notebooks.


@Xiaomut

I am using vscode, and I can’t find this.

@Kushagra_Chaturvedy

@ccpvirus

We use softmax to compute the probabilities first, and then pick the class with the maximum probability.

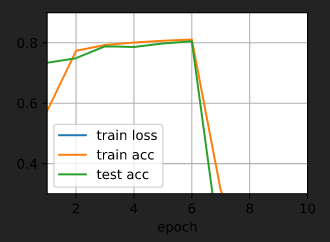

Hi @ccpvirus, as @StevenJokes mentioned, we use the class with the “maximum” value across the 10 class outputs as our final label. Since softmax is just a monotonic “rescaling” function, it doesn’t change which class has the maximum value, so the predicted label (i.e., the class label) is the same with or without it. Let me know whether this is clear to you.
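A quick check of this point in plain Python, using ten made-up class scores (hypothetical numbers): softmax preserves the ordering of its inputs, so the index of the largest logit and the index of the largest probability coincide.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])

# Ten raw class scores (made-up numbers for illustration).
logits = [0.3, -1.2, 2.7, 0.0, 1.1, -0.4, 2.5, 0.9, -2.0, 1.8]
probs = softmax(logits)
print(argmax(logits), argmax(probs))  # 2 2
```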

Dear all, may I know why we use torch.randn to initialize the parameters here instead of torch.normal as in the softmax regression implementation? Are there any advantages? Or is there actually no big difference, so we can use either? Thanks.

```python
W1 = nn.Parameter(torch.randn(
    num_inputs, num_hiddens, requires_grad=True) * 0.01)
```

@Gavin

For a standard normal distribution (i.e., mean=0 and variance=1), you can use torch.randn.

For your case of a custom mean and std, you can use torch.normal.
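A sketch of why the two are interchangeable here, using Python's `random` module as a stand-in for PyTorch: scaling standard-normal draws by 0.01 (what `torch.randn(...) * 0.01` does) gives the same distribution as sampling N(0, 0.01²) directly, which is what `torch.normal(0.0, 0.01, size=(num_inputs, num_hiddens))` should produce. The sample size and seed below are arbitrary choices:

```python
import random
import statistics

rng = random.Random(0)
mean, std = 0.0, 0.01
n = 100_000

# Scale and shift standard-normal draws (analogous to torch.randn(...) * 0.01) ...
scaled = [rng.gauss(0.0, 1.0) * std + mean for _ in range(n)]
# ... versus drawing from N(mean, std**2) directly (analogous to torch.normal).
direct = [rng.gauss(mean, std) for _ in range(n)]

print(statistics.mean(scaled), statistics.stdev(scaled))
print(statistics.mean(direct), statistics.stdev(direct))
```

Both empirical standard deviations come out close to 0.01, so the choice is mostly a matter of convenience.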

Hello. Can you please advise why 0.01 is being multiplied after generating the random numbers?


Hi, I have a question about the last exercise. What would be a smart way to search over hyperparameters? Is it possible to apply grid search or random search over hyperparameters, like scikit-learn’s GridSearchCV or RandomizedSearchCV?

If not, how should I iterate over a set of hyperparameters?

Thanks in advance for any answers.
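scikit-learn’s search classes expect a scikit-learn-style estimator, so for a hand-written training loop a plain loop is often simpler. Below is a minimal sketch of grid search and random search. `train_and_evaluate` is a hypothetical stand-in with a made-up score; in practice it would train the MLP with the given settings and return validation accuracy:

```python
import itertools
import math
import random

def train_and_evaluate(lr, num_hiddens):
    """HYPOTHETICAL stand-in for training the MLP and returning validation
    accuracy. This toy score simply peaks at lr=0.1, num_hiddens=256."""
    return -(math.log10(lr) + 1) ** 2 - ((num_hiddens - 256) / 256) ** 2

# Grid search: evaluate every combination of the listed values.
grid = {"lr": [0.01, 0.1, 0.5], "num_hiddens": [64, 256, 1024]}
best_score, best_config = -math.inf, None
for lr, num_hiddens in itertools.product(grid["lr"], grid["num_hiddens"]):
    score = train_and_evaluate(lr, num_hiddens)
    if score > best_score:
        best_score, best_config = score, {"lr": lr, "num_hiddens": num_hiddens}
print("grid search:", best_config)

# Random search: sample a fixed budget of configurations instead.
rng = random.Random(0)
hidden_choices = [32, 64, 128, 256, 512, 1024]
best_score_r, best_config_r = -math.inf, None
for _ in range(20):
    lr = 10 ** rng.uniform(-3, 0)  # log-uniform between 0.001 and 1
    num_hiddens = rng.choice(hidden_choices)
    score = train_and_evaluate(lr, num_hiddens)
    if score > best_score_r:
        best_score_r, best_config_r = score, {"lr": lr, "num_hiddens": num_hiddens}
print("random search:", best_config_r)
```

Grid search grows multiplicatively with every added hyperparameter, while random search keeps a fixed budget, which is one reason it is often preferred when several hyperparameters are tuned jointly.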

Hi

How can I download the book in HTML, like the version on the website?

Is it more interactive than learning from the PDF book?

Does the d2l.ai book contain all the course code in PyTorch?

Thanks, I am starting it.

Hi @machine_machine, please check my response at Full pytorch code book for d2l.ai [help]. Thanks for your patience.

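According to the PyTorch docs, torch.randn samples from a normal distribution with mean 0 and variance 1. Multiplying the tensor by 0.01 rescales it to a normal distribution with mean 0 and standard deviation 0.01, so the initial weights are small (about 99.7% of them fall within ±0.03).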

Ans 2. Initializing with small values is needed for stable training, but the values do not have to lie in (0, 1); a distribution over (-1, 1) works as well. The main thing to keep in mind for stable training of deep neural networks is to initialize the parameters so that we avoid both exploding and vanishing gradients.
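A rough numerical sketch of what the initialization scale does, assuming a stack of purely linear layers (no ReLU, to keep the arithmetic simple) of width 64 with weights drawn from N(0, σ²). Each layer scales the signal’s norm by roughly √width · σ, so after 10 layers a too-small σ makes the signal vanish, a too-large σ makes it explode, and σ = 1/√width keeps it on the order of 1. The width, depth, and seed are arbitrary choices:

```python
import math
import random

def output_norm_ratio(weight_std, width=64, depth=10, seed=0):
    """Push a random vector through `depth` linear layers whose weights are
    drawn i.i.d. from N(0, weight_std**2); return ||output|| / ||input||."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    norm_in = math.sqrt(sum(v * v for v in x))
    for _ in range(depth):
        W = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        x = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
    return math.sqrt(sum(v * v for v in x)) / norm_in

width = 64
vanish = output_norm_ratio(0.01, width)                # shrinks toward 0
explode = output_norm_ratio(1.0, width)                # blows up
stable = output_norm_ratio(1 / math.sqrt(width), width)  # stays near 1
print(vanish, explode, stable)
```

The same factors multiply the gradients during backpropagation, which is why the scale of the initialization matters for training stability.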

My answers

constant.

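```python
import torch
from torch import nn

# Assumes num_inputs, num_outputs and relu are defined as earlier in the chapter.

# increasing number of hidden layers: two hidden layers with 128 and 64 units
W1 = nn.Parameter(torch.randn(num_inputs, 128) * 0.01, requires_grad=True)
b1 = nn.Parameter(torch.zeros(128), requires_grad=True)
W2 = nn.Parameter(torch.randn(128, 64) * 0.01, requires_grad=True)
b2 = nn.Parameter(torch.zeros(64), requires_grad=True)
W3 = nn.Parameter(torch.randn(64, num_outputs) * 0.01, requires_grad=True)
b3 = nn.Parameter(torch.zeros(num_outputs), requires_grad=True)
params = [W1, b1, W2, b2, W3, b3]  # pass all six tensors to the optimizer

def net(X):
    X = X.reshape(-1, num_inputs)           # flatten each image
    out = relu(torch.matmul(X, W1) + b1)    # first hidden layer
    out = relu(torch.matmul(out, W2) + b2)  # second hidden layer
    return torch.matmul(out, W3) + b3       # output logits
```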

other hyperparameters (including number of epochs), what learning rate gives you the best results?

number of epochs, number of hidden layers, number of hidden units per layer) jointly?

Thanks

My answers: (I tried to maximize test accuracy)

```python
def net(X):
    X = X.reshape((-1, num_inputs))
    H = relu(X@W1 + b1)  # Here '@' stands for matrix multiplication
    return (H@W2 + b2)
```

For this block of code, I understand that we use the reshape method to flatten the input, but I don’t understand why we pass -1 to reshape so that the size of the first axis is inferred automatically. I think the shape will always be (1, num_inputs), so there seems to be no need to infer the first axis.

Please let me know where my thinking is wrong.

Thank you.
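A plain-Python sketch of how a -1 dimension is resolved, assuming the chapter’s setup of Fashion-MNIST (60,000 training images of 1×28×28) with batch_size = 256. In the training loop, X is a whole minibatch rather than a single image, so its first axis is the batch size; and since 60,000 = 234 × 256 + 96, the last minibatch is smaller (assuming it is not dropped). `infer_shape` is a hypothetical helper that mimics the -1 rule:

```python
def infer_shape(num_elements, shape):
    """Resolve exactly one -1 in `shape`: that axis gets whatever size makes
    the total number of elements match (hypothetical helper)."""
    if shape.count(-1) != 1:
        raise ValueError("expected exactly one -1")
    known = 1
    for s in shape:
        if s != -1:
            known *= s
    if num_elements % known != 0:
        raise ValueError("cannot infer shape")
    return tuple(num_elements // known if s == -1 else s for s in shape)

num_inputs = 28 * 28  # 784 pixels per image
# A full minibatch X of shape (256, 1, 28, 28) ...
print(infer_shape(256 * 1 * 28 * 28, (-1, num_inputs)))  # (256, 784)
# ... and the last, smaller minibatch of 96 images
print(infer_shape(96 * 1 * 28 * 28, (-1, num_inputs)))   # (96, 784)
```

Using -1 lets the same `net` handle any batch size without hard-coding it.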

My solutions to the exercises: 5.2

My exercise:

Q1: When num_hiddens < 4, val_acc decreases dramatically, but when num_hiddens is increased well beyond 256, val_acc stays roughly constant.

Q2: Adding a new hidden layer with enough neurons didn’t affect the result, but one with too few neurons decreased accuracy.

Q3: With a single hidden neuron, the output layer (a linear predictor) can only use the one feature that this representation provides, which is not enough information to work properly.

Q4: With too small a learning rate, the model may not converge within the given number of epochs; with too large a learning rate, the model can’t be trained efficiently (the accuracy curve is not smooth).

Q5: 5.2 The hyperparameter search space is too large.

5.3 MCMC?

Q6: TBD

Q7: I didn’t see a big difference on my device. But theoretically, shouldn’t well-aligned sizes be faster?

Q8: Tested sigmoid and tanh. With the other parameters fixed, I found accuracy: ReLU ≈ tanh > sigmoid.

Q9: Given enough epochs, it doesn’t matter.
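A toy sketch of the Q4 learning-rate behavior, assuming a one-dimensional quadratic loss f(x) = x² in place of the real (noisier, high-dimensional) training loss. Each gradient step multiplies x by (1 − 2·lr), so a tiny rate barely moves in a fixed number of steps, a moderate rate converges, and a rate above 1 flips the sign every step while the magnitude grows:

```python
def run_gd(lr, x0=1.0, steps=20):
    """Gradient descent on f(x) = x**2 (gradient 2x); returns the final x."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x
    return x

too_small = run_gd(0.001)  # barely moves away from 1.0 in 20 steps
moderate = run_gd(0.1)     # close to the minimum at 0
too_large = run_gd(1.1)    # oscillates in sign with growing magnitude
print(too_small, moderate, too_large)
```

The oscillation in the last case is a simplified picture of why a large learning rate produces a non-smooth accuracy curve.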