Andreas_Terzis

small typo: “requried” -> “required”

Thanks again for the great resource you’ve put together!

The purpose of backward propagation is to bring the predictions close to, or even equal to, the labels we provided.

We can only calculate gradients once we know the distance between the prediction and reality.

That is what backward propagation really does.

Hi @RaphaelCS, great question! Please find more details at https://mxnet.apache.org/api/architecture/note_memory to see how we manage memory efficiently.

Thanks for the reference!

I want to check an alternative way of implementing backpropagation that, from my perspective, is equivalent to the normal way. I think we could do just the partial derivative and value calculations during the forward pass, and the multiplications during backpropagation. Is this an effective, or at least equivalent, way to achieve BP? Or do the two differ in some respects?

“I think we could do merely the partial derivation and value calculation during the forwarding pass and multiplication during the back propagation.”

How is that different from BP?

Does BP compute the partial derivatives during the backpropagation step rather than the forward step? I just want to check whether these two methods have the same effect. Thanks for your reply.

The most important thing, in my opinion, is to find out which elements we need in order to calculate the partial derivatives. Backpropagation is only the way to find them. Am I right?

I agree with you, and I now think the two ways mentioned above are actually the same.

In my opinion, we can calculate the partial derivative of h1 w.r.t. x and the partial derivative of h2 w.r.t. h1, and then obtain the partial derivative of h2 w.r.t. x by multiplying the two. I think these calculations can all be done during the forward pass. (x refers to the input; h1 and h2 refer to two hidden layers.)

If I’m not mistaken, we can keep calculating in this manner all the way to the loss, so everything happens in the forward stage.

BTW, perhaps this method requires much more memory to store the intermediate partial derivatives.

Great discussion @Anand_Ram and @RaphaelCS! As you point out, memory may explode if we save all the intermediate gradients. Besides, some of the saved gradients may not be required for the calculation. As a result, we usually do a full pass of forward propagation and then backpropagate.
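
To make the comparison in this sub-thread concrete, here is a minimal NumPy sketch. The two-layer network (`h1 = tanh(W1 x)`, `h2 = W2 h1`), the squared-error loss, and all sizes are assumptions chosen only for illustration. It multiplies the Jacobians in the forward direction, as proposed above, and in the backward direction, and compares the results.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=4)            # input (size is an illustrative assumption)
W1 = rng.normal(size=(5, 4))      # first-layer weights
W2 = rng.normal(size=(3, 5))      # second-layer weights
y = rng.normal(size=3)            # target

# Forward pass: h1 = tanh(W1 x), h2 = W2 h1, loss = 0.5 * ||h2 - y||^2
h1 = np.tanh(W1 @ x)
h2 = W2 @ h1

# Local derivatives of each step
J_h1_x = (1 - h1 ** 2)[:, None] * W1   # dh1/dx, shape (5, 4)
J_h2_h1 = W2                            # dh2/dh1, shape (3, 5)
g_h2 = h2 - y                           # dloss/dh2, shape (3,)

# Forward accumulation: carry the full Jacobian w.r.t. x through the layers
J_h2_x = J_h2_h1 @ J_h1_x               # dh2/dx, a (3, 4) matrix
grad_forward = g_h2 @ J_h2_x

# Reverse accumulation (backprop): carry only a gradient vector backwards
g_h1 = J_h2_h1.T @ g_h2                 # dloss/dh1, shape (5,)
grad_reverse = J_h1_x.T @ g_h1          # dloss/dx, shape (4,)

same = np.allclose(grad_forward, grad_reverse)
print(same, J_h2_x.shape, g_h1.shape)
```

Both orders give the same gradient, since matrix multiplication is associative. The difference is what gets carried along: the forward order keeps a full Jacobian matrix at every layer, while the backward order only keeps a vector the size of one layer, which is in line with the memory concern raised above.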

This link was very interesting in explaining some of mxnet’s efficiency results.

All the figures are missing at that link. Is this a problem with my network environment?

After looking at: https://math.stackexchange.com/questions/2792390/derivative-of-euclidean-norm-l2-norm

I realize:

d/do l2_norm(o - y)^2

= d/do ||o - y||^2

= 2(o - y)

Here’s a good intuitive explanation as well from the top answer at the above link (x is o here):

“points in the direction of the vector away from y towards x: this makes sense, as the gradient of ∥y−x∥^2 is the direction of steepest increase of ∥y−x∥^2, which is to move x in the direction directly away from y.”
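
A quick numerical check of this derivative, as a minimal sketch: the helper names `squared_l2` and `numerical_grad` and the sample vectors are made up for illustration. It compares 2(o - y) against central finite differences.

```python
import numpy as np

def squared_l2(o, y):
    """Squared Euclidean distance ||o - y||^2."""
    return np.sum((o - y) ** 2)

def numerical_grad(f, o, eps=1e-6):
    """Central finite-difference gradient of a scalar function f at o."""
    grad = np.zeros_like(o)
    for i in range(o.size):
        step = np.zeros_like(o)
        step[i] = eps
        grad[i] = (f(o + step) - f(o - step)) / (2 * eps)
    return grad

o = np.array([1.0, -2.0, 0.5])   # illustrative prediction
y = np.array([0.0, 1.0, 0.5])    # illustrative label

analytic = 2 * (o - y)           # points from y towards o
numeric = numerical_grad(lambda v: squared_l2(v, y), o)
print(np.allclose(analytic, numeric, atol=1e-5))
```

The two agree, and the gradient points from y towards o, matching the quoted explanation.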

But I wonder why you apply the L2 norm to (o - y). I think (o - y) is the derivative ∂l/∂o, while the L2 norm is used in the term like ||W||^2, so I think it is a constant term. Thanks.

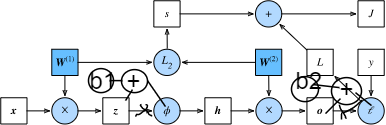

I can understand equation 4.7.12, as shown below, and the order of the elements in the matrix multiplication makes sense, i.e. the derivative of J w.r.t. h (gradient of h) is the product of the transpose of the derivative of o w.r.t. h (which is W2 in this case) and the derivative of J w.r.t. o (i.e. the gradient of o).
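
As a sanity check on that reading of 4.7.12, here is a minimal NumPy sketch. It assumes o = W2 h with column vectors and, purely for illustration, the loss J = 0.5 * ||o - y||^2 with no regularization term; the sizes are arbitrary. It compares W2^T times the gradient of o against finite differences.

```python
import numpy as np

rng = np.random.default_rng(1)
W2 = rng.normal(size=(3, 5))   # output-layer weights (sizes are assumptions)
h = rng.normal(size=5)         # hidden activations
y = rng.normal(size=3)         # target

def loss_from_h(h_vec):
    """J as a function of h, with o = W2 h and J = 0.5 * ||o - y||^2."""
    o = W2 @ h_vec
    return 0.5 * np.sum((o - y) ** 2)

grad_o = W2 @ h - y            # dJ/do, shape (3,)
grad_h = W2.T @ grad_o         # dJ/dh = W2^T dJ/do, shape (5,)

eps = 1e-6
numeric = np.array([
    (loss_from_h(h + eps * e) - loss_from_h(h - eps * e)) / (2 * eps)
    for e in np.eye(5)
])
print(np.allclose(grad_h, numeric, atol=1e-5))
```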

This is consistent with what Roger Grosse explained in his course that backprop is like passing error message, and the derivative of the loss w.r.t. a parent node/variable = SUM of the products between the transpose of the derivative of each of its child nodes/variables w.r.t. the parent (i.e. the transpose of the Jacobian matrix) and the derivative of the loss w.r.t. each of its children.

However, this conceptual logic is not followed in equations 4.7.11 and 4.7.14, in which the “transpose” part is placed after the “partial derivative” part rather than in front of it. This could lead to a dimension mismatch in the matrix multiplication when implemented in code.

Could the authors kindly explain if this is a typo, or the order in matrix multiplication as shown in the equations doesn’t matter because it is only “illustrative”? Thanks.
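
One way to probe the ordering question numerically is the sketch below. It assumes o = W2 h with column vectors and, for illustration only, J = 0.5 * ||o - y||^2 without the regularization term; the sizes are arbitrary. Under these assumptions it checks the shape and value of (gradient of o) times h^T against finite differences, and tries the reversed order as well.

```python
import numpy as np

rng = np.random.default_rng(2)
W2 = rng.normal(size=(3, 5))   # output-layer weights (sizes are assumptions)
h = rng.normal(size=5)         # hidden activations
y = rng.normal(size=3)         # target

def loss_from_W(W):
    """J as a function of the weight matrix, with o = W h."""
    return 0.5 * np.sum((W @ h - y) ** 2)

grad_o = W2 @ h - y            # dJ/do, shape (3,)
grad_W2 = np.outer(grad_o, h)  # (dJ/do) h^T, shape (3, 5), same as W2

# Finite-difference gradient, one weight entry at a time
eps = 1e-6
numeric = np.zeros_like(W2)
for i in range(W2.shape[0]):
    for j in range(W2.shape[1]):
        step = np.zeros_like(W2)
        step[i, j] = eps
        numeric[i, j] = (loss_from_W(W2 + step) - loss_from_W(W2 - step)) / (2 * eps)

# Reversed order: h^T (1 x 5) times dJ/do (3 x 1) has mismatched inner dimensions
try:
    h[None, :] @ grad_o[:, None]
    reversed_order_works = True
except ValueError:
    reversed_order_works = False

print(grad_W2.shape, np.allclose(grad_W2, numeric, atol=1e-5), reversed_order_works)
```

With column vectors, the product with h^T on the right is an outer product whose shape matches W2, whereas for a vector-to-vector step such as h to o the transposed Jacobian goes on the left. Other layout conventions (for example, row vectors) would change where the transpose appears.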

include bias in the regularization term).

Draw the corresponding computational graph.

Derive the forward and backward propagation equations.

section.

graph? How long do you expect the calculation to take?

Assume that the computational graph is too large for your GPU.

I cannot answer your question, but I am in awe of how rigorously you have asked it!

I wrote it as gently as I could. Hope it helps you with your struggle with mathematical derivation.

As far as I can see, the notation do/dW(2) in (4.7.11) is a vector-by-matrix derivative. Where can I read about how to interpret such notation? The Wikipedia article (“Matrix calculus”) says “These are not as widely considered and a notation is not widely agreed upon.” in the paragraph “Other matrix derivatives”. I tried other sources but haven’t found anything.

Exercise 4 mentions second derivatives. I’m curious: what are some examples of when we want to compute second derivatives in practice?

My solutions to the exs: 5.3

I’m late to this but I did the math and I think you are correct, the “transpose of h” should be put in front of the “partial derivative part”.

My exercise:

If folks are still confused, take a look at